Key Takeaways

- Off-the-shelf LLMs create hidden costs and risk.

- Custom models align better with payer workflows.

- You gain auditability, prompt control, and IP ownership.

- Strategic migration minimizes disruption, maximizes ROI.

- Governance and reuse turn LLMs into infrastructure.

Off-the-shelf LLMs are like rental cars.

They’re clean, convenient, and already gassed up. Great for a quick run across town. But start using them to commute, deliver, and build a business, and the math breaks.

Because reality shows up.

A prompt that used to summarize claims accurately now injects phrasing your compliance team flags. A model update quietly rolls out, and your appeal explainer starts sounding like a chatbot. Your token bill doubles, but no one can explain why. And when you ask for support, you’re told to wait for “the next model release.”

This is the hidden tax of off-the-shelf AI. You don’t see it on day one. But you feel it by quarter two especially if your workflows involve regulated language, member-facing outputs, or plan-specific nuance.

For payer organizations, where model behavior must align with CMS, HIPAA, and internal QA rules, “off-the-shelf” quickly becomes off-the-mark.

Custom LLMs don’t eliminate cost. But they move it from reactive to strategic. You invest upfront, yes. But you trade guesswork for control. You move from vendor drift to prompt traceability. From token inflation to unit-cost optimization. And from generic language to operational alignment.

This post isn’t about bashing commercial models. It’s about recognizing the point where they stop serving your business. And why custom LLMs, when built correctly, don’t just improve ROI. They reset the rules entirely.

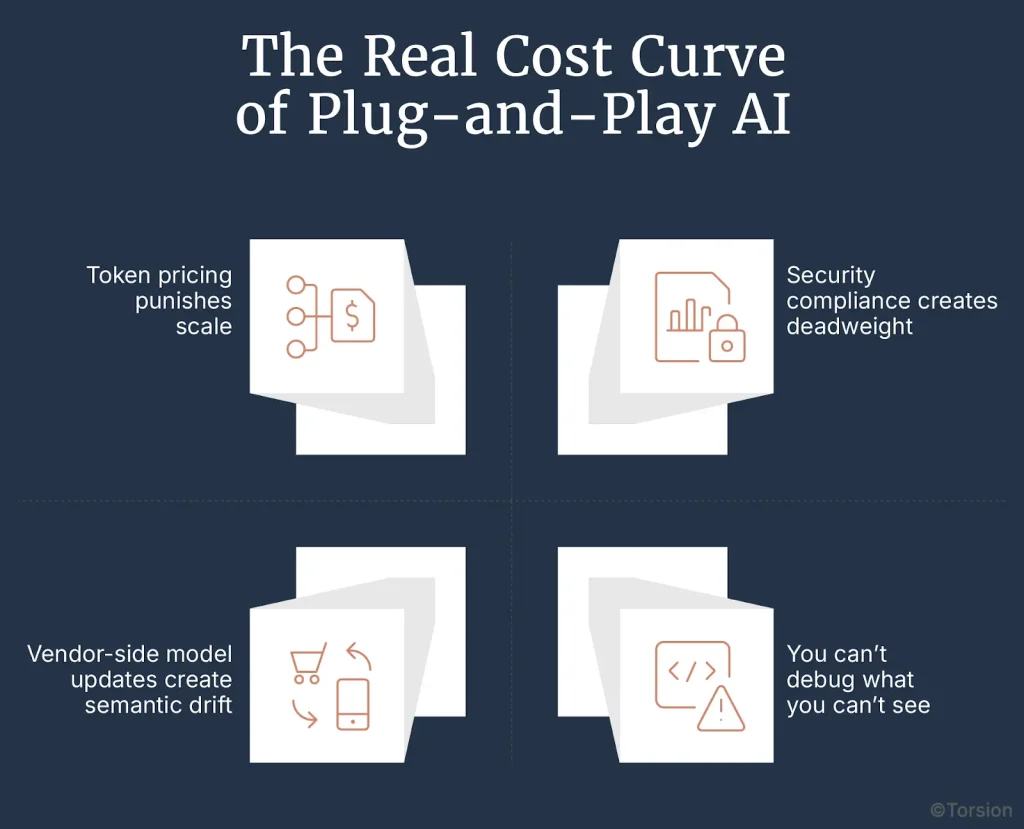

The Real Cost Curve of Plug-and-Play AI

Off-the-shelf LLMs introduce hidden operational costs as usage grows. Payers face token inefficiencies, unpredictable model updates, limited debugging visibility, and repeated security audits. These challenges increase risk and reduce control, making vendor-owned models unsustainable for regulated, high-scale healthcare workflows.

Scaling with plug-and-play LLM APIs creates long-term drag. Token billing accelerates without efficiency, updates trigger silent workflow breaks, and lack of model observability blocks compliance. For payer orgs, this leads to mounting operational debt and brittle infrastructure that can’t evolve with business needs.

That rental car? It doesn’t just cost more over time. It drives differently every week, burns fuel unpredictably, and won’t let you touch the engine.

Off-the-shelf LLMs work the same way. They promise ease but in production, they extract value from scale while limiting control. What looks like plug-and-play turns into plug-and-pay.

Token pricing punishes scale

You don’t control how the model allocates tokens across prompt history, internal reasoning, or output verbosity. So as usage grows, cost scales faster than value. A single member inquiry can trigger multi-turn prompts, redundant context injection, and verbose replies. You’re billed for every word even the ones you would’ve edited out.

Vendor-side model updates create semantic drift

LLMs are non-deterministic by nature. Now pair that with invisible backend upgrades. The same prompt that delivered a clean, CMS-aligned explanation last week now introduces risky phrasing. No version pinning. No diff tools. No rollback path. You’re left auditing behavior you can’t influence.

You can’t debug what you can’t see

A flawed explanation reaches a member. Is it the prompt? The model’s internal compression logic? A token budget cut? Good luck finding out. API-only access means zero observability. No logs. No token traces. No visibility into routing or fallback logic.

Security compliance creates deadweight

Each new integration invites a new round of security reviews, data flow mappings, and risk assessments. HIPAA teams don’t care if the API is stable. They care where the prompt data goes, who can access the outputs, and what happens if a member disputes what the model said.

This is the true cost curve. Not just in dollars, but in lost control.

And the longer you scale on someone else’s model, the more brittle your workflows become until the thing you’re depending on can’t even be audited, let alone improved.

Why Custom LLMs Win on Unit Economics

Custom LLMs reduce long-term costs by eliminating token-based billing, improving domain alignment, and enabling prompt-level control. Payers gain traceable outputs, reduced human correction cycles, and cross-functional model reuse. With strategic tuning and infrastructure ownership, unit economics shift from reactive spend to scalable efficiency.

Custom-built LLMs let payer orgs optimize every layer of performance. Fine-tuned models generate fewer errors, prompt logic cuts token waste, and reuse across departments compounds ROI. The result is a system that’s cheaper per interaction, more compliant by design, and aligned to evolving operational needs.

So what does control look like?

It looks like a model that doesn’t overgenerate because your prompt logic accounts for structure and stop conditions. It looks like token budgets tuned by use case. It looks like outputs you can inspect, debug, and optimize because you own the stack.

And most importantly, it looks like cost curves you shape, not react to.

One-time setup, long-term leverage

The initial lift fine-tuning, infrastructure deployment, system integration can feel heavy. But once the stack is live, the economics flip. You’re not billed per token. You’re orchestrating inference across GPU-optimized nodes, using memory-efficient routing and cached templates for repeated queries. Cost per interaction drops as volume increases, not the other way around.

Domain alignment slashes correction cycles

Off-the-shelf models need layers of prompt engineering just to sound like they understand healthcare. A custom model starts with your plan documents, SOPs, denials, and real member interactions. That context reduces semantic error. It also cuts post-processing cycles. When the model speaks in your tone and understands your structure, human intervention shifts from rewriting to light review.

Prompt logic becomes a performance lever

With open control, you can run prompt A/B tests at scale, optimize for semantic compression, and shorten token context windows without dropping accuracy. You can create hierarchical prompt chains starting small, escalating only when the input complexity demands it. And you can route by cost class: simple queries to smaller models, complex ones to your fine-tuned heavyweight.

Reuse across verticals compounds savings

You’re not just building a model for one function. You’re creating a platform. Claims ops, member services, appeals, internal compliance each can call the same model instance, with different prompt layers and context logic. No duplication. No vendor lock-ins per use case. Just one behavioral foundation, scaled horizontally across the org.

Strategic Payoff Beyond the Balance Sheet

Custom LLMs give payer organizations strategic control beyond cost savings. With internal fine-tuning, teams encode proprietary knowledge, adapt faster to CMS and policy updates, and eliminate vendor dependencies. Transparent audit trails and prompt-level visibility build trust and support compliance, making AI infrastructure a long-term strategic asset.

Owning your LLM infrastructure means more than efficiency it enables differentiation. Payers can embed institutional logic, update AI workflows in sync with policy changes, and scale trust through explainability and version control. The strategic edge lies in behavior you control, not vendor-limited automation.

Lower cost is the starting point. But for payer organizations, the bigger win is what cost control unlocks.

Once you own the LLM infrastructure, you’re no longer negotiating performance you’re shaping it. You shift from managing vendors to operationalizing intelligence across your systems, at your pace, with your logic.

This is where custom LLMs go from efficient to strategic.

You build competitive IP not just another LLM instance

Every payer has its own way of framing coverage. The tone in denial letters. The flow of member FAQs. The nuance in plan tiering. When you fine-tune a model on your documents, member comms, and case histories, you’re codifying that voice. No other org can replicate it. That becomes a strategic asset not just automation, but embedded expertise.

You respond to policy change faster than the market

When CMS updates a regulation or a state law shifts benefit language, you don’t wait for a vendor roadmap. You adjust prompts, retrain specific modules, re-test outputs in staging. With internal tuning, you cut response time from weeks to days staying ahead of compliance exposure, not reacting to it.

You reduce systemic fragility by eliminating third-party bottlenecks

When every escalation depends on a vendor ticket, you’re bottlenecked. When you run your own inference stack, you define the escalation paths. You route by risk. You implement safety logic. You scale up or roll back without external approval. That agility becomes operational muscle.

You operationalize trust through visibility

Custom LLMs give you version history, prompt lineage, and inference logs. You don’t just tell auditors “we fixed it” you show them how, when, and why. That traceability doesn’t just satisfy compliance. It builds internal confidence. The more people can see the system work, the more they trust it to scale.

In regulated industries, trust isn’t earned by performance alone. It’s earned by behavior. And behavior must be observable, governable, and consistent even under pressure.

That’s the edge custom models deliver. Not just better economics but infrastructure that moves with your business, not ahead of it or behind it.

How to Shift Without Disruption

Payer orgs can migrate to custom LLMs safely using phased deployment. Start with internal workflows, run parallel inference, and integrate governance from day one. Use domain-specific data for fine-tuning and validate behavior before scaling. This approach minimizes risk while building toward audit-ready, production-grade intelligence.

The shift to custom LLMs doesn’t require ripping out existing systems. Payers can begin in non-member-facing workflows, test outputs in parallel, and fine-tune on plan-specific data. Governance should be embedded early prompt versioning, audit trails, and fallback logic to support safe, scalable deployment across regulated AI environments.

By now, the case for custom LLMs is clear. But moving from vendor-hosted models to self-governed infrastructure isn’t a weekend project. It takes technical sequencing, organizational buy-in, and the ability to show progress without creating risk.

Fortunately, payer orgs don’t need to start from zero. Most already have workflows, data, and governance layers that can anchor the shift. The goal isn’t speed. It’s precision.

Here’s how high-maturity teams are making the transition without disruption.

Start behind the scenes where mistakes don’t escalate

Before touching member comms, deploy custom LLMs in internal tools. Claim summarization, benefit validation, and pre-review QA flows give you measurable volume and well-understood output requirements. If a response is off, it’s corrected internally. That creates a safe space to test prompt logic, monitor drift, and benchmark real-world ROI.

Run inference side by side so nothing gets missed

Dual-routing isn’t just a best practice. It’s essential. Mirror the same input across both your proprietary and custom LLM stacks. Analyze not just output accuracy, but tone fidelity, token efficiency, and audit-readiness. This lets teams validate semantic quality under live load before making cutovers that affect production.

Operationalize governance early before it slows you down

Don’t treat prompt version control, response logging, or audit tagging as post-launch features. Bake them into the pilot. Every prompt edit should be tracked. Every model update documented. Every hallucination or policy misread classified by severity. That baseline becomes your safety net as use cases grow more complex.

Use your own data but don’t treat it all equally

Start with clean, high-signal sources. Past denials, appeal responses, plan docs, and internal comms templates provide high-leverage examples for fine-tuning. Avoid overfitting on noisy transcripts or overly broad data dumps. The tighter the domain alignment, the fewer the corrections later.

The Hidden Tax Isn’t Just Money. It’s Momentum

Token costs can be tracked. Vendor fees can be forecasted. But the real cost of off-the-shelf LLMs isn’t just financial, rather a strategic inertia.

Every time a model update breaks your workflow, every time you need a workaround for tone control, every time your compliance team flags an output and you can’t trace why you’re losing time. Not just in hours, but in organizational velocity.

Payer orgs that stick with black-box models aren’t just paying more. They’re falling behind.

Because custom LLMs aren’t just cheaper in the long run. They’re smarter in the short run. They adapt faster, behave consistently, and reflect the policies, tone, and trust signals that define your member experience.

This is about more than ROI. It’s about building systems that move at the speed of your business and don’t drag it down.

And as the industry matures, the divide will be clear: those who own their intelligence, and those who rent it at a premium.

The question isn’t whether you can afford to go custom. It’s whether you can afford to keep waiting.