Key Takeaways

- Poor interoperability costs U.S. healthcare $30B annually

- Most health systems still operate on fragmented legacy systems

- FHIR adoption is improving but uneven across vendors

- Enterprise AI fails without clean, connected, governed data

- Open-source stacks provide scalable, secure pathways to unlock value

- Federal mandates like TEFCA and the Cures Act are accelerating change

- Interoperability isn’t just a tech problem; it’s a systems decision

Healthcare’s data fragmentation problem is persistent and, to say the least, expensive.

According to the Center for Interoperability and Healthcare Information Technology, poor data exchange costs the U.S. healthcare system more than $30 billion a year. That figure reflects time lost to manual reconciliation, duplicative testing, system rework, and brittle one-off integrations that barely hold under pressure.

But the financial cost is only part of the picture.

The Human Cost Is Everywhere

The breakdown isn’t just technical; it plays out in real workflows.

- A patient waits longer for treatment because imaging from a different facility didn’t transfer in time.

- A nurse repeats vitals because earlier readings weren’t visible.

- A clinician misses a critical note because it was buried in a disconnected system.

These moments add up. They cause delays, duplication, and in some cases, real harm.

And on the clinician side? More time spent toggling systems means less time at the bedside and more time charting what someone else already documented.

What Needs to Change

Enterprise AI has the power to unlock precision, speed, and automation, but not without foundational interoperability.

Here’s the thesis:

Open-source Enterprise AI, when paired with strong data governance and real FHIR alignment, can finally break down the walls between systems, offering a system-wide path that’s more transparent, cost-effective, and scalable than proprietary patchwork.

This is about rewiring what’s already there: cleanly, ethically, and with tools built to connect.

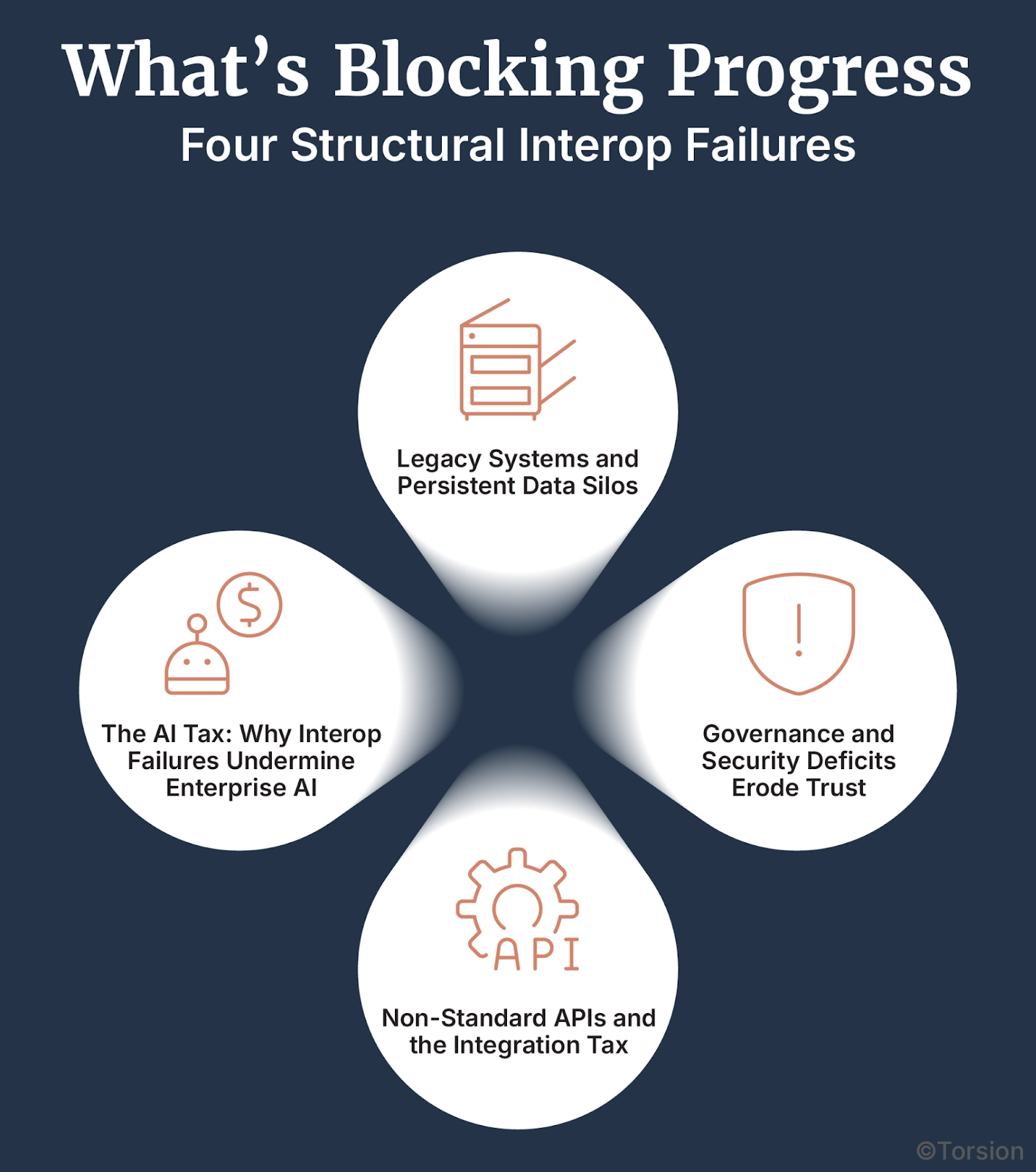

What’s Blocking Progress: Four Structural Interop Failures

The cost of broken interoperability is clear. What’s less visible, but more dangerous, are the systemic blockers that keep it broken.

Healthcare doesn’t lack standards. It lacks adoption, alignment, and architecture. Below are the four structural issues that keep the system fragmented and stall every Enterprise AI initiative built on top of it.

A. Legacy Systems and Persistent Data Silos

Healthcare IT infrastructure is overloaded with proprietary platforms and legacy EHRs that were never built to talk to each other.

- Many organizations operate hundreds of disconnected systems, each siloed by department or vendor

- Mismatches between coding systems (ICD-10, SNOMED CT, CPT) force constant translation and add friction

- APIs are often closed. Event streaming? Rare.

- Lab (LIS), radiology (RIS), and imaging (PACS) data remain locked in point systems

- Up to 80% of healthcare data is unstructured, making unification even harder

The result? A heavy drag on operations and enormous integration overhead: custom extract builds, brittle workarounds, and vendor lock-in at every layer.
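To make the coding-system mismatch concrete, here is a minimal Python sketch of normalizing condition codes through a crosswalk. The single mapping entry is illustrative (ICD-10-CM E11.9 to SNOMED CT 44054006, both type 2 diabetes concepts), and the `Condition` type, source labels, and `LOCAL-123` code are hypothetical. A real pipeline would load maintained terminology maps rather than a hand-written dict.

```python
from dataclasses import dataclass

# Illustrative crosswalk only. Production systems should load maintained
# terminology maps instead of a hand-written dict.
ICD10_TO_SNOMED = {
    "E11.9": "44054006",  # type 2 diabetes mellitus (ICD-10-CM -> SNOMED CT)
}


@dataclass
class Condition:
    source_system: str  # hypothetical name of the originating system
    code: str           # code as recorded by that system


def normalize(conditions):
    """Split records into (source, SNOMED CT code) pairs and codes needing review."""
    mapped, needs_review = [], []
    for c in conditions:
        snomed = ICD10_TO_SNOMED.get(c.code)
        if snomed is None:
            needs_review.append(c)  # flag for a terminology steward; never guess
        else:
            mapped.append((c.source_system, snomed))
    return mapped, needs_review


records = [
    Condition("clinic_ehr", "E11.9"),
    Condition("billing", "LOCAL-123"),  # hypothetical local code with no mapping
]
mapped, needs_review = normalize(records)
```

Routing unmapped codes to review instead of guessing is the design choice that matters here: a visible gap is easier to fix than a silent mis-mapping.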

B. Non-Standard APIs and the Integration Tax

When APIs aren’t aligned to a universal contract, every integration becomes bespoke.

- Without standardization, teams are stuck with fragile point-to-point connections

- Development teams waste cycles recreating the same glue code across vendors

- Costs rise fast due to both duplicative builds and long-term vendor lock-in

FHIR has promise, and adoption is improving: 90% of health systems are expected to adopt FHIR APIs by 2025. But until implementations are consistent, the integration tax persists.
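A rough way to see the integration tax: if every system has to exchange data with every other one, bespoke point-to-point interfaces grow quadratically, while a shared contract grows linearly. The sketch below assumes full connectivity and counts each contract implementation as one unit of work, which simplifies real projects considerably.

```python
def point_to_point_links(n_systems: int) -> int:
    """Bespoke interfaces if every pair of systems connects directly: n(n-1)/2."""
    return n_systems * (n_systems - 1) // 2


def shared_contract_links(n_systems: int) -> int:
    """Implementations needed if each system adopts one shared API contract: n."""
    return n_systems


for n in (5, 20, 100):
    print(f"{n} systems: {point_to_point_links(n)} point-to-point vs "
          f"{shared_contract_links(n)} shared-contract")
```

With 20 systems, that works out to 190 bespoke interfaces versus 20 contract implementations.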

C. Governance and Security Deficits Erode Trust

Even within a single organization, teams hesitate to share data. Why? Because governance isn’t clear and risk isn’t shared.

- PHI breaches and HIPAA/GDPR penalties create fear

- Public trust suffers when systems leak or fail audits

- Many orgs lack basic role-based access controls (RBAC), encryption policies, or audit trails

You can’t build Enterprise AI on a dataset no one’s willing to share. Without policy-first architecture, interoperability stops before it starts.
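Policy-first architecture starts with basics like role-based access and an audit trail. The following is a minimal sketch, not a production access-control system: the roles, permission strings, and in-memory log are all hypothetical.

```python
from datetime import datetime, timezone

# Hypothetical role-to-permission policy. Real policies come from your
# governance process and identity provider.
ROLE_PERMISSIONS = {
    "clinician": {"read:phi", "write:notes"},
    "data_scientist": {"read:deidentified"},
    "auditor": {"read:audit_log"},
}

# In production this would be an append-only, tamper-evident store.
AUDIT_LOG = []


def authorize(user: str, role: str, permission: str) -> bool:
    """Check a permission against the role policy and record the decision."""
    allowed = permission in ROLE_PERMISSIONS.get(role, set())
    # Log every decision, allowed or denied, so access can be reviewed later.
    AUDIT_LOG.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "permission": permission,
        "allowed": allowed,
    })
    return allowed
```

Logging denied requests as well as allowed ones is deliberate; audits often care most about attempts to reach data outside a user's role.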

D. The AI Tax: Why Interop Failures Undermine Enterprise AI

Even when the models are ready, the data rarely is.

Teams spend 50–80% of their time just cleaning and normalizing data across fragmented sources. That’s time not spent building models, validating outcomes, or improving care.

The cost?

- Failed PoCs that never graduate to production

- Underperforming tools built on partial or stale inputs

- ROI that evaporates the moment pilot funding ends

Enterprise AI only works when the data behind it moves: cleanly, securely, and in real time.
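Much of that cleaning time goes into reconciling the same measurement reported differently by different systems. A minimal sketch, assuming two hypothetical sources with different field names and temperature units:

```python
from collections.abc import Iterable


def from_source_a(row: dict) -> dict:
    # Hypothetical source A: Fahrenheit, fields "pid", "taken_at", "temp_f".
    return {
        "patient_id": row["pid"],
        "timestamp": row["taken_at"],
        "temp_c": round((row["temp_f"] - 32) * 5 / 9, 1),
    }


def from_source_b(row: dict) -> dict:
    # Hypothetical source B: already Celsius, but different field names.
    return {
        "patient_id": row["PatientID"],
        "timestamp": row["ObsTime"],
        "temp_c": round(row["TempC"], 1),
    }


def merge(records: Iterable[dict]) -> list[dict]:
    """Keep one reading per (patient, timestamp); the first record seen wins."""
    seen, merged = set(), []
    for r in records:
        key = (r["patient_id"], r["timestamp"])
        if key not in seen:
            seen.add(key)
            merged.append(r)
    return merged
```

Here the first source wins when two readings collide; a real pipeline would more likely flag conflicting values for review than silently drop one.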

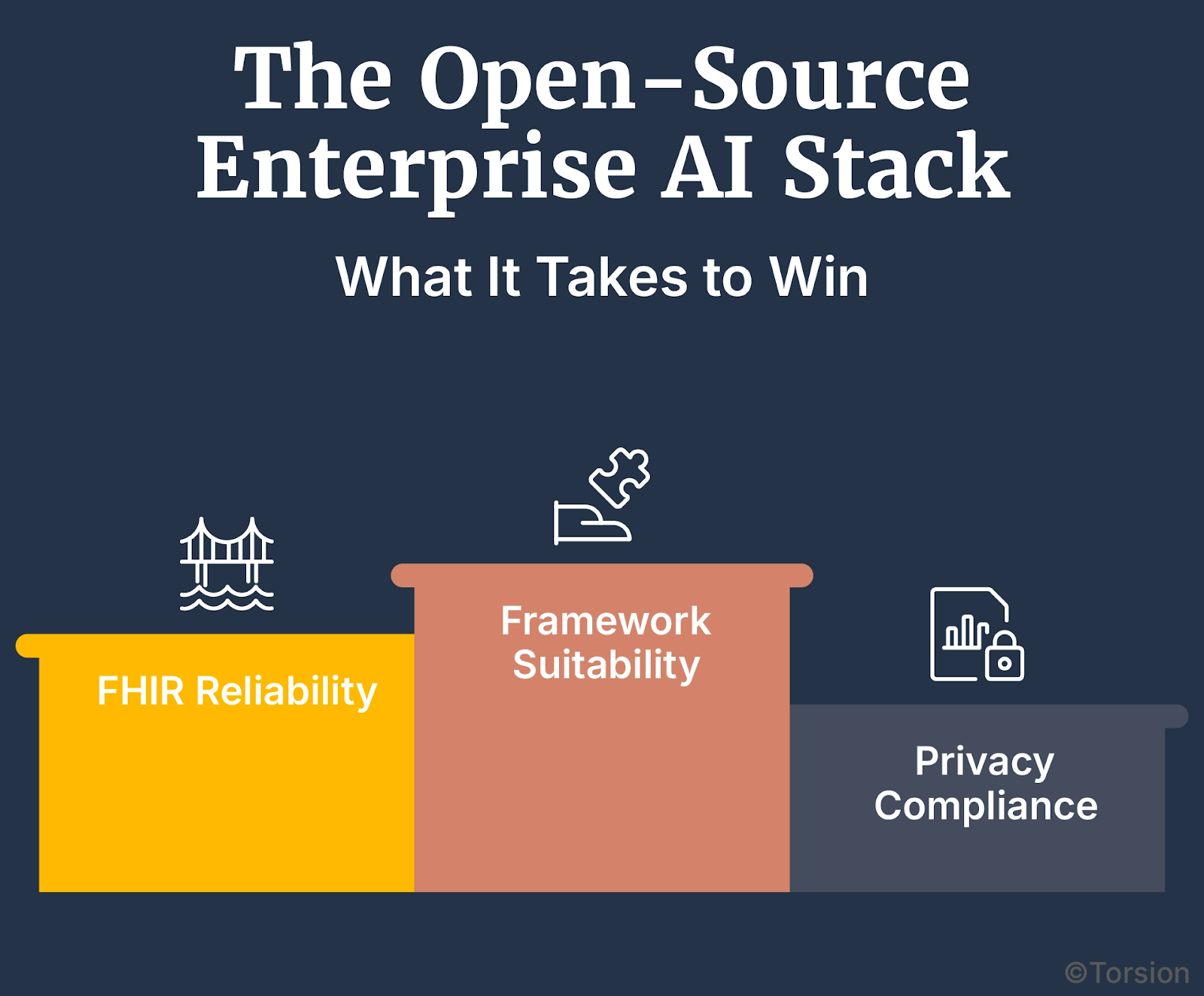

The Open-Source Enterprise AI Stack: What It Takes to Win

If closed systems made this mess, open ones can help clean it up. But this isn’t about dumping tools onto already overwhelmed tech teams. What works now are modular, standards-driven frameworks that plug into real workflows without creating more sprawl.

Here’s what that looks like in practice.

Not All Frameworks Fit the Job

Tools like TensorFlow, PyTorch, and OpenShift AI are common across industries. What makes them valuable in healthcare is how they handle mess.

- You can build custom pipelines to pull structure from clinical notes

- They make it possible to add de-identification steps before model training

- They don’t need everything to be perfect or even labeled

In systems where most of the data is still free-text or trapped in attachments, that flexibility matters more than speed.
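As a toy illustration of pulling structure from notes after a de-identification pass, the sketch below uses regular expressions. The patterns are assumptions for illustration and cover only a few formats; real de-identification (for example, the 18 identifier categories under HIPAA Safe Harbor) and clinical NLP need far broader, validated tooling.

```python
import re

# Toy patterns for illustration only; they miss many real-world formats.
PHONE = re.compile(r"\b\d{3}-\d{3}-\d{4}\b")
DATE = re.compile(r"\b\d{1,2}/\d{1,2}/\d{4}\b")
BP = re.compile(r"\bBP\s*(\d{2,3})/(\d{2,3})\b")


def deidentify(note: str) -> str:
    """Replace phone numbers and full dates with placeholder tokens."""
    note = PHONE.sub("[PHONE]", note)
    return DATE.sub("[DATE]", note)


def extract_bp(note: str) -> list[tuple[int, int]]:
    """Pull (systolic, diastolic) pairs out of free text."""
    return [(int(s), int(d)) for s, d in BP.findall(note)]
```

On the note `"Seen 3/14/2024. BP 142/91, recheck. Call 555-123-4567."`, `deidentify` yields `"Seen [DATE]. BP 142/91, recheck. Call [PHONE]."`, and `extract_bp` on that result returns `[(142, 91)]`.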

FHIR Isn’t Flashy, But It Works

FHIR adoption is rising fast and is projected to reach 90% of health systems by 2025. But using it well takes more than flipping a switch.

A solid setup usually includes:

- SMART on FHIR for app access

- HAPI FHIR for testing and validation

- Security tools like OAuth2 and role-based controls

Some systems have used this setup to cut data handoff times by nearly half. And when models don’t have to wait for clean input, they actually work.
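For a sense of what a standards-aligned request looks like, here is a sketch of a FHIR R4 search for one patient's HbA1c observations (LOINC 4548-4) carrying an OAuth 2.0 bearer token. The base URL, token, and patient ID are placeholders; in a SMART on FHIR app the token would come from the authorization flow. The request is built here but not sent.

```python
from urllib.parse import urlencode
from urllib.request import Request

# Placeholder endpoint and token, assumed for illustration.
FHIR_BASE = "https://fhir.example.org/r4"
ACCESS_TOKEN = "example-token"


def observation_search(patient_id: str, loinc_code: str) -> Request:
    """Build (but do not send) a FHIR search for one patient's observations."""
    params = urlencode({
        "patient": patient_id,
        # Token search syntax: system|code
        "code": f"http://loinc.org|{loinc_code}",
    })
    return Request(
        f"{FHIR_BASE}/Observation?{params}",
        headers={
            "Accept": "application/fhir+json",
            "Authorization": f"Bearer {ACCESS_TOKEN}",
        },
    )


req = observation_search("123", "4548-4")
```

Against a real or test server (for example, a local HAPI FHIR instance), `urllib.request.urlopen(req)` would send it. The same search shape should work on any conformant server that supports the standard `patient` and `code` search parameters on Observation.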

Privacy Is the Dealbreaker

Enterprise AI only scales if it respects the rules. That’s where federated learning enters the picture.

It lets teams train on sensitive data without moving it. Each site trains a local copy of the model and sends back model updates, not the data; a central server combines those updates into the shared model, so raw patient records never leave the site.

It’s not plug-and-play, but it’s workable. And it’s one of the few ways to collaborate across institutions without triggering legal panic.
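A minimal sketch of the federated idea, assuming a FedAvg-style scheme with a one-parameter linear model and three hypothetical sites holding synthetic data. Each site trains locally and returns only its updated weight and sample count; the coordinator averages the weights.

```python
# FedAvg-style sketch: one-parameter linear model y = w * x trained across
# sites that never share their raw (x, y) pairs. Site data is synthetic.
SITES = {
    "hospital_a": [(0.1, 0.2), (0.2, 0.4), (0.3, 0.6)],
    "hospital_b": [(0.5, 1.0), (0.6, 1.2)],
    "hospital_c": [(0.9, 1.8), (1.0, 2.0), (1.1, 2.2), (1.2, 2.4)],
}


def local_update(w, data, lr=0.1, epochs=5):
    """Run gradient descent on mean squared error using only local data."""
    for _ in range(epochs):
        grad = sum(2 * x * (w * x - y) for x, y in data) / len(data)
        w -= lr * grad
    return w, len(data)  # only the weight and sample count leave the site


def federated_average(rounds=50):
    """Coordinator loop: broadcast weight, collect local updates, average."""
    w_global = 0.0
    for _ in range(rounds):
        updates = [local_update(w_global, data) for data in SITES.values()]
        total = sum(n for _, n in updates)
        # Weight each site's model by how many samples it trained on.
        w_global = sum(w * n for w, n in updates) / total
    return w_global
```

Because every site's synthetic data lies on the same line, the shared weight converges to 2.0. Real clinical data is rarely that uniform across sites, and model updates can still leak information, which is why production setups typically add safeguards such as secure aggregation or differential privacy.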

This Already Exists

UPMC has been using open frameworks to connect messy systems for years. On the research side, the Massachusetts Open Cloud runs diagnostics on live patient data without centralized access.

They’re not waiting on regulation to figure this out. They’re shipping and seeing fewer delays, cleaner data flow, and more useful model outputs.

The Policy and Ethics Layer: Unlocking Trust and Acceleration

Even the best-built stack falls apart without policy alignment. That’s a legal problem, yes, but also a momentum problem.

What’s finally changing is the policy environment. And when paired with clear ethical guardrails, it’s starting to remove the blockers that used to stall data-sharing initiatives for months, sometimes years.

Federal Rules Are Starting to Force the Issue

Until recently, there wasn’t much pressure to stop hoarding data. That’s changing.

- The 21st Century Cures Act prohibits information blocking: practices that unreasonably interfere with the access, exchange, or use of electronic health information

- TEFCA (the Trusted Exchange Framework and Common Agreement) aims to create a national framework for trusted exchange

- CMS’s interoperability rules require standardized APIs for patient access and payer-to-payer data sharing

What used to be a nice-to-have is now showing up in compliance reports. And for organizations still on the fence, that’s turning into action.

Openness Makes the Market Work

Closed systems stall progress. Open data standards, by contrast, let teams move faster and give smaller players room to compete.

To make this work at scale, the ecosystem needs:

- Publicly maintained FHIR libraries

- Lightweight middleware that doesn’t require full platform rebuilds

- Clear, certifiable ways to prove compliance with open protocols

These aren’t moonshot ideas. They’re practical building blocks, and they reduce the long-term cost of integration across the board.
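Lightweight middleware often means a thin translation layer rather than a platform rebuild. As a hypothetical example, this sketch maps one legacy HL7 v2 PID segment into a minimal FHIR Patient resource. It ignores HL7 escape sequences, repeating fields, and the validation a real adapter would need.

```python
# Map HL7 v2 administrative sex codes to FHIR gender codes.
GENDER = {"M": "male", "F": "female", "O": "other", "U": "unknown"}


def pid_to_fhir_patient(segment: str) -> dict:
    """Translate one pipe-delimited PID segment into a minimal FHIR Patient dict."""
    fields = segment.split("|")
    if fields[0] != "PID":
        raise ValueError("expected a PID segment")
    fields += [""] * max(0, 9 - len(fields))  # pad so PID-3..PID-8 always exist

    mrn = fields[3].split("^")[0]       # PID-3: patient identifier
    name_parts = fields[5].split("^")   # PID-5: family^given^...
    family = name_parts[0]
    given = name_parts[1] if len(name_parts) > 1 else ""
    dob = fields[7]                     # PID-7: date of birth, YYYYMMDD

    name = {"family": family}
    if given:
        name["given"] = [given]
    patient = {
        "resourceType": "Patient",
        "identifier": [{"value": mrn}],
        "name": [name],
        "gender": GENDER.get(fields[8], "unknown"),  # PID-8: administrative sex
    }
    if len(dob) >= 8:
        patient["birthDate"] = f"{dob[:4]}-{dob[4:6]}-{dob[6:8]}"
    return patient
```

For the segment `PID|1||12345^^^HOSP^MR||Doe^Jane||19800115|F`, this returns a Patient with identifier `12345`, family name `Doe`, given name `Jane`, gender `female`, and birth date `1980-01-15`.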

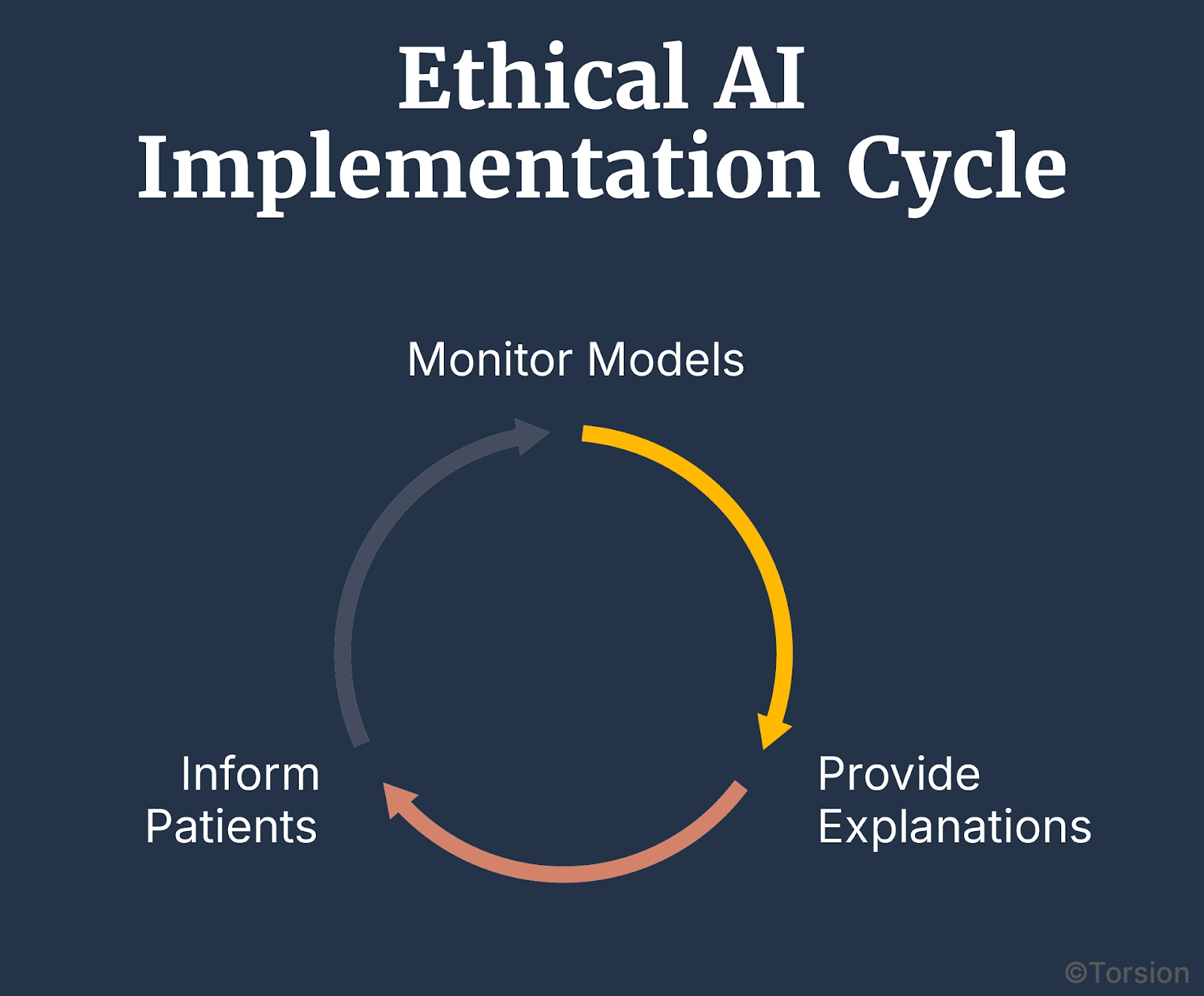

Ethics Can’t Be an Afterthought

Trust doesn’t come from performance; it comes from transparency.

- Teams need tools to monitor models for drift, bias, and blind spots

- Clinicians need explanations that make sense in context, not after-the-fact debug logs

- Patients need to know what’s happening with their data, and why it matters

Some systems are already using SHAP, LIME, and in-model interpretability tools to surface that logic in real time. Others are embedding audits into the deployment pipeline so no one’s guessing after a misfire.

That’s how you turn Enterprise AI from a liability into an asset. You build trust into the system, not around it.
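Drift monitoring can start simply. One widely used metric is the population stability index (PSI), which compares how a feature or model score is distributed in production against a training baseline. The sketch below assumes both distributions are already binned into the same bins; the threshold mentioned afterwards is a common rule of thumb, not a clinical standard.

```python
import math


def population_stability_index(expected, actual, eps=1e-6):
    """PSI between two binned distributions given as counts per bin.

    PSI = sum over bins of (a_i - e_i) * ln(a_i / e_i), using proportions.
    """
    if len(expected) != len(actual):
        raise ValueError("distributions must use the same bins")
    e_total, a_total = sum(expected), sum(actual)
    psi = 0.0
    for e, a in zip(expected, actual):
        e_p = max(e / e_total, eps)  # floor avoids log(0) on empty bins
        a_p = max(a / a_total, eps)
        psi += (a_p - e_p) * math.log(a_p / e_p)
    return psi
```

Identical distributions give a PSI of 0; many teams treat values above roughly 0.25 as a shift worth investigating before trusting the model's outputs.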

From Patchwork to Platform: The Interoperability Reset

Policy is finally catching up. Open frameworks are in play. The stack is ready. But unless systems start acting operationally, not just aspirationally, healthcare stays stuck in patchwork mode.

This is a coordination failure.

Add it all up ($30 billion in annual waste, stalled deployments, staff burnout from redundant documentation) and doing nothing becomes the bigger risk.

Especially when open-source Enterprise AI already offers a viable way out.

Interoperability Is a System Flaw, Not a Technical Roadblock

The challenge has never been lack of standards. It’s been a lack of adoption, alignment, and accountability.

With FHIR adoption expected to hit 90% of health systems by 2025, and the healthcare interoperability market projected to quadruple to $24.8 billion by 2035, the infrastructure is on the table. Now it’s a matter of strategy.

What Happens When You Rebuild the Right Way

- You spend less on one-off integrations

- Your teams stop fighting upstream just to get usable data

- Models move out of PoC and into daily use

- CDS tools get sharper, faster, more trusted

- Staff gain back time and trust the system more for it

That’s what smart orgs are already doing with modular, standards-aligned, open-source stacks.

Interoperability won’t fix itself. But it also isn’t out of reach.

Open-source Enterprise AI can cut costs and give healthcare the velocity, transparency, and precision it’s been chasing for years.

The call now is for better leadership:

- If you’re a healthcare exec: make open architecture a strategic priority

- If you shape policy: back standards with clear incentives

- If you build systems: stop solving in silos, solve for scale

The platform is already here. It’s time to stop patching and start rewiring.