Key Takeaways

- GenAI introduces new risks—PHI leakage, hallucinations, and unpredictable outputs.

- Traditional security models are obsolete for LLMs and probabilistic systems.

- Governance must cover the entire Enterprise AI lifecycle, not just app-level access.

- Retrofitting compliance doesn’t work—embed explainability and access controls upfront.

- The new GenAI security stack includes Zero Trust, RBAC, audit trails, and threat modeling.

- Vendor oversight is non-negotiable—look for proof, not promises.

- Mature governance frameworks turn compliance from overhead into infrastructure.

Healthcare payers are deploying Generative AI (GenAI) and Large Language Models (LLMs) at scale—streamlining claims adjudication, appeals, member communication, and documentation. The speed of adoption is driven by tangible efficiency gains, but the underlying security architecture is lagging.

The problem? Traditional security models were designed for static systems with predictable inputs, not probabilistic engines that evolve and adapt. GenAI’s transformative capabilities are matched by its unpredictability, making conventional perimeter-based security architectures obsolete.

The same characteristics that make GenAI indispensable—dynamic learning, contextual inference, emergent reasoning—introduce new attack vectors that legacy systems are ill-equipped to handle. And while the technology advances rapidly, governance frameworks are stagnating.

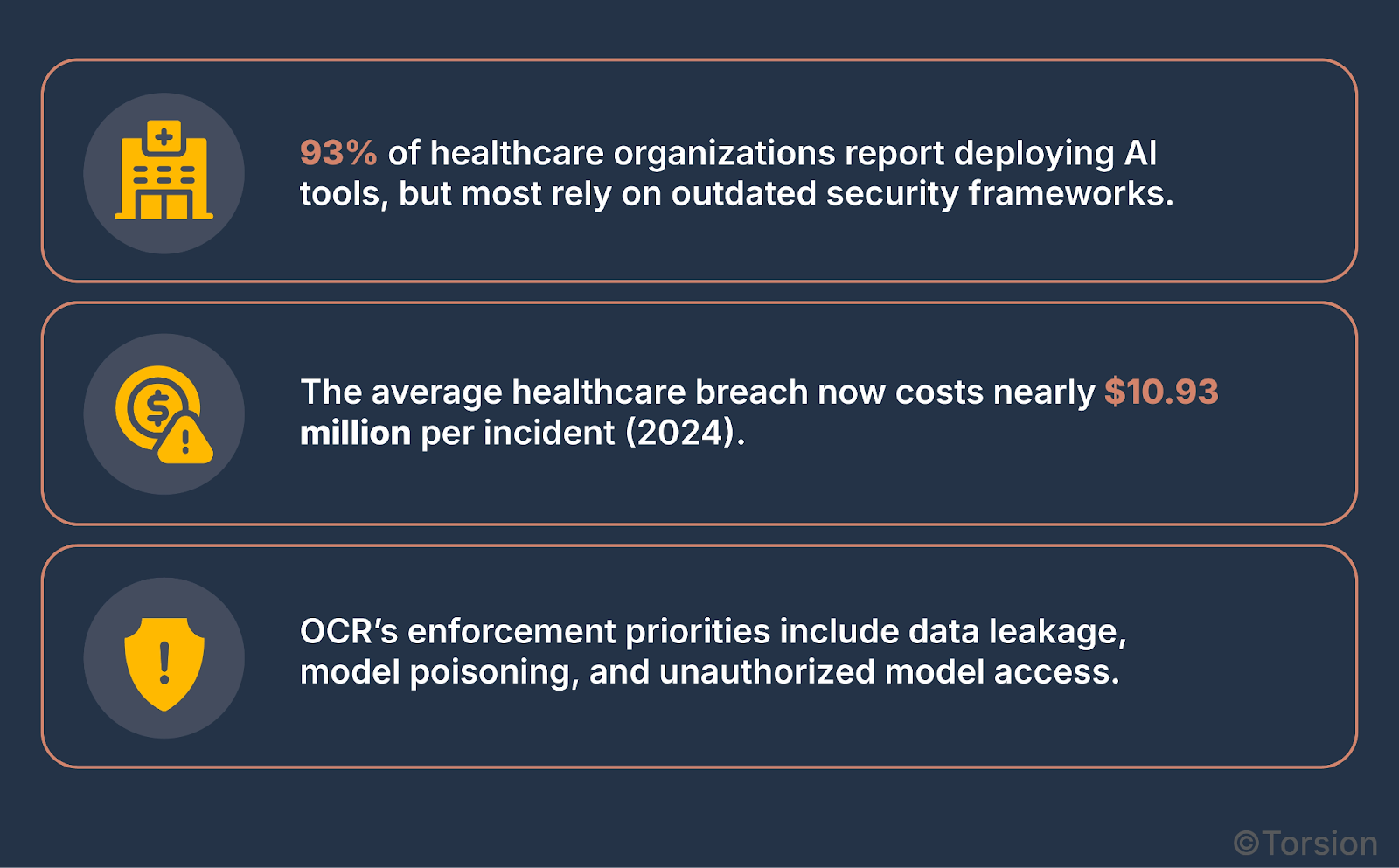

The Real Stakes

Healthcare remains the most breached industry, and GenAI systems are only broadening the attack surface. This isn’t speculative—regulatory bodies like the OCR have made it clear that enforcement will intensify around PHI data leakage, model hallucinations, and training data provenance.

GenAI systems trained on real-world data are now generating unpredictable outputs, making them both a technological asset and a compliance nightmare.

Deploying Enterprise AI systems without robust governance is like deploying a high-frequency trading algorithm without risk management. The risks aren’t just theoretical, they are inevitable.

GenAI Is a Lifecycle Exposure

Traditional perimeter-based security assumes static inputs, known workflows, and predictable outputs. GenAI breaks that model at every level.

You might think that this a system-level vulnerability, but it’s a full lifecycle threat surface. From ingestion and training to inference and retraining, every stage in the GenAI pipeline introduces distinct security, compliance, and governance challenges.

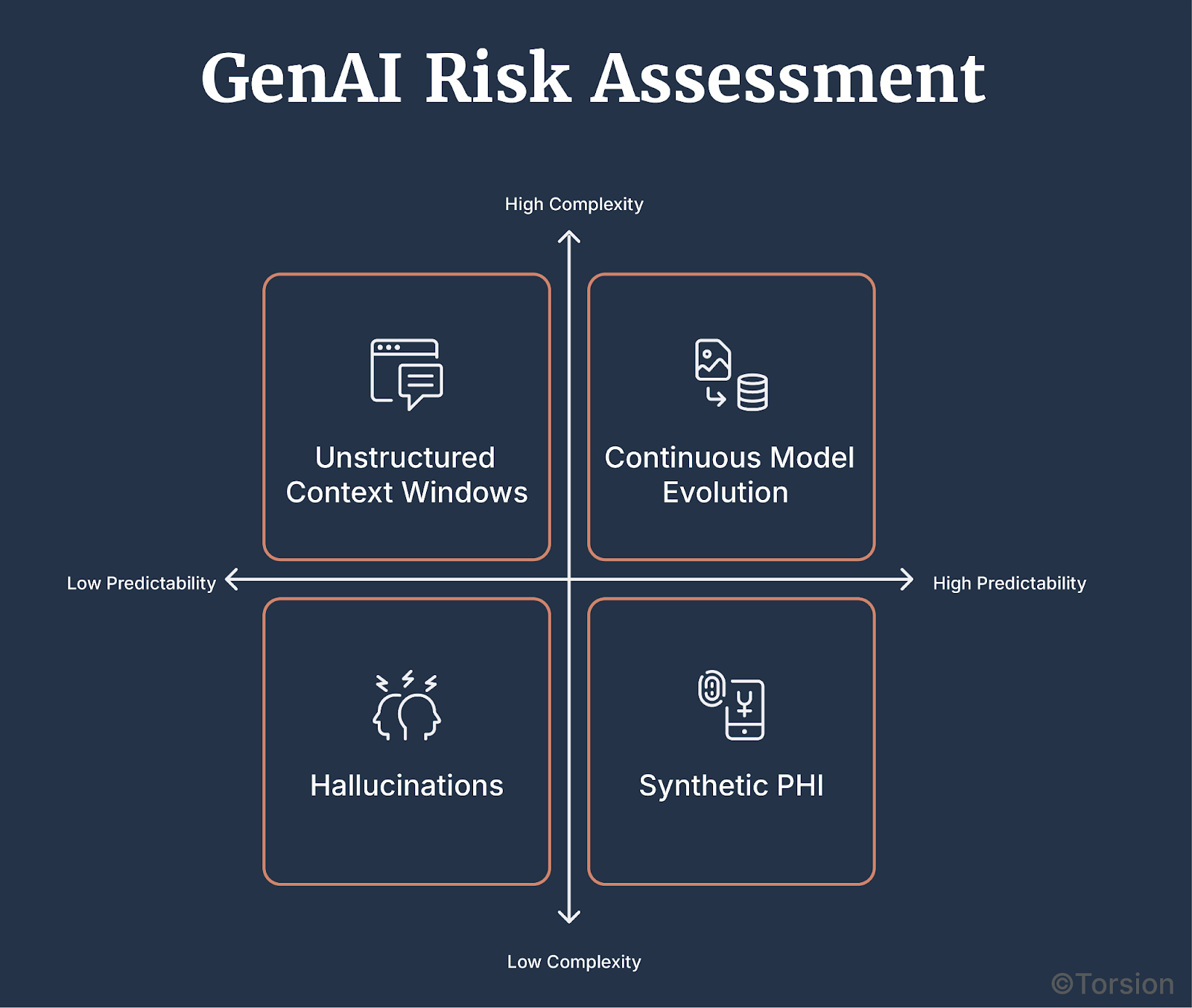

GenAI Disrupts Legacy Assumptions

The architecture of GenAI models creates exposure in ways that security teams weren’t trained to handle:

- Probabilistic Outputs: GenAI doesn’t retrieve, it generates. That means hallucinations, synthetic PHI, and unexpected answers are native behaviors.

- Unstructured Context Windows: Inputs can be anything—clinical notes, appeals, chat logs. Outputs are even less constrained, making monitoring and logging far more complex.

- Nonlinear Learning: Unlike deterministic systems, GenAI models continuously evolve through fine-tuning, reinforcement learning, or user prompts. That adaptability makes threat modeling far more dynamic and risky.

Key Insight: Governance Must Follow the Pipeline

Trying to secure GenAI at the app or interface layer is like installing a vault door on a tent.

Governance needs to follow the entire lifecycle:

- Data ingestion: Is PHI redacted? Are sources documented?

- Training phase: Is model provenance traceable? Is adversarial input tested?

- Inference & deployment: Are outputs logged, explainable, and access-controlled?

- Monitoring & retraining: Can you detect drift or unintentional memorization of PHI?

Treating GenAI like a discrete endpoint rather than an evolving, probabilistic pipeline is a dangerous oversight. That’s why governance must be embedded early and evolve continuously.

Governance Cannot Be an Afterthought

Once GenAI systems are deployed, the cost of bolting on security is steep and the damage is often already done.

Traditional systems allowed for iterative compliance retrofits. GenAI doesn’t.

Its probabilistic nature means it can generate sensitive or misleading content unpredictably. That makes security-by-design non-negotiable.

Why Retrofitting Fails

Most compliance failures with GenAI don’t start with the model. They start with architecture decisions made too late:

- Opaque Vendors: “HIPAA-ready” claims are meaningless without model lineage, training data transparency, and live audit capabilities.

- No Ground Truth Logging: Organizations often realize post-deployment that they lack the logs to explain or trace outputs—a direct OCR violation risk.

- Security-First ≠ AI-First: Enterprise AI teams optimize for iteration speed; security teams optimize for risk reduction. If those incentives aren’t aligned from day one, trust and traceability suffer.

Key Insight: Shift Security Left

GenAI governance must follow a DevSecOps-style model: integrate security, compliance, and audit into every phase of the model pipeline not slap it on after deployment.

That means:

- Start with access control and lineage tracking.

- Define explainability thresholds before launch.

- Design MLOps pipelines with red team input—not just data scientists.

Treating security as a QA step is an artifact of the pre-AI era. For GenAI, governance is not a review gate to its infrastructure.

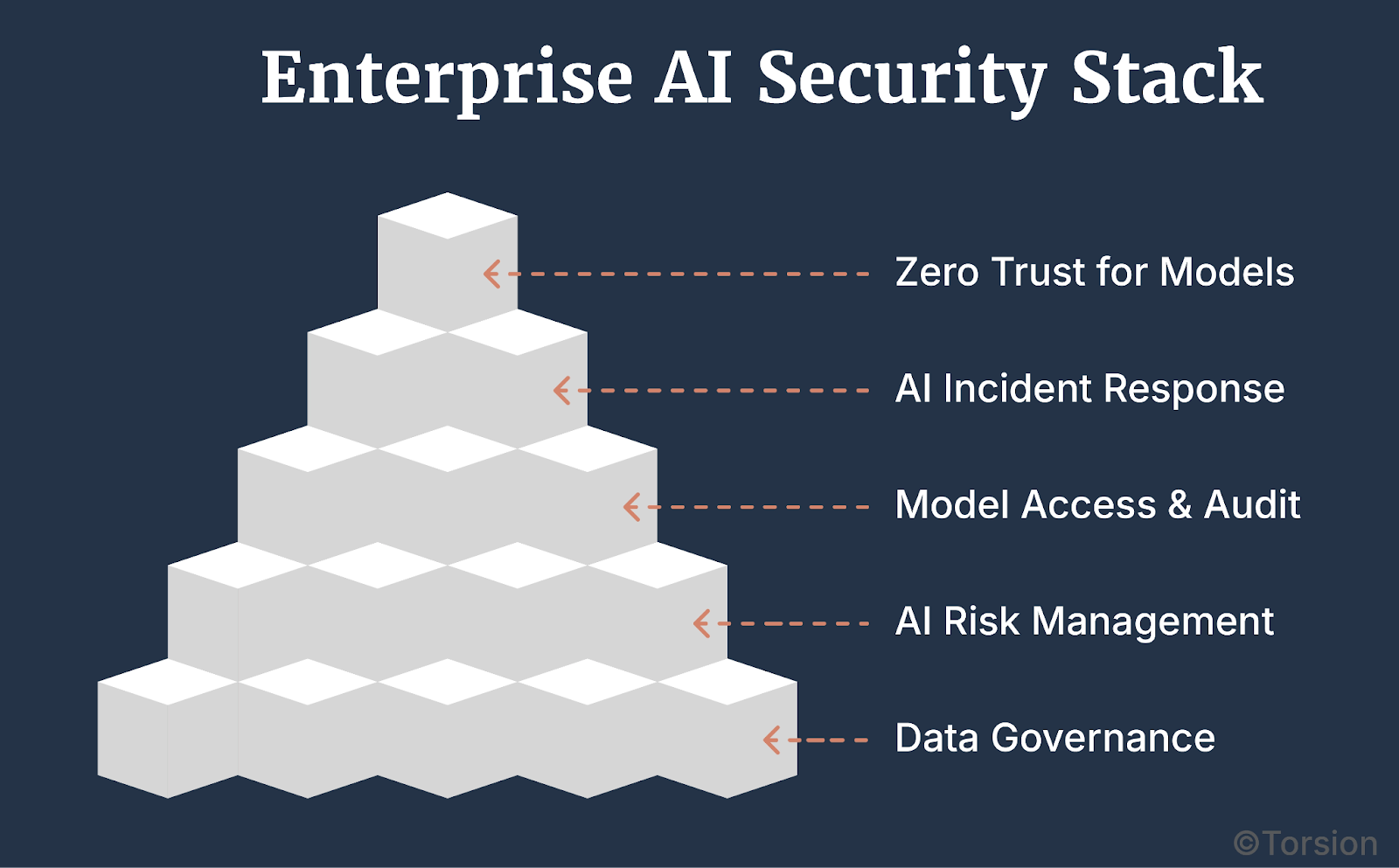

The New Security Stack for Enterprise AI-Driven Payers

Modern GenAI systems can’t be protected by firewalls and encryption alone. Securing them requires a purpose-built security architecture, one that governs not just endpoints, but every phase of the Enterprise AI lifecycle.

It’s time to move from perimeter thinking to model-centric governance. This is the updated Enterprise AI security stack.

A. Data Governance from the Start

Traditional data policies weren’t built for LLMs. They assume control at rest and in transit, not at inference. GenAI demands controls that follow the data into the model’s memory.

Threat Modeling for GenAI

- Account for model-specific risks: prompt injection, output leakage, synthetic PHI.

- Threat modeling isn’t one-time. It evolves with model updates, retraining, and fine-tuning cycles.

Dynamic Redaction & De-ID Pipelines

- Pre-inference PHI scrubbing, not just masking at intake.

- Use AI to classify and strip identifiers before data hits model memory.

- Audit redaction accuracy with synthetic probes.

Synthetic Data for Training

- Replace real-world PHI with statistically equivalent synthetic records.

- Inject differential privacy techniques to prevent memorization and leakage.

B. Enterprise AI Risk Management

Risk management must shift from infrastructure to inference. These aren’t static APIs but reasoning engines.

Model Registries with Version Control

- Every model must have a verifiable origin, training lineage, and permissioned deployment log.

- No shadow models. No undocumented weights.

Explainability Gates

- If a model can’t justify its outputs in plain language or structured metadata, it doesn’t ship.

- Explainability isn’t a bonus, it’s a compliance gate.

Red-Team Simulation for LLMs

- Don’t assume safety, but test for it.

- Simulate drift, bias, and hallucination attacks before production deployment.

C. Model-Level Access and Auditability

Application-layer RBAC isn’t enough. You need fine-grained control at the model level.

Tokenized Access Control

- Issue scoped tokens per role, per task. No shared logins. No open prompts.

Immutable Audit Trails

- Log every query, output, and response token.

- Store lineage metadata alongside outputs for forensic traceability.

Output Scoring and Alerts

- Score inferences for risk: PHI leakage, policy violations, hallucinations.

- Trigger alerts before damage, not after breach reports.

D. AI-Native Incident Response

GenAI systems don’t fail in predictable ways. Incident response must be model-aware.

Custom Playbooks

- Prompt injection? Trigger rollback.

- Hallucination? Flag model version.

- PHI spill? Revoke inference tokens.

Breach Simulation and Response Drills

- Run LLM-specific breach scenarios as part of your SOC drills.

- This isn’t like patching a server—it’s about rewinding a reasoning engine.

E. Zero Trust, Rewritten for Models

Zero Trust isn’t just for networks anymore. It applies to model architecture.

Model Isolation by Risk Profile

- High-sensitivity models (e.g., those handling PHI) get isolated sandboxes.

- No shared inference pools. No cross-context prompts.

Continuous Authentication

- MFA for admins. Session-level validation for model access.

- Integrate risk-based authentication into the inference pipeline itself.

Segmented Model Networks

- Treat LLMs like microservices with firewalls between models.

- A breach in one model shouldn’t expose your entire inference stack.

Vendor Management & Compliance Maturity Model

As healthcare payers accelerate their adoption of Generative AI (GenAI) and Large Language Models (LLMs), managing external partnerships becomes increasingly complex.

Ensuring that these relationships align with rigorous regulatory standards requires a structured Vendor Management Framework and a progressive Compliance Maturity Model.

Without these safeguards, even the most advanced Enterprise AI systems can become compliance liabilities.

A. Vendor Management

Vendors providing GenAI solutions often promise compliance readiness without offering sufficient transparency or traceability.

A robust framework for evaluating, contracting, and monitoring third-party solutions is essential for protecting Protected Health Information (PHI) and ensuring ongoing compliance.

Evaluation Criteria for GenAI Vendors

Data Provenance and Training Sources

- Assess the origins and quality of data utilized by vendors to train their models.

- Ensure data collection methods comply with HIPAA, CMS guidelines, GDPR, and other relevant privacy frameworks.

- Demand transparency regarding the datasets used during training, including lineage tracking and pre-processing techniques.

Explainability Mechanisms

- Require vendors to provide clear methodologies that explain how their models generate outputs.

- Prioritize vendors who can offer model cards detailing architecture, training data sources, purpose, limitations, and intended use cases.

- Ensure explainability thresholds are documented and consistently enforced.

Security Certifications

- Verify that vendors possess recognized security certifications, such as ISO/IEC 27001, SOC 2, or HITRUST.

- These certifications indicate adherence to rigorous information security standards, including access control, encryption, and data integrity.

Business Associate Agreement (BAA) Requirements

Enterprise AI-Specific Provisions

- Incorporate clauses addressing the unique aspects of GenAI systems handling PHI.

- Clearly define responsibilities concerning data lineage, model retraining protocols, access controls, and logging requirements.

- Specify requirements for explainability, auditability, and data protection within Enterprise AI workflows.

Contractual Safeguards

- Establish clear audit rights, allowing periodic reviews of the vendor’s data handling and model operations.

- Mandate compliance with HIPAA, CMS guidelines, and state-specific privacy laws as part of the contractual agreement.

- Require ongoing reporting of changes to the vendor’s infrastructure, security protocols, or compliance posture.

PHI Protection Standards

- Define expectations around PHI handling, encryption standards, access restrictions, and breach notification protocols.

- Ensure vendors provide evidence of compliance readiness, not just assurances of it.

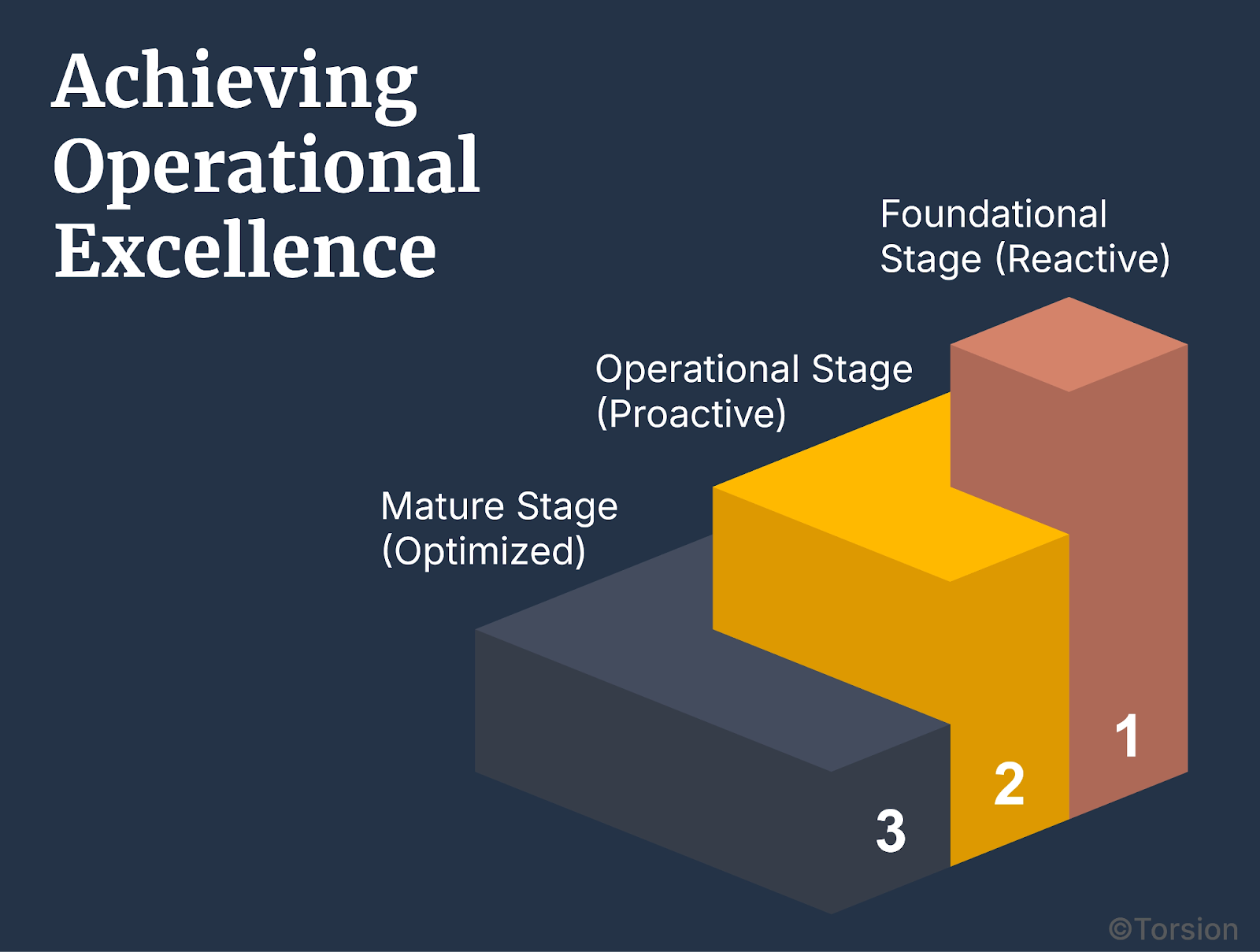

B. Compliance Maturity Model

Effective governance isn’t static; it’s an evolving capability. A Compliance Maturity Model provides a structured approach for progressively enhancing an organization’s security posture.

By advancing through maturity stages, payers can align their Enterprise AI systems with regulatory requirements while simultaneously improving operational resilience.

Assessment Framework

Current State Evaluation

- Assess the organization’s existing posture in areas such as data security, governance structures, model explainability, and audit readiness.

- Identify gaps in compliance and governance that could expose the organization to regulatory penalties or reputational damage.

Staged Implementation Roadmap

Foundational Stage (Reactive)

- Encryption & Access Controls:

- Implement baseline security measures, including robust encryption protocols and stringent access controls.

- Implement baseline security measures, including robust encryption protocols and stringent access controls.

- Baseline Threat Modeling:

- Conduct initial threat assessments to identify potential vulnerabilities across the Enterprise AI lifecycle.

- Conduct initial threat assessments to identify potential vulnerabilities across the Enterprise AI lifecycle.

- Audit Preparation:

- Begin building foundational logging systems to track model interactions and data flow.

- Begin building foundational logging systems to track model interactions and data flow.

Operational Stage (Proactive)

- Comprehensive Audit Logs:

- Maintain detailed logs capturing all interactions with GenAI systems, ensuring traceability and accountability.

- Maintain detailed logs capturing all interactions with GenAI systems, ensuring traceability and accountability.

- Explainability Standards:

- Develop and enforce standards ensuring that all models are interpretable and their decisions are justifiable.

- Develop and enforce standards ensuring that all models are interpretable and their decisions are justifiable.

- Penetration Testing:

- Regularly test systems for vulnerabilities through simulated attacks to strengthen defenses against evolving threats.

- Regularly test systems for vulnerabilities through simulated attacks to strengthen defenses against evolving threats.

- Incident Response Playbooks:

- Create specific playbooks for common GenAI vulnerabilities, including model poisoning, prompt injection, and PHI leakage.

- Create specific playbooks for common GenAI vulnerabilities, including model poisoning, prompt injection, and PHI leakage.

Mature Stage (Optimized)

- Continuous Monitoring:

- Implement systems for ongoing surveillance of Enterprise AI operations, detecting anomalies and deviations in real-time.

- Implement systems for ongoing surveillance of Enterprise AI operations, detecting anomalies and deviations in real-time.

- Automated Breach Detection:

- Utilize AI-driven tools to automatically identify potential security breaches, enabling immediate response actions.

- Utilize AI-driven tools to automatically identify potential security breaches, enabling immediate response actions.

- Integrated MLOps Pipelines:

- Seamlessly incorporate Machine Learning Operations (MLOps) frameworks with existing IT and security infrastructures, promoting efficiency and cohesion.

- Seamlessly incorporate Machine Learning Operations (MLOps) frameworks with existing IT and security infrastructures, promoting efficiency and cohesion.

- Regulatory Engagement:

- Maintain ongoing communication with regulatory bodies to anticipate changes and proactively address compliance challenges.

Key Insight

Progressing through these maturity stages allows healthcare payers to not only comply with current regulations but also to proactively adapt to the rapidly evolving landscape of Enterprise AI technologies.

As GenAI becomes increasingly integrated into healthcare operations, maturity is a necessity.

By implementing a structured Vendor Management Framework and progressing through a defined Compliance Maturity Model, healthcare payers can effectively manage the complexities introduced by GenAI and LLM integrations.

This strategic approach ensures that innovations are leveraged responsibly, with due diligence given to security, compliance, and operational excellence.

Secure GenAI: The Fast Track to Scalable Compliance

Deploying Generative AI (GenAI) and Large Language Models (LLMs) in healthcare isn’t about tinkering with futuristic tech. It’s more about operationalizing systems that drive efficiency, accuracy, and strategic insight.

But as GenAI systems become indispensable, the question is how fast organizations can demonstrate resilience and compliance at scale.

A compliant, secure GenAI architecture is one that lets you innovate without restraint.

From Security Burden to Competitive Advantage

The assumption that security slows innovation is outdated. With the right frameworks, security becomes the engine that accelerates GenAI adoption, delivering speed, compliance, and operational efficiency as a unified package.

1. Enhanced Breach Detection and Response

Reactive systems are relics. Healthcare payers need a proactive security architecture that anticipates breaches before they manifest. GenAI introduces complex, evolving attack surfaces that demand real-time monitoring and anomaly detection.

- Active Surveillance: AI-specific monitoring systems that identify and neutralize anomalies before they escalate.

- Predictive Alerts: Anomaly detection algorithms tailored to LLMs and GenAI workflows—capable of identifying drift, unauthorized access, or malicious inputs.

- Rapid Containment: Automated protocols for immediate rollback and remediation, preventing operational paralysis.

Operational Integrity isn’t just about preventing breaches—it’s about ensuring uptime when threats are detected.

2. Audit-Ready Logging

Audits are a fact of life. Preparing for them shouldn’t be.

Comprehensive, immutable logs that capture every model interaction, decision, and inference provide more than a paper trail. They create transparency and defensibility.

- Seamless Traceability: Continuous logging of all interactions with GenAI systems ensures complete audit readiness.

- Immutability by Design: Secure logging mechanisms that are tamper-proof, timestamped, and immutable—providing verifiable evidence of compliance.

- Accelerated Audits: Demonstrating adherence to HIPAA, CMS, and state-specific regulations without the traditional operational slowdown.

3. Automated Incident Response

Manual processes can’t keep up with evolving threats. Leveraging automated incident response protocols is essential to neutralize threats at machine speed.

- Zero Latency Remediation: Automated rollback mechanisms that instantly revert compromised models to safe states.

- AI-Driven Diagnostics: Continuous monitoring systems that differentiate between genuine threats and false positives with high precision.

- Integrated Recovery Playbooks: Tailored frameworks for handling unique GenAI risks—model poisoning, prompt injection, PHI leakage, and hallucination events.

Strategic ROI: When Compliance Drives Growth

Embedding compliance into your GenAI systems isn’t a hindrance—it’s a multiplier. Secure, compliant systems pay dividends in speed, innovation, and credibility.

1. Reduced Legal Review Time

Building systems with compliance built-in reduces the drag of legal reviews and risk assessments.

- Pre-Configured Audit Templates: Frameworks that streamline legal and compliance reviews through established best practices.

- Regulator Confidence: Systems built on transparent, explainable architecture gain trust from auditors, reducing scrutiny and speeding up assessments.

2. How to Achieve HIPAA Compliance with Generative AI

Compliance roadblocks are typically the result of poor planning, not regulatory overreach. By embedding compliance frameworks into the GenAI pipeline, deployments move faster and with greater precision.

- Integrated MLOps Pipelines: Compliance checkpoints seamlessly woven into development, deployment, and monitoring stages.

- Proactive Governance: Ensuring alignment with HIPAA, CMS, and other regulatory bodies before issues arise.

3. Enhanced Regulator Trust

Compliance isn’t just about meeting standards—it’s about proving you’ve built systems that exceed them.

- Predictive Compliance: Continuous monitoring that evolves with new guidelines and evolving threats.

- Credibility through Transparency: Offering regulators a clear view into model architectures, lineage tracking, and PHI handling.

- Regulatory Alignment as Strategy: Leveraging a proven compliance architecture to strengthen relationships with auditors and regulators alike.

The Playbook for Building Resilient GenAI Systems

Scaling GenAI requires governance frameworks that are purpose-built for adaptability. Governance that works when everything is stable isn’t governance—it’s complacency.

1. Integrate Compliance Gates in MLOps Pipelines

- Embed compliance checkpoints throughout the Machine Learning Operations (MLOps) lifecycle.

- Implement real-time compliance validation to reduce operational friction and improve deployment speed.

2. Implement Continuous Monitoring

- Deploy real-time surveillance systems to detect unauthorized actions, drift events, and model degradation.

- Monitor continuously, adapting to evolving threats before they compromise the system.

3. Develop Live Security Dashboards

- Use dynamic dashboards that consolidate security metrics, compliance status, and threat insights into a single, actionable interface.

- Provide visibility into your GenAI environment—enabling rapid, informed decision-making.

Key Insight: Governance as Competitive Advantage

The most resilient systems are those that anticipate change. Regulatory guidelines aren’t static, and neither is your infrastructure. Embedding compliance into every layer of your GenAI operations is more than a best practice, it’s a competitive edge.

True compliance isn’t defensive. It’s a proactive, strategic asset that accelerates innovation. The healthcare payers who internalize this will be the ones who lead, while others struggle to retrofit security onto architectures built to fail.

Governance as Competitive Infrastructure

The organizations that thrive in the GenAI era will be those that recognize governance as infrastructure, not overhead. The goal isn’t to balance speed with compliance, it’s to build compliance mechanisms that accelerate speed.

Incorporating governance at every layer of the GenAI lifecycle allows enterprise payers to innovate rapidly, maintain regulatory alignment, and scale responsibly.

The healthcare payers that succeed will be those who see governance not as a barrier but as the foundation of sustainable growth.

Know more at https://torsion.ai/contact-us/