Key Takeaways

- Burnout is a workflow failure, not a wellness issue.

- Administrative drag and decision fatigue stem from data system gaps.

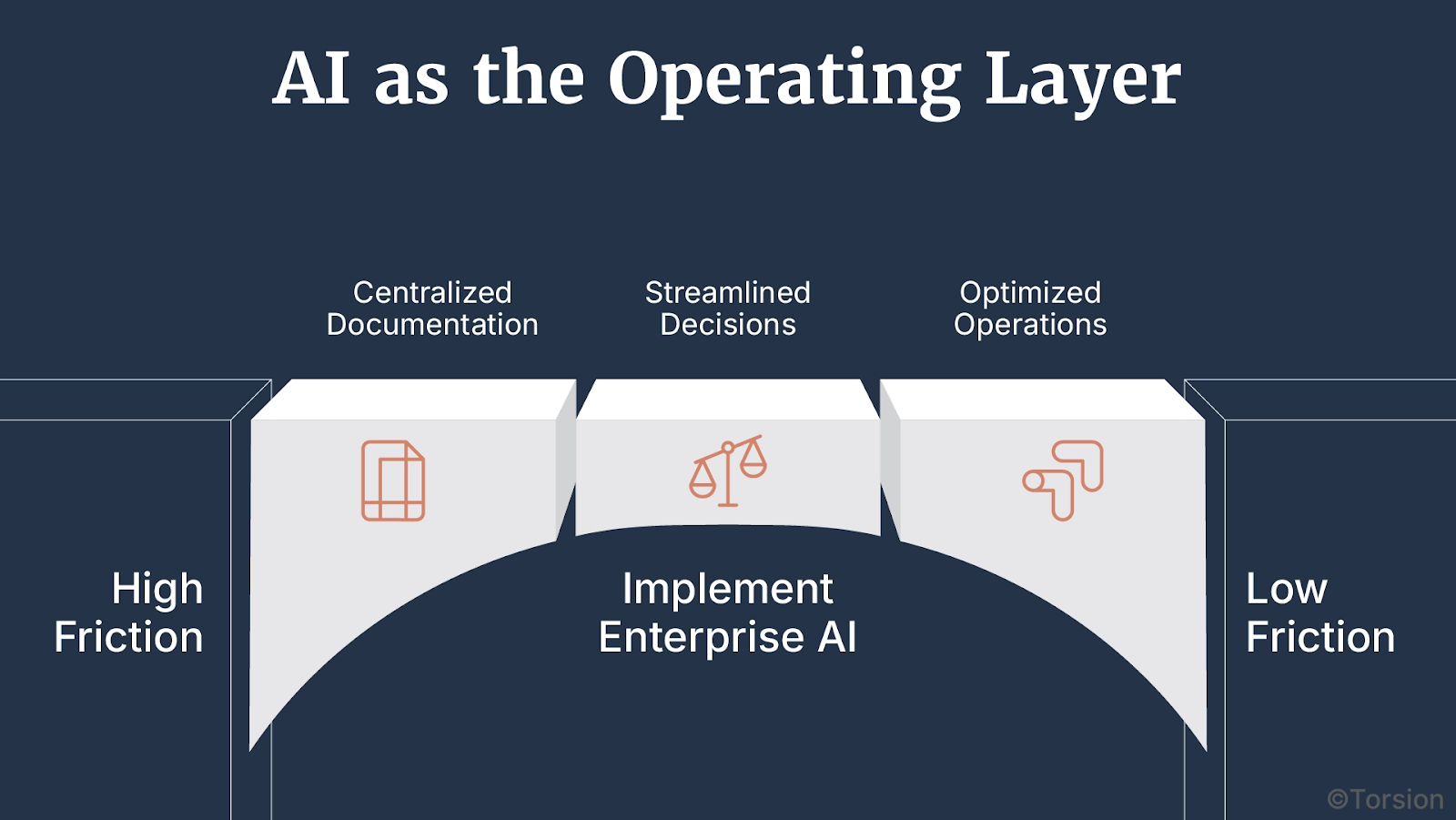

- Enterprise AI should act as an embedded operating layer, not a tool.

- Three high-friction zones: documentation, decision-making, and operations.

- Trustworthy models require governed data, MLOps, and clinician-aligned design.

- Point solutions won’t scale; adaptive ecosystems are the next horizon.

- Leadership must treat workflow reengineering as a strategic mandate.

Burnout Isn’t a People Problem. It’s an Architecture Failure.

Let’s cut the noise: clinician burnout isn’t about mindfulness. It’s not about resilience. It’s not even about staffing shortages.

It’s about system design.

Healthcare’s operational core is broken. We’ve asked clinicians to do more with less, navigate siloed data, click through labyrinthine EHRs, and make life-or-death decisions while swimming in noise. Burnout isn’t the result. It’s THE red flag.

And the cost? Massive. Health systems are leaking over $260M a year in turnover alone. Add malpractice exposure and plummeting HCAHPS scores, and burnout becomes more than a wellness issue: it’s an enterprise risk with balance-sheet consequences.

Behind every exhausted provider is a workflow failure disguised as productivity. When decision-making becomes firefighting, and documentation happens after hours, you don’t have a staffing crisis.

You have an infrastructure flaw that’s scaling inefficiency.

This isn’t a call for better scheduling. It’s a call to rethink how clinical work actually works: how decisions are made, how data moves, and how environments respond under pressure.

At Torsion, we don’t patch problems. We treat clinician burnout as a signal, as evidence that workflows are overloaded, data systems are misaligned, and operational logic isn’t built for the realities of modern care.

So where exactly is the drag? Across the front lines, three friction zones show up again and again:

- Documentation Drain

- Decision Density

- Operational Entropy

These are high-risk, high-cost choke points that undermine care, safety, and retention. And they’re fixable, if we treat them like system-level design problems, not UX annoyances.

Enterprise AI Isn’t a Point Solution. It’s the Operating Layer.

Tech pilots are everywhere. Strategy? Not so much.

You can walk into most hospitals today and find Enterprise AI in the wild: a voice bot in the ED, a predictive model in the ICU, a chatbot buried in a patient portal. But these are tiles. Not a floor.

They don’t reduce friction because they don’t talk to each other. They treat tasks, not systems. And when clinicians are still toggling between five screens and deciphering cryptic alerts, the net result is digital whiplash.

Enterprise AI, when properly embedded, becomes one with the workflow.

That means orchestrating decisions, streamlining documentation, and anticipating bottlenecks in real time instead of retrofitting a new tool every fiscal cycle.

Torsion’s approach is grounded in adaptive intelligence architecture: not one-size-fits-all, but modular systems that live inside your EHR, clinical ops, and care pathways. Systems that reduce drag, distribute cognitive load, and actually shift the tempo of care.

So, where’s the friction worst? Let’s break down the three hot zones slowing every health system down.

Friction Zone 1: Documentation Drain

LLMs can pass a board exam, but can they survive a double shift in the ED?

Documentation is still the number one burnout accelerator. In some systems, clinicians spend an extra 4 hours a day completing required clinical duties, including documentation. That’s operational debt.

Tech in Action

- Ambient scribes are now reducing documentation time by 30–40% in frontline care.

- Prior authorization bots cut turnaround by 30% for payer workflows.

- Code flagging tools improve billing accuracy by 20%, boosting downstream revenue.

Leadership Insight

Don’t treat notes like text. Treat them like data. We embed LLMs inside the clinical stack, validate outputs against structured sources, and flag risk in real time. The outcome? Semantic fidelity at the point of care, and notes that don’t need a second shift to clean up.
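As a rough illustration of what "validate outputs against structured sources" can mean, the sketch below compares medications mentioned in a draft note with a structured medication list and flags anything that doesn’t line up. The function name, the input shapes, and the simple case-insensitive matching are assumptions made for this example, not a description of a production pipeline.

```python
def flag_medication_mismatches(note_meds, structured_meds):
    """Compare medications pulled from a draft note with the structured list.

    Both inputs are iterables of medication names (a hypothetical format).
    Returns names found only in the note and names found only in the record.
    """
    note = {m.strip().lower() for m in note_meds}
    record = {m.strip().lower() for m in structured_meds}
    return {
        "unverified_in_note": sorted(note - record),   # in the note, not in the record
        "missing_from_note": sorted(record - note),    # in the record, not in the note
    }


flags = flag_medication_mismatches(
    ["Metformin", "lisinopril", "warfarin"],
    ["metformin", "Lisinopril", "atorvastatin"],
)
print(flags)
```

A real system would need terminology mapping (brand versus generic names, dose forms) rather than string comparison, but the principle is the same: generated text is checked against data the system already trusts before it reaches the chart.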

If documentation still feels like admin work, your system isn’t working. The real win isn’t faster clicks but reusable data that builds operational memory.

Friction Zone 2: Decision Density

Every shift feels like drinking from a firehose. 70+ alerts. Dozens of unread CDS prompts. And that’s before trying to stay current with clinical literature.

Tech in Action

- Predictive CDS is live in 40% of U.S. hospitals, with big wins in sepsis, fall risk, and discharge readiness.

- Imaging triage models cut turnaround times by 25%, unclogging radiology pipelines.

- Disease progression models are pushing earlier interventions in chronic care.

Leadership Insight

If your clinical decision support system can’t explain its “why,” it’s not support. It’s noise. Torsion helps health systems implement explainable CDS pipelines, complete with model scaffolding and local context tuning. No black boxes. Just transparent, auditable logic clinicians can trust.
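To make "explain its why" concrete, here is a minimal sketch of an additive risk score that returns the reasons alongside the number. The feature names, weights, and intercept are invented for illustration and are not clinically validated; the point is only that each input’s contribution to the score can be surfaced next to the alert.

```python
import math

# Hypothetical coefficients for an illustrative logistic risk score.
WEIGHTS = {"heart_rate_over_100": 0.9, "lactate_over_2": 1.4, "age_over_65": 0.6}
INTERCEPT = -3.0


def explain_risk(features):
    """Return the risk probability and each feature's contribution to the log-odds."""
    contributions = {
        name: WEIGHTS[name] * float(features.get(name, 0)) for name in WEIGHTS
    }
    log_odds = INTERCEPT + sum(contributions.values())
    probability = 1.0 / (1.0 + math.exp(-log_odds))
    # Sort so the strongest drivers appear first in the alert.
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return probability, ranked


prob, reasons = explain_risk(
    {"heart_rate_over_100": 1, "lactate_over_2": 1, "age_over_65": 0}
)
print(round(prob, 3), reasons)
```

More complex models need dedicated attribution methods, but the contract with the clinician stays the same: a score never arrives without the factors that drove it.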

Trust is the payload. If a doctor second-guesses the Enterprise AI, you’ve doubled the cognitive load.

Friction Zone 3: Operational Entropy

OR blocks in Excel. Bed assignments on whiteboards. Verbal huddles at shift change. The system still runs like it’s 1999, just with a billion-dollar budget.

Manual workflows aren’t quaint. They’re dangerous.

Tech in Action

- Patient flow simulators now optimize real-time transfers in 15+ major hospitals.

- AI-driven rostering reduced scheduling conflicts by 35%, cutting staffing strain.

- Load-balancing engines match beds to real-time acuity and census data, improving throughput.

Leadership Insight

Dashboards are diagnostics. What systems need are orchestration layers: models that act, adapt, and balance operations across shifts. We implement multi-modal Enterprise AI to coordinate staffing, flow, and escalation dynamically.

Static ops dashboards are rearview mirrors. The future is systems that respond in real time before delays become failures.

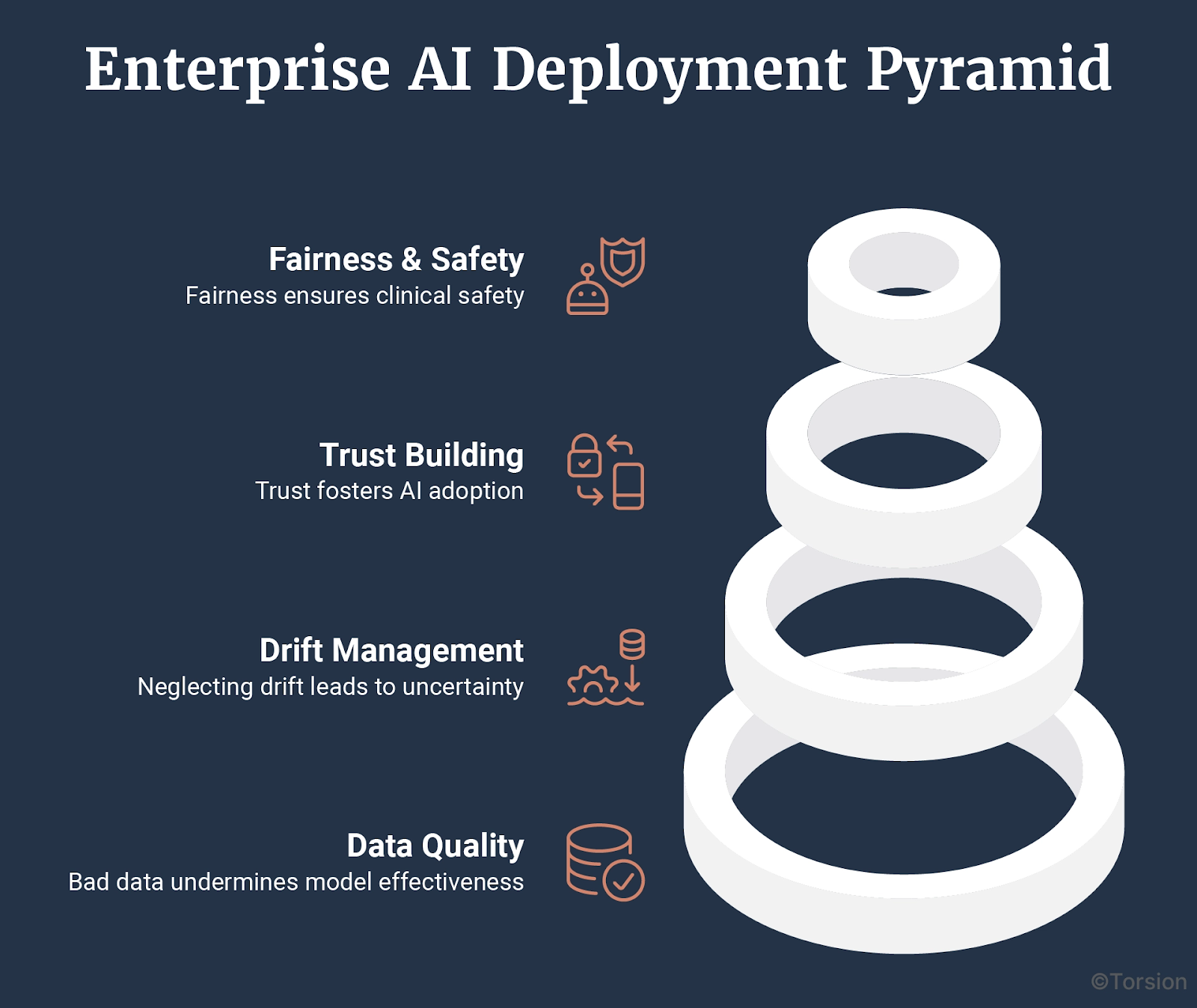

Infrastructure, Drift, and Trust: The Missing Pieces in Enterprise AI Deployment

Great models rarely fail in R&D. They fail in the field, for reasons no one put in the demo.

The issue isn’t prediction accuracy. It’s everything else: fragmented data, fragile infrastructure, workflows that weren’t built to absorb real-time intelligence.

These are the friction points that turn promising pilots into shelfware.

Enterprise AI doesn’t scale because of model quality. It scales when the system around it is ready.

Here’s what that readiness actually looks like.

A. Bad Data Breaks Good Models

Most clinical data isn’t model-ready. It’s incomplete, inconsistently structured, and siloed across systems that don’t talk to each other. One recent analysis found that the accuracy of healthcare data ranged from 67% to 100%, a spread that undercuts everything from patient safety to predictive reliability.

Leadership Insight

There’s no work-around for bad inputs. Data harmonization, across EHRs, labs, imaging, and claims, has to come first. Otherwise, the model is just guessing with confidence.
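A small sketch of what harmonization involves at its simplest: renaming source-specific fields to one shared schema, then measuring how complete the result is. The source names, field names, and mapping table below are hypothetical, chosen only to show the shape of the step.

```python
# Hypothetical mapping from source-specific field names to one shared schema.
FIELD_MAP = {
    "ehr": {"pt_id": "patient_id", "dob": "birth_date"},
    "lab": {"patientId": "patient_id", "result_val": "lab_value"},
}


def harmonize(source, record):
    """Rename a source record's fields to the shared schema, dropping unmapped ones."""
    mapping = FIELD_MAP[source]
    return {mapping[k]: v for k, v in record.items() if k in mapping}


def completeness(records, required):
    """Fraction of records in which each required field is present and non-empty."""
    return {
        field: sum(1 for r in records if r.get(field) not in (None, "")) / len(records)
        for field in required
    }


rows = [
    harmonize("ehr", {"pt_id": "p1", "dob": "1980-01-01"}),
    harmonize("lab", {"patientId": "p1", "result_val": 4.2, "unit": "mmol/L"}),
]
report = completeness(rows, ["patient_id", "birth_date"])
print(report)
```

Running a completeness report like this before training or deployment is one way to catch upstream gaps while they are still cheap to fix.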

If your team is cleaning up data after deployment, the real failure happened upstream.

B. If You’re Not Managing Drift, You’re Flying Blind

Clinical environments evolve constantly. Guidelines change. Coding practices shift. Populations move. And unless predictive systems evolve with them, accuracy decays, quietly and dangerously.

Leadership Insight

Model management means continuous input monitoring, versioning, rollback plans, and retraining cycles that keep pace with clinical reality. Drift is normal. Ignoring it isn’t.
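One common way to monitor inputs continuously is the population stability index (PSI), which compares the distribution of a feature at training time with its recent distribution. The sketch below is a plain-Python version under simple assumptions: equal-width bins taken from the baseline range, and a small floor (`eps`) to avoid dividing by zero. The function name and defaults are choices made for this example.

```python
import math


def population_stability_index(expected, actual, bins=10, eps=1e-6):
    """PSI between a baseline sample and a recent sample of one numeric input."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the baseline is constant

    def proportions(values):
        counts = [0] * bins
        for v in values:
            # Clamp values outside the baseline range into the edge bins.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


baseline = list(range(100))
psi_same = population_stability_index(baseline, baseline)
psi_shifted = population_stability_index(baseline, [v + 30 for v in baseline])
print(psi_same, psi_shifted)
```

A frequently cited rule of thumb treats a PSI above roughly 0.25 as a significant shift worth investigating, though the right threshold depends on the feature and the clinical stakes.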

If no one knows what version was live last week, your models are exposed.

C. Trust Drives Adoption

Accurate models can still fail if no one uses them. And that happens a lot because adoption isn’t about trust in the math. It’s about trust in the workflow.

If the logic is opaque, if the outputs feel tacked on, or if the prediction adds more work? Clinicians will skip it. And they should.

Leadership Insight

Adoption climbs when outputs are clear, explainable, and embedded inside the tools teams already use, not floating in a separate dashboard or buried behind clicks. That’s why burnout isn’t always about workload. Sometimes it’s about decision fatigue created by poorly integrated systems.

D. Fairness Is Part of Clinical Safety

Bias is a research concern and a design flaw rolled into one.

In one widely used commercial model, Black patients were flagged 40% less often for extra care because the model used historical costs to estimate need. And historically, those costs were lower.

This wasn’t a bug, but a blind spot.

Leadership Insight

Fairness needs to be treated like any other performance metric. That means testing across race, gender, and language, then monitoring how those patterns evolve post-deployment.
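Treating fairness as a metric can start with something as simple as comparing how often each group gets flagged. The sketch below assumes each record is a `(group_label, was_flagged)` pair, which is a hypothetical format; the helper names are also invented for this example.

```python
from collections import defaultdict


def flag_rates_by_group(records):
    """Share of patients flagged for extra care within each group."""
    totals, flagged = defaultdict(int), defaultdict(int)
    for group, was_flagged in records:
        totals[group] += 1
        flagged[group] += int(was_flagged)
    return {g: flagged[g] / totals[g] for g in totals}


def disparity_ratio(rates):
    """Lowest group rate divided by the highest; 1.0 means equal flag rates."""
    return min(rates.values()) / max(rates.values())


records = (
    [("A", True)] * 5 + [("A", False)] * 5
    + [("B", True)] * 3 + [("B", False)] * 7
)
rates = flag_rates_by_group(records)
print(rates, disparity_ratio(rates))
```

Equal flag rates are only one lens. Where a reliable measure of clinical need exists, comparing rates among patients with similar need is the more telling check, and whichever metric is chosen should be recomputed on live data after deployment.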

If your models scale inequity, you’re essentially automating risk.

Enterprise Feedback Architecture: Designing for Intelligence, Not Just Automation

Most healthcare systems using Enterprise AI today are running open-loop: A model makes a prediction. A human reacts. The outcome disappears.

There’s no reinforcement, no feedback, no loop.

That’s why progress stalls after the pilot. Not because the model didn’t work, but because the system didn’t learn.

What’s next isn’t more automation. It’s systems that listen, adapt, and reconfigure in real time.

That’s Enterprise Feedback Architecture, and it’s what separates experimental deployments from real operational intelligence.

From Prediction Outputs to Learning Surfaces

We’ve seen where models plug in: ambient scribing, risk scores, imaging triage. But where do they listen?

A learning system doesn’t just make predictions. It uses the surface area of daily operations, like staff behavior, outcome variation, and throughput friction, to refine how those predictions are made and used.

- Discharge readiness models recalibrated by post-acute outcomes

- Triage algorithms that adapt to local case mix and guideline shifts

- Rostering tools that reshape coverage based on real-time workload and availability

- Prior auth systems that learn from payer behavior, not rulesets alone

In each case, the intelligence isn’t in the algorithm. It’s in the loop closure.
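Loop closure can be sketched in a few lines: every prediction is stored until its outcome arrives, and the paired history is used to check whether the model still matches reality. The class below is a hypothetical minimal design; the names, the calibration measure, and the tolerance are assumptions for illustration.

```python
class FeedbackLoop:
    """Pairs each prediction with its eventual outcome and tracks calibration."""

    def __init__(self, tolerance=0.1):
        self.tolerance = tolerance
        self.pending = {}  # prediction_id -> predicted probability
        self.closed = []   # (predicted probability, observed outcome) pairs

    def record_prediction(self, prediction_id, probability):
        self.pending[prediction_id] = probability

    def record_outcome(self, prediction_id, outcome):
        # Closing the loop: attach the outcome to the prediction that preceded it.
        probability = self.pending.pop(prediction_id)
        self.closed.append((probability, int(outcome)))

    def calibration_gap(self):
        """Mean predicted probability minus observed event rate."""
        if not self.closed:
            return 0.0
        mean_pred = sum(p for p, _ in self.closed) / len(self.closed)
        event_rate = sum(o for _, o in self.closed) / len(self.closed)
        return mean_pred - event_rate

    def needs_recalibration(self):
        return abs(self.calibration_gap()) > self.tolerance


loop = FeedbackLoop(tolerance=0.1)
for i, outcome in enumerate([1, 0, 0, 0]):
    loop.record_prediction(i, 0.8)
    loop.record_outcome(i, outcome)
print(loop.calibration_gap(), loop.needs_recalibration())
```

In an open-loop deployment, the `record_outcome` step never happens, so the gap between what the model predicts and what actually occurs stays invisible.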

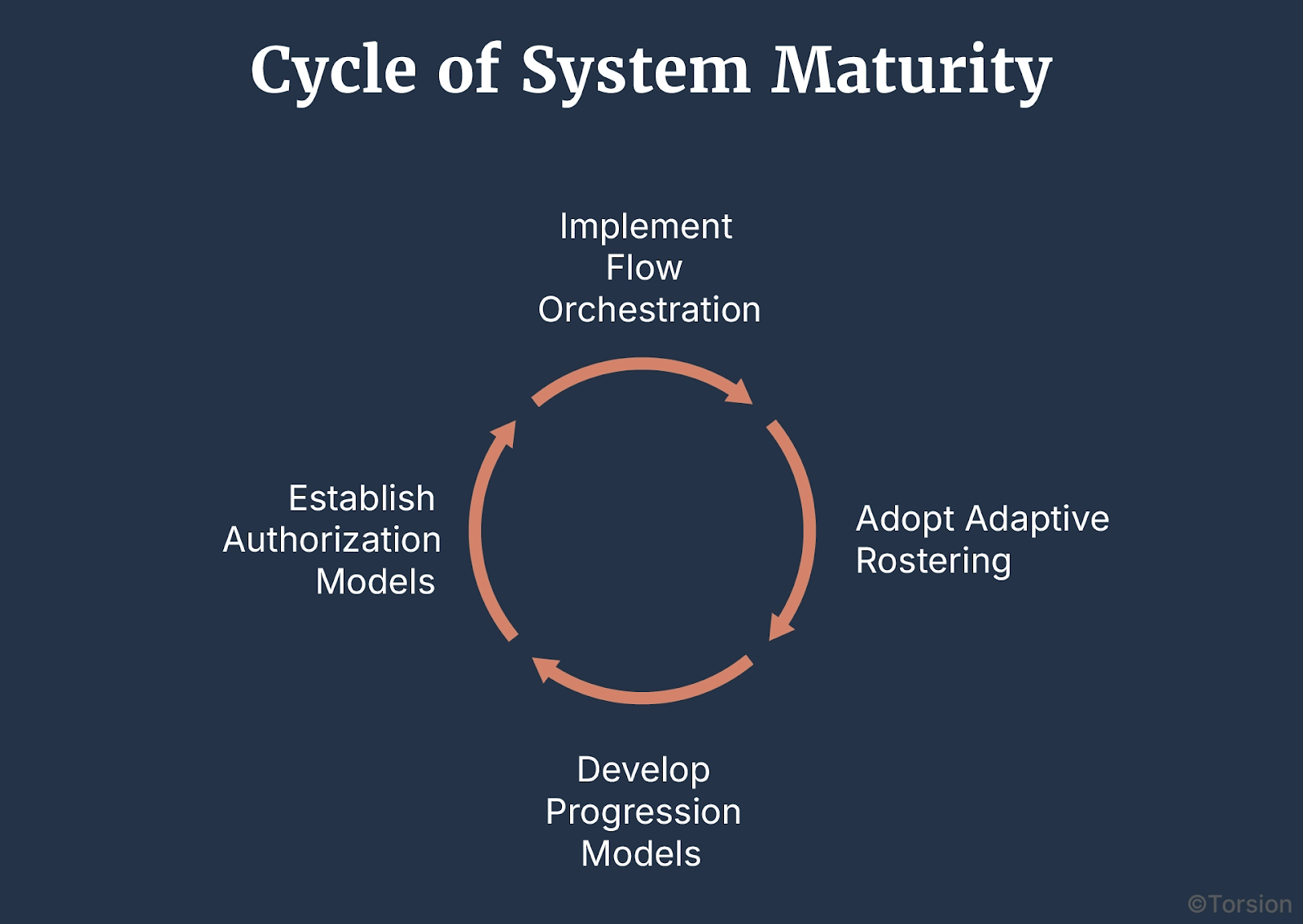

Signals of Maturity: Closed-Loop Systems in the Wild

You can already see hints of this shift:

- Flow orchestration engines, live in 15+ hospitals, optimize admissions and transfers dynamically, using adaptive logic rather than fixed rules

- Adaptive rostering platforms are cutting schedule conflicts by 35%, continuously tuning staff deployment

- Progression models for sepsis and heart failure are improving interventions because they’re retrained regularly on local data

- Authorization models are reducing turnaround not by speed alone, but by adjusting to feedback from payers

These aren’t “models at work.” They’re models in dialogue with their environment.

The Maturity Curve: Intelligence Density × Loop Fidelity

You don’t scale Enterprise AI by stacking use cases. You scale it by tightening the feedback loops between them.

Three dimensions define maturity:

- Loop density – how many points in the system feed intelligence back into model behavior

- Loop fidelity – how accurately and safely those signals are captured, interpreted, and acted on

- Loop trust – how clinicians and operators engage with the system’s learning process

Most orgs today operate at low density, low fidelity, and low trust. That’s an architecture gap.

Designing for Responsiveness

Closed-loop Enterprise AI in healthcare isn’t a moonshot, but a design choice.

It requires:

- Data flows that persist after the point of care

- Interfaces that capture feedback without burden

- Governance that allows model evolution without downtime

- Operational tolerance for learning systems, not just fixed workflows

In other words: not just a model in the loop. A system built around it.

Enterprise AI may succeed at first because it predicts well. It keeps succeeding because it learns fast, adapts safely, and improves continuously.

The Real Work Starts After the Pilot

Enterprise AI doesn’t fail because models are weak. It fails when organizations treat it like a feature drop, not a structural shift.

Leadership often stalls at the proof-of-concept stage: one model, one function, one workflow. But that’s not transformation. That’s tooling.

The real shift is operational.

It’s about redesigning how decisions are made, how workflows are shaped, and how systems adapt under pressure. And that takes more than model deployment.

It takes institutional muscle.

Strategy, Not Spend

Yes, implementation is hard. Yes, change meets resistance. Yes, cost, privacy, and trust are real constraints.

But those aren’t reasons to pause. They’re reasons to lead.

Every delayed redesign carries opportunity cost:

- Turnover continues

- Infrastructure calcifies

- Technical debt mounts in the background

Enterprise AI won’t fix broken systems. But it does expose where they’re brittle. And that’s exactly what makes it a leadership tool, not just a technical one.

Designing for the Long Game

The next phase of Enterprise AI isn’t about adoption. It’s about integration.

And not just integration into workflows, but into culture, incentives, and decision logic.

That means:

- Architecting feedback loops that outlive the model cycle

- Funding data infrastructure like clinical infrastructure

- Aligning governance to iteration, not obstruction

- Measuring ROI in throughput, trust, and time, not just model performance

This is the work that won’t show up in a demo. But it’s the only kind that scales.

More and more healthcare systems will adopt Enterprise AI. The differentiator will be who redesigns themselves around it.

That’s the leadership question now: Are you buying capability or building adaptability?