Key Takeaways

- Governance is the backbone of effective AI fraud detection.

- Graph analytics uncovers fraud networks that rules-based systems miss.

- NLP and LLMs catch forged documents with context-aware precision.

- Measure what matters: false positives, recovery rates, and investigation speed.

- Integrated AI workflows cut investigation time and boost accuracy.

- Explainability and auditability aren’t optional—they’re required.

- Healthcare payers must solve for fragmented data, strict compliance, and legacy tech.

Most healthcare payers aren’t short on models. They’re short on control.

Predictive fraud scoring, document analysis with LLMs, even anomaly detection pipelines — they’re all in play. But ask any enterprise team what’s actually working, and the answer gets murky.

False positives are climbing. Investigators are overwhelmed. And worse—fraud networks are getting smarter, learning how to game the very systems designed to catch them.

The root issue? Enterprise AI is being deployed without governance as infrastructure.

And when governance is an afterthought, your models don’t just underperform—they introduce new risks. From undetected collusion to opaque outputs that don’t stand up to audits, unmanaged systems become silent liabilities.

This isn’t about building better algorithms. It’s about operationalizing AI systems that are explainable, adaptive, and integrated across the fraud lifecycle.

That’s where payer orgs are starting to separate themselves.

Recent Research on Enterprise AI Governance

In a recent enterprise AI audit, only 12% of organizations reported having a formal risk management framework. Over 90% lacked governance protocols for third-party models.

This data comes from financial services, but the implications for healthcare are arguably more severe. Payer organizations are now embedding LLMs, anomaly detection models, and predictive scoring systems into claims and audit pipelines, often with limited oversight and no lifecycle governance.

The result? High-variance model outputs, regulatory exposure, and fractured accountability across fraud operations.

Privacy concerns (74%) and security risks (71%) continue to top the list of executive worries—not because of theoretical threats, but because current systems lack the guardrails to evolve responsibly.

This is the paradox: the more sophisticated the system, the greater the risk if left unmanaged.

Without embedded governance—explainability layers, audit trails, model usage policies—Enterprise AI doesn’t reduce risk. It repackages it in code.

What is the Core Problem?

The core issue isn’t the model. It’s the lack of a governed system to contain it.

Without lifecycle governance, Enterprise AI behaves like a prediction engine without context—optimizing for the wrong outcomes, amplifying edge cases, and violating compliance boundaries without knowing it.

False positives rise. Pattern drift goes unnoticed. Model decisions can’t be explained or audited.

But governance isn’t overhead—it’s the architecture that makes Enterprise AI trustworthy, scalable, and defensible.

When compliance checks, explainability layers, and feedback loops are baked into the system from day one, models don’t just detect; they learn, adapt, and hold up to scrutiny.

In the sections ahead, we’ll break down how to embed these principles across predictive models, graph analytics, and LLM-driven workflows—turning fraud detection into a proactive, continuous intelligence operation.

Network Analysis Breakthrough: Why Coordinated Fraud Demands a New Detection Playbook

1. The Structural Shift: From One-Offs to Orchestrated Schemes

Healthcare fraud isn’t about isolated claims anymore—it’s engineered, networked, and designed to exploit systemic blind spots.

Modern fraud rings coordinate across providers, patients, and billing systems—splitting transactions, laundering identities, and operating just below detection thresholds.

Traditional detection systems—built around static rules and individual anomalies—aren’t just ineffective; they’re obsolete. They’re optimized for the fraud of five years ago.

2. Graph Analytics + Anomaly Detection: Your New Core Stack

Enterprise AI systems built for today’s threat landscape rely on graph-based analysis and adaptive anomaly models.

- Graph analytics maps relationships across vast data sets—connecting providers, claims, referral chains, and billing events in real time. It surfaces collusion that’s invisible to linear, rules-based systems.

- Anomaly models detect behavioral deviations as they happen—learning from new fraud patterns without waiting for a rules update.

Together, they shift fraud detection from static alerts to dynamic network intelligence.
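
A minimal sketch of the graph side, assuming claims arrive as hypothetical `(provider_id, patient_id)` pairs: two providers are linked when they bill for enough of the same patients, and connected clusters of linked providers become candidates for a collusion review. The `min_shared=2` cut-off is an illustrative assumption, and the pairwise comparison is quadratic, so a production system would more likely run this in a graph database or a dedicated graph library.

```python
from collections import defaultdict
from itertools import combinations


def shared_patient_graph(claims, min_shared=2):
    """Link two providers when they billed for at least `min_shared` of the same patients.

    `claims` is an iterable of (provider_id, patient_id) pairs. Returns an
    adjacency dict that contains only providers with at least one link.
    """
    patients_by_provider = defaultdict(set)
    for provider, patient in claims:
        patients_by_provider[provider].add(patient)

    graph = defaultdict(set)
    # Pairwise comparison is O(n^2) in providers: fine for a sketch, not for scale.
    for a, b in combinations(sorted(patients_by_provider), 2):
        if len(patients_by_provider[a] & patients_by_provider[b]) >= min_shared:
            graph[a].add(b)
            graph[b].add(a)
    return dict(graph)


def connected_components(graph):
    """Group linked providers into clusters using an iterative depth-first search."""
    seen, clusters = set(), []
    for start in graph:
        if start in seen:
            continue
        stack, component = [start], set()
        while stack:
            node = stack.pop()
            if node in seen:
                continue
            seen.add(node)
            component.add(node)
            stack.extend(graph[node] - seen)
        clusters.append(component)
    return clusters


claims = [("P1", "A"), ("P1", "B"), ("P2", "A"), ("P2", "B"),
          ("P2", "C"), ("P3", "B"), ("P3", "C"), ("P4", "X")]
print(connected_components(shared_patient_graph(claims)))  # set order may vary
```

Here P1, P2, and P3 form one cluster through overlapping patient panels, while P4 stays isolated. A shared-patient cluster is a lead for investigators, not evidence of fraud on its own.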

3. Why Network Intelligence Is No Longer Optional

Predictive models alone can’t catch structural fraud—they weren’t built for it. Without visibility into relational risk, you’re leaving gaps wide open.

Payers using Enterprise AI to operationalize graph analytics are seeing:

- Shorter investigation cycles (by up to 40%)

- Higher fraud recovery rates

- Better detection of emergent fraud structures (not just known ones)

Network-aware Enterprise AI turns fraud detection into a continuous intelligence function, not a reactive audit step.

It’s not just about catching fraud. It’s about outpacing it.

Document Forgery & Authentication: Bridging the NLP Gap

The New Frontier of Fraud: Forged Documents in the Age of AI

Fraud doesn’t just show up in claims. It hides in the documents that support them—medical records, invoices, billing statements, and referrals.

And with the rise of AI-driven forgery tools, tampered documentation has become faster, cheaper, and harder to detect.

Rule-based systems can’t keep up. They’re built to spot static formatting issues, not contextual inconsistencies. But fraudsters are now using generative tools to rewrite documents that look authentic at every level—from language to layout.

Why NLP and LLMs Are Now Core Infrastructure

Detecting forged documentation in unstructured data requires contextual understanding, not just keyword scans.

That’s where Natural Language Processing (NLP) and Large Language Models (LLMs) shift the game:

- NLP engines parse syntax, semantics, and document intent.

- LLMs process high-volume, high-variance text to surface anomalies in tone, structure, and sequence.

Together, they detect what rules can’t:

- Semantic mismatches between diagnoses and procedures.

- Style inconsistencies across otherwise “compliant” forms.

- Content divergence from past submissions by the same provider.

And because they learn from investigation feedback loops, these models continuously refine what “normal” looks like—building contextual intelligence into your fraud lifecycle.
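
One way to sketch the content-divergence check: compare a new document against the same provider’s prior submissions and score how far it sits from the closest one. The bag-of-words cosine similarity below is a deliberately simple stand-in for the LLM embeddings described above; the function names and any alert threshold paired with the score are hypothetical.

```python
import math
import re
from collections import Counter


def term_vector(text):
    """Bag-of-words term counts: a simple stand-in for LLM text embeddings."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a, b):
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def divergence_score(new_doc, provider_history):
    """1 minus the similarity to the closest prior submission (1.0 = nothing alike)."""
    if not provider_history:
        return 1.0
    new_vec = term_vector(new_doc)
    closest = max(cosine_similarity(new_vec, term_vector(old)) for old in provider_history)
    return 1.0 - closest


history = [
    "Follow-up visit for type 2 diabetes. HbA1c reviewed, metformin continued.",
    "Follow-up visit for type 2 diabetes. Foot exam normal, metformin continued.",
]
print(divergence_score("Follow-up visit for type 2 diabetes. Metformin dose increased.", history))
```

A high score does not prove forgery; it tells a reviewer that this document reads unlike anything the provider has submitted before.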

Operational Gains: Where This Changes the Game

Enterprise payers that deploy NLP + LLM pipelines into their fraud workflows are seeing measurable outcomes:

- 20–30% increase in forgery detection accuracy

- 40% reduction in false positives on document audits

- 50%+ faster triage for suspected claims

These aren’t pilot results—they’re operational improvements across systems at scale. And when these models are paired with graph analytics, they surface both content-based and relationship-based fraud—closing the loop between documents and behavior.

Design Considerations: Where NLP Fails Without Guardrails

No model is neutral. NLP and LLMs introduce their own risks if left unsupervised:

- Bias in training data can misclassify non-standard language as suspicious.

- Privacy risks emerge if PHI is mishandled during model training.

- Integration friction surfaces if LLMs operate outside the governed Enterprise AI lifecycle.

That’s why explainability and auditability matter—especially for NLP systems running on sensitive, high-variance data. Enterprise-grade fraud systems don’t just detect forgery—they prove how and why a document was flagged.

When LLMs are deployed with feedback, transparency, and controls in place, they stop being experimental tools—and start acting like reliable analysts embedded in every claim review.

From Pattern Recognition to ROI: How Enterprise AI Detects Healthcare Fraud

Implementing Enterprise AI systems for fraud detection is a strategic investment, one that requires measurable returns to justify its cost and prove its effectiveness.

Yet, traditional performance metrics often fall short when applied to fraud detection. Metrics like overall accuracy or transaction processing speed provide a broad overview but fail to capture the nuances of detecting sophisticated fraud schemes.

To bridge this gap, organizations must establish clear, fraud-specific metrics that link detection performance directly to business outcomes.

Why Traditional Metrics Fall Short

In most enterprise systems, performance is measured by throughput, latency, or system uptime. But fraud detection doesn’t reward speed or volume—it rewards accuracy, context, and decision quality.

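An Enterprise AI system can hit 98% accuracy and still fail. Why? First, fraud is rare: if only 2% of claims are fraudulent, a model that flags nothing scores 98% accuracy while catching no fraud at all. Second, most fraud schemes aren’t static; they evolve. And most traditional metrics don’t tell you how well your system detects adaptive behavior or relational risk.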

Effective fraud detection requires a shift from operational KPIs to outcome-based metrics tied directly to investigative performance and financial impact.

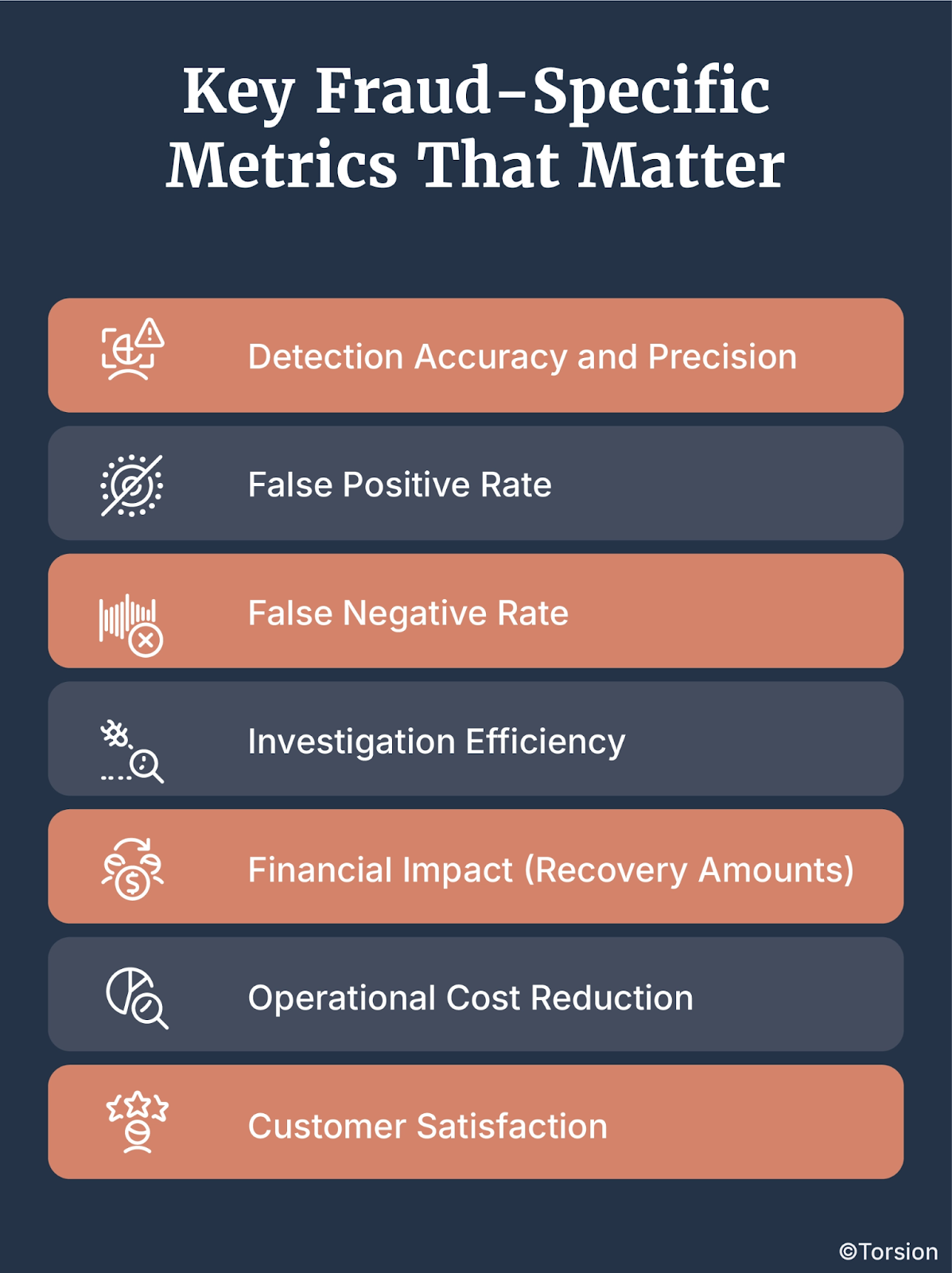

Key Fraud-Specific Metrics That Matter

When evaluating Enterprise AI systems for fraud detection, companies need to move beyond generic indicators and focus on metrics designed to assess true efficacy. These include:

- Detection Accuracy and Precision:

- Accuracy measures the share of all claims the system classifies correctly; precision measures the share of flagged claims that are actually fraudulent, so it falls as false positives rise.

- High precision indicates that the model effectively distinguishes between legitimate and fraudulent transactions, improving efficiency and reducing unnecessary investigations.

- False Positive Rate:

- Represents the percentage of legitimate transactions incorrectly flagged as fraudulent.

- Reducing false positives is essential for minimizing operational costs, enhancing customer experience, and maintaining trust.

- For example, a 10% reduction in false positives can translate to significant cost savings, particularly in large-scale operations.

- False Negative Rate:

- Indicates the percentage of fraudulent transactions that go undetected.

- Minimizing false negatives is critical for preventing revenue leakage and ensuring robust fraud prevention.

- Undetected fraud can be far more damaging than false positives, as it directly translates to financial losses and reputational harm.

- Investigation Efficiency:

- Measures the time and resources required to investigate flagged transactions.

- Improved efficiency leads to cost savings and allows investigators to focus on high-priority cases.

- Integrating graph analytics and LLMs can reduce investigation times by up to 40%, according to recent industry findings.

- Financial Impact (Recovery Amounts):

- Calculates the monetary value of fraud prevented or losses recovered through successful detection.

- This metric directly ties the performance of the Enterprise AI system to revenue protection and operational savings.

- For instance, organizations employing graph analytics and NLP systems have reported up to a 30% increase in recovery rates.

- Operational Cost Reduction:

- Evaluates the decrease in operational expenses, such as reduced manual reviews and lower administrative costs.

- Automated systems that effectively reduce false positives can significantly decrease labor costs.

- A streamlined fraud detection process often results in a 20–30% reduction in operational expenses.

- Customer Satisfaction:

- Monitors user feedback, complaint rates, and overall satisfaction to ensure fraud detection efforts do not adversely affect the customer experience.

- Maintaining high customer satisfaction is particularly important in industries like healthcare, where service quality is closely tied to patient trust.

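
The rate-based metrics above all come from the same four confusion-matrix counts. A minimal Python sketch (the `ConfusionCounts` type and `fraud_metrics` function are illustrative names, not any product’s API), applied to a hypothetical batch of claims:

```python
from dataclasses import dataclass


@dataclass
class ConfusionCounts:
    tp: int  # fraudulent claims correctly flagged
    fp: int  # legitimate claims incorrectly flagged
    tn: int  # legitimate claims correctly passed
    fn: int  # fraudulent claims missed


def fraud_metrics(c: ConfusionCounts) -> dict:
    """Compute the rate-based fraud metrics from confusion-matrix counts."""
    def ratio(num, den):
        return num / den if den else 0.0

    return {
        "accuracy": ratio(c.tp + c.tn, c.tp + c.fp + c.tn + c.fn),
        "precision": ratio(c.tp, c.tp + c.fp),             # share of alerts that are fraud
        "recall": ratio(c.tp, c.tp + c.fn),                # share of fraud that is caught
        "false_positive_rate": ratio(c.fp, c.fp + c.tn),   # legitimate claims flagged
        "false_negative_rate": ratio(c.fn, c.fn + c.tp),   # fraud that goes undetected
    }


# Hypothetical batch: 10,000 claims, 200 fraudulent; the model flags 300, 150 correctly.
metrics = fraud_metrics(ConfusionCounts(tp=150, fp=150, tn=9650, fn=50))
# accuracy 0.98, precision 0.5, recall 0.75, false_negative_rate 0.25
```

The 98% accuracy looks strong, yet half of the alerts are false positives and a quarter of the fraud slips through, which is why these rates are tracked separately rather than folded into one accuracy number.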

Aligning Metrics with Business Objectives

Establishing these metrics is not just about improving detection capabilities; it’s about linking those capabilities directly to business objectives and ROI.

Organizations that successfully define and measure fraud-specific metrics can:

- Demonstrate ROI: Clear metrics provide tangible evidence of the system’s value, making it easier to justify investment to stakeholders.

- Inform Strategic Decisions: Data-driven insights guide resource allocation, system upgrades, and process improvements.

- Enhance Compliance: Accurate metrics support regulatory compliance efforts by documenting the effectiveness of fraud prevention measures.

- Improve Customer Trust: Effective fraud detection enhances customer confidence, increasing loyalty and positive brand reputation.

The ultimate goal is to ensure that Enterprise AI systems are not only powerful but also measurable in their impact.

By tying detection performance to concrete business outcomes, organizations can make more informed decisions, justify their investments, and continuously refine their systems for even greater effectiveness.
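
To make “demonstrate ROI” concrete, here is a simple return-on-investment calculation that nets recoveries and prevented losses against review labor and platform cost. The formula is one common definition, and every dollar figure is an illustrative assumption; organizations define benefit and cost in their own ways.

```python
def fraud_program_roi(recovered, prevented, cases_reviewed, cost_per_review, platform_cost):
    """ROI = (benefit - cost) / cost, where review labor scales with flagged cases."""
    benefit = recovered + prevented
    cost = cases_reviewed * cost_per_review + platform_cost
    if cost <= 0:
        raise ValueError("total cost must be positive")
    return (benefit - cost) / cost


# Hypothetical year: $1.2M recovered, $0.8M prevented, 4,000 reviews at $150, $400k platform.
baseline = fraud_program_roi(1_200_000, 800_000, 4_000, 150, 400_000)  # 1.0, i.e. 100% ROI
# Same recoveries, assuming 25% fewer reviews after a false-positive reduction.
improved = fraud_program_roi(1_200_000, 800_000, 3_000, 150, 400_000)  # about 1.35
```

Holding recoveries constant is itself an assumption; the point is that review labor driven by false positives sits directly in the cost term.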

Integrated Workflows for Fraud Teams: From Alert to Resolution

Where Detection Fails: The Workflow Gap

Most fraud detection platforms don’t fail at detection. They fail at handoffs.

AI models raise alerts. Then the case moves—often manually—to an investigator, on a different platform, with missing context and no prioritization logic.

Critical information gets dropped. Resolution gets delayed. Fraud risk compounds.

It’s not a model problem—it’s a workflow problem. And in most payer orgs, that workflow is fragmented by legacy systems, siloed tools, and reactive processes.

Building a Unified Fraud Intelligence Pipeline

Enterprise AI changes the game when it’s embedded across the entire fraud lifecycle—not just the detection layer.

Here’s what that looks like in a governed, integrated system:

- Automated Triage: Enterprise AI models score and rank alerts in real time, escalating high-risk cases and routing lower-priority items for secondary review.

- Context-Aware Investigations: Systems pull graph-based relational data, document-level analysis, claim history, and provider behavior into a single investigator view.

- Adaptive Feedback Loops: Every resolution feeds back into the model, improving precision, reducing repeat alerts, and refining anomaly thresholds.

- Decision Traceability: Every flagged case comes with an explainable output: why it was flagged, which features triggered the alert, and how it maps to prior fraud patterns.
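
A minimal sketch of automated triage with decision traceability, assuming each alert already carries a model score between 0 and 1 and a list of the features that drove it. The two-queue split mirrors the text (escalate versus secondary review); the dictionary keys and the 0.8 threshold are hypothetical.

```python
ESCALATION_THRESHOLD = 0.8  # hypothetical cut-off; tune against investigator capacity


def triage(alerts):
    """Rank alerts by risk and route each one, keeping the reasons for audit.

    Each alert is a dict with hypothetical keys: claim_id, score, reasons.
    """
    queues = {"escalate": [], "secondary_review": []}
    for alert in sorted(alerts, key=lambda a: a["score"], reverse=True):
        queue = "escalate" if alert["score"] >= ESCALATION_THRESHOLD else "secondary_review"
        queues[queue].append({
            "claim_id": alert["claim_id"],
            "score": alert["score"],
            "routed_to": queue,
            # Decision traceability: record which features triggered the alert.
            "reasons": list(alert["reasons"]),
        })
    return queues


queues = triage([
    {"claim_id": "C-101", "score": 0.35, "reasons": ["billing spike"]},
    {"claim_id": "C-102", "score": 0.93, "reasons": ["shared-patient cluster", "document divergence"]},
    {"claim_id": "C-103", "score": 0.81, "reasons": ["upcoding pattern"]},
])
# queues["escalate"] holds C-102 then C-103; queues["secondary_review"] holds C-101
```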

Why Fragmentation Kills Outcomes

When detection, triage, and investigation are disconnected:

- Investigators are overloaded with low-priority alerts

- Model learning stalls due to feedback blind spots

- Resolution cycles stretch from days to weeks

- Regulatory risk increases—because decisions can’t be audited end-to-end

Integration isn’t just about productivity. It’s about trust, accuracy, and continuous system performance.

The Future: Real-Time Fraud Orchestration

In high-performing payer orgs, fraud teams now operate as real-time intelligence units with Enterprise AI delivering:

- Live case prioritization based on evolving risk signals

- Unified views of structured and unstructured data

- Seamless collaboration between models and human investigators

- Continuous improvement without sacrificing explainability

That’s the operational edge: fraud detection as a governed, adaptive, cross-functional workflow—not just a scoring engine with a spreadsheet export.

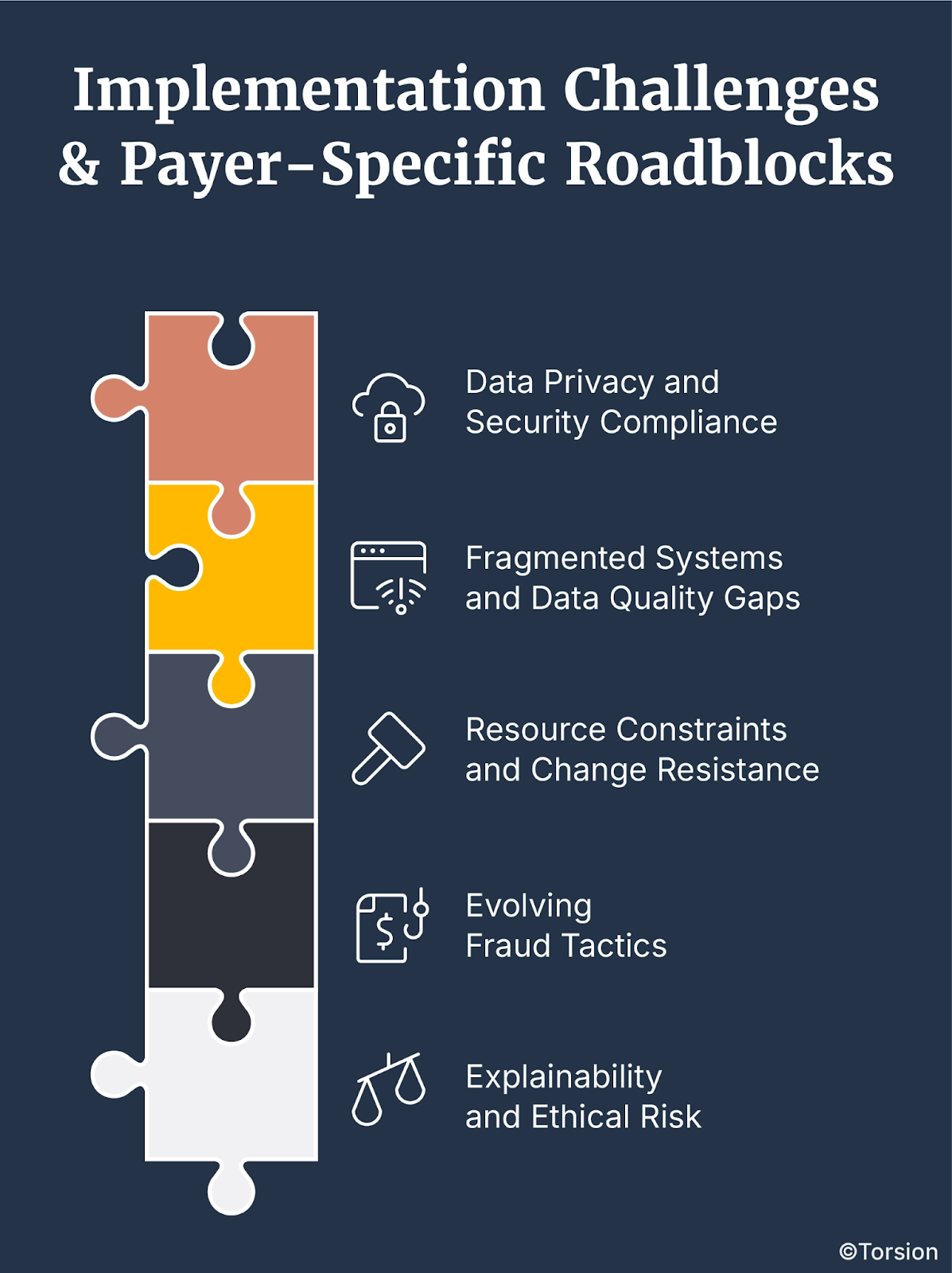

Implementation Challenges & Payer-Specific Roadblocks

Deploying Enterprise AI into a payer environment isn’t a plug-and-play exercise. Yes, there are legitimate headwinds—data fragmentation, regulatory pressure, limited internal bandwidth.

But leading organizations aren’t stuck in analysis paralysis. They’re operationalizing AI under real-world constraints. Here are the most common barriers—and how forward-looking teams are navigating them:

1. Data Privacy and Security Compliance

Healthcare data is among the most sensitive data any organization handles. Any model touching claims or patient documentation must comply with HIPAA, state-level privacy laws, and internal audit policies.

Strategy: Build in encryption, role-based access, and auditability from day one. Governed AI deployments are now table stakes for payer orgs under scrutiny.
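
Role-based access can be sketched as a field-level allow-list per role, with every read (granted or denied) written to an access log. The roles, field names, and in-memory log below are hypothetical, and encryption is out of scope for this snippet; in practice it would sit at the storage and transport layers.

```python
from datetime import datetime, timezone

# Hypothetical least-privilege policy: only investigators see member-level PHI.
ROLE_FIELDS = {
    "investigator": {"claim_id", "provider_id", "member_name", "diagnosis_code", "amount"},
    "analyst": {"claim_id", "provider_id", "amount"},
}

ACCESS_LOG = []  # stand-in for an append-only audit store


def read_claim(claim, user, role):
    """Return only the fields `role` may see, logging granted and denied reads."""
    allowed = ROLE_FIELDS.get(role)
    ACCESS_LOG.append({
        "ts": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "claim_id": claim.get("claim_id"),
        "granted": allowed is not None,
    })
    if allowed is None:
        raise PermissionError(f"role {role!r} may not read claims")
    return {field: value for field, value in claim.items() if field in allowed}
```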

2. Fragmented Systems and Data Quality Gaps

Payers often rely on legacy systems with inconsistent data formats and limited interoperability, making integration complex.

Strategy: Use orchestration layers and schema normalization pipelines to unify data across source systems. Enterprise AI can’t be effective if upstream infrastructure isn’t addressed.
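
A schema normalization step can be sketched as one small adapter per source system, each mapping raw rows onto a single unified record type. Both legacy layouts and all field names below are hypothetical; the pattern is what matters: downstream models only ever see the unified `Claim`.

```python
from dataclasses import dataclass
from datetime import date, datetime
from decimal import Decimal


@dataclass(frozen=True)
class Claim:
    """Unified claim schema shared by every downstream model."""
    claim_id: str
    provider_id: str
    service_date: date
    amount: Decimal


def from_legacy_a(row):
    # Hypothetical source A: US-style dates, amounts stored in cents.
    return Claim(
        claim_id=str(row["CLM_NO"]).strip(),
        provider_id=row["PROV"].strip().upper(),
        service_date=datetime.strptime(row["DOS"], "%m/%d/%Y").date(),
        amount=Decimal(row["AMT_CENTS"]) / 100,
    )


def from_legacy_b(row):
    # Hypothetical source B: ISO dates, dollar strings such as "$1,250.00".
    return Claim(
        claim_id=str(row["claimId"]).strip(),
        provider_id=row["providerId"].strip().upper(),
        service_date=date.fromisoformat(row["serviceDate"]),
        amount=Decimal(row["billed"].replace("$", "").replace(",", "")),
    )


ADAPTERS = {"legacy_a": from_legacy_a, "legacy_b": from_legacy_b}


def normalize(source, rows):
    """Map raw rows from a named source system onto the unified Claim schema."""
    adapter = ADAPTERS[source]
    return [adapter(row) for row in rows]
```

`Decimal` is used instead of `float` so that billed amounts from both sources compare exactly after normalization.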

3. Resource Constraints and Change Resistance

Enterprise AI isn’t just a tooling change—it’s an operating model shift. Budget approvals, cross-team alignment, and technical talent are all bottlenecks.

Strategy: Modular deployments are gaining traction. High-impact use cases (like document forgery or provider collusion) are phased in first, which allows for incremental ROI while internal capability scales.

4. Evolving Fraud Tactics

Fraud evolves faster than static detection systems. The moment you codify a rule, fraudsters move on.

Strategy: Use adaptive anomaly detection and continuous feedback loops to keep pace. Leading payers treat fraud detection as a learning system, not a static filter.
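
Adaptive anomaly detection can be as simple as a per-provider baseline that keeps updating. The sketch below swaps in a standard technique, an exponentially weighted moving mean and variance, and flags values far from the provider’s recent norm; `alpha`, `z_threshold`, and `warmup` are hypothetical defaults.

```python
import math


class EwmaAnomalyDetector:
    """Per-provider exponentially weighted mean/variance with a z-score alert.

    The baseline updates on every observation, so it adapts as billing
    behavior shifts without anyone rewriting a static rule.
    """

    def __init__(self, alpha=0.1, z_threshold=3.0, warmup=5):
        self.alpha = alpha              # weight given to the newest observation
        self.z_threshold = z_threshold  # std-devs from baseline that trigger an alert
        self.warmup = warmup            # observations required before alerting
        self.state = {}                 # provider_id -> (count, mean, variance)

    def observe(self, provider_id, value):
        count, mean, var = self.state.get(provider_id, (0, value, 0.0))
        std = math.sqrt(var)
        is_anomaly = (count >= self.warmup and std > 0
                      and abs(value - mean) / std > self.z_threshold)
        # Incremental EWMA updates for mean and variance.
        diff = value - mean
        incr = self.alpha * diff
        mean += incr
        var = (1 - self.alpha) * (var + diff * incr)
        self.state[provider_id] = (count + 1, mean, var)
        return is_anomaly


detector = EwmaAnomalyDetector()
for daily_claims in [100, 104, 96, 102, 98, 100, 101, 99]:
    detector.observe("P-17", daily_claims)
print(detector.observe("P-17", 500))  # a sudden 5x jump in daily claim volume
```

This version folds every value into the baseline, so a sustained shift eventually becomes the new normal. A feedback loop could instead withhold investigator-confirmed fraud from the update, so confirmed schemes are never learned as normal behavior.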

5. Explainability and Ethical Risk

Black-box AI poses legal, ethical, and reputational risk—especially in healthcare.

Strategy: Embed explainability tooling (SHAP, LIME, audit logs) into every model deployment. Regulators now expect transparency by design, not as an afterthought.

Payer-Specific Complexities: The Hidden Friction

Beyond the universal tech challenges, payers face industry-specific complexities:

- Shifting regulations that require flexible compliance frameworks

- Interfacing with provider networks that vary in maturity and digital readiness

- Proving ROI to finance and compliance simultaneously—a balancing act of impact and trust

These aren’t unsolvable. But they require a strategy—not just a system.

And that’s where Torsion delivers value: not just deploying models, but ensuring governed, lifecycle-aware systems that evolve with policy, data, and fraud patterns in real time.

The Path Forward: Building Explainable, Auditable Systems

As Enterprise AI systems become foundational to fraud detection in healthcare, the demand for transparency and accountability has only grown stronger. From regulators to patients, stakeholders now require clear insights into how AI-driven decisions are made.

Building systems that are not only effective but also explainable and auditable is no longer optional; it’s essential for compliance, trust, and long-term success.

Why Explainability is Now a Core Capability

In fraud detection, opaque models don’t just limit trust—they invite regulatory scrutiny, miss learning opportunities, and break the feedback loop.

Whether you’re defending a flagged claim to a provider, justifying a decision to internal audit, or demonstrating HIPAA compliance, black-box AI doesn’t hold up.

That’s why leading payers are designing explainability into the system—upfront.

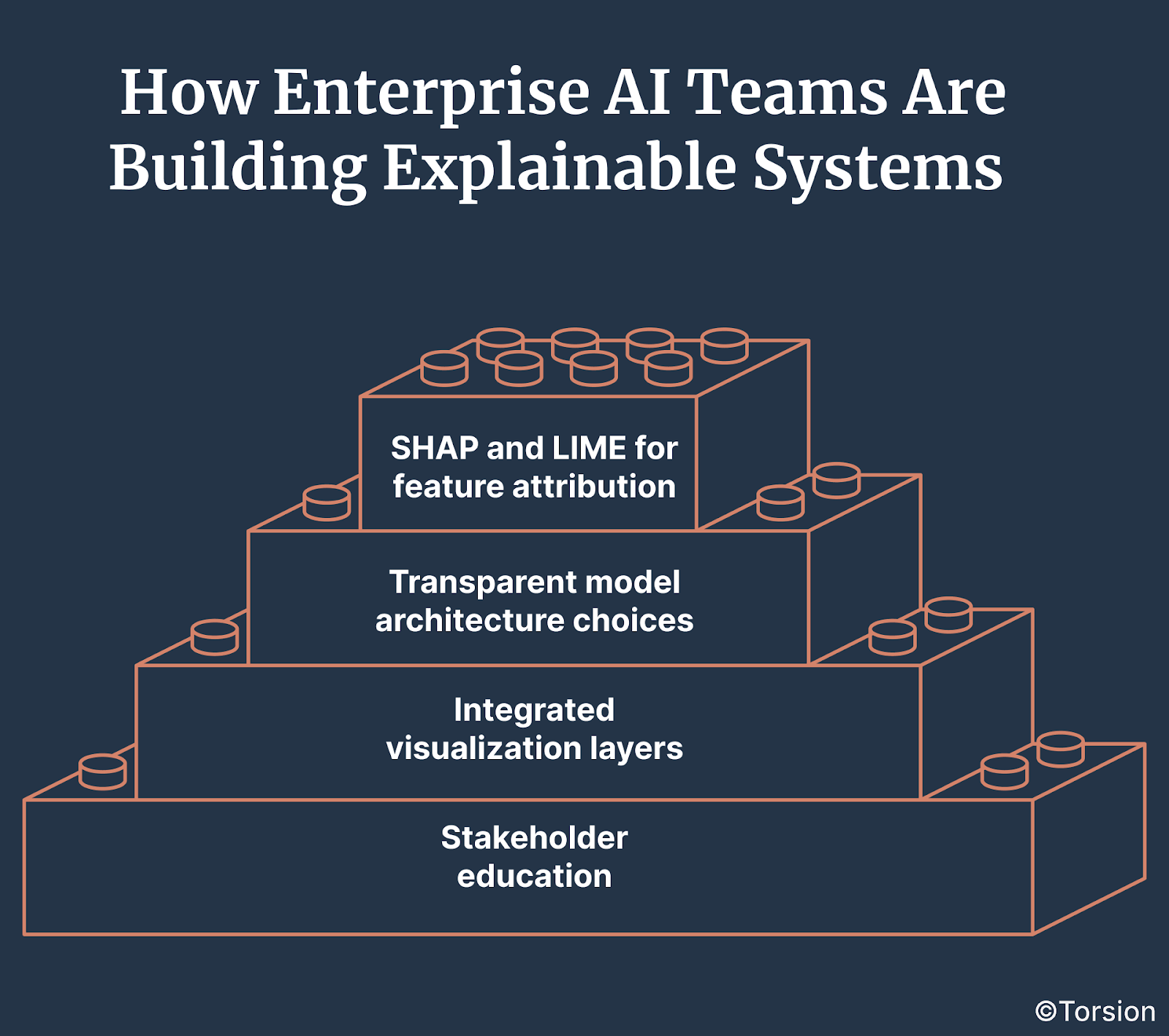

How Enterprise AI Teams Are Building Explainable Systems

There’s no one-size-fits-all approach, but there are patterns emerging in high-performing AI orgs:

- SHAP and LIME for feature attribution: These techniques show which input factors influenced a model’s output, so explanations can be shared at the feature level rather than by exposing raw protected data.

- Transparent model architecture choices: Where feasible, teams use interpretable models (e.g., decision trees, regression) for use cases where auditability outweighs accuracy gains.

- Integrated visualization layers: Heatmaps, decision paths, and causal graphs give investigators intuitive views into how fraud decisions were made.

- Stakeholder education: Workshops, explainability guides, and training programs ensure investigators, compliance leads, and execs can interpret outputs correctly and confidently.
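
For a linear risk score, SHAP values have a closed form, which makes the idea easy to see without the `shap` library: assuming independent features, feature i contributes w_i(x_i − E[x_i]) relative to the average claim, and the contributions sum exactly to the gap between this claim’s score and the average score. The feature names, weights, and means below are hypothetical; tree and deep models need the library’s model-specific explainers instead.

```python
def linear_shap(weights, feature_means, x):
    """Exact SHAP values for f(x) = bias + sum(w_i * x_i), assuming independent features."""
    return {name: w * (x[name] - feature_means[name]) for name, w in weights.items()}


# Hypothetical provider-level features, model weights, and population means.
weights = {"claims_per_day": 0.04, "shared_patient_links": 0.5, "doc_divergence": 2.0}
means = {"claims_per_day": 20.0, "shared_patient_links": 1.0, "doc_divergence": 0.2}
claim = {"claims_per_day": 45.0, "shared_patient_links": 4.0, "doc_divergence": 0.3}

attributions = linear_shap(weights, means, claim)
# Print the features that pushed this score up the most, largest first.
for name, value in sorted(attributions.items(), key=lambda kv: abs(kv[1]), reverse=True):
    print(f"{name}: {value:+.2f}")
```

The ranked list is exactly what an investigator view would surface next to a flagged case: which features pushed the score up, and by how much.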

Auditability: The Foundation for Trust and Scale

Explainability answers what happened. Auditability answers how, when, by whom, and under what system conditions.

Auditability requires:

- Complete logging of inputs, model versions, and decisions

- Access controls and usage tracking

- Internal + external audit readiness with standardized reporting

- Ongoing monitoring for concept drift, bias, and anomalous system behavior
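
A minimal sketch of the logging requirement: an append-only, hash-chained decision log that records inputs, model version, decision, and actor for each flagged claim. Each entry embeds the hash of the previous one, so editing any stored entry breaks verification. The class and field names are hypothetical, and a real deployment would persist entries to write-once storage rather than a Python list.

```python
import copy
import hashlib
import json
from datetime import datetime, timezone

GENESIS_HASH = "0" * 64


def _digest(body):
    """SHA-256 over a canonical JSON encoding of the entry body."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


class DecisionLog:
    """Append-only, hash-chained log of model decisions."""

    def __init__(self):
        self.entries = []

    def record(self, claim_id, model_version, inputs, decision, actor):
        body = {
            "ts": datetime.now(timezone.utc).isoformat(),
            "claim_id": claim_id,
            "model_version": model_version,
            "inputs": copy.deepcopy(inputs),  # snapshot, so later caller edits can't leak in
            "decision": decision,
            "actor": actor,
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS_HASH,
        }
        entry = {**body, "hash": _digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self):
        """Recompute the chain; any edited or reordered entry makes this False."""
        prev_hash = GENESIS_HASH
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash or entry["hash"] != _digest(body):
                return False
            prev_hash = entry["hash"]
        return True
```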

Balancing Transparency and Performance

Highly interpretable models may not always deliver the best fraud detection performance. But sacrificing explainability entirely is no longer an option.

Leading orgs are adopting hybrid approaches:

- Using interpretable models for early-stage filtering

- Leveraging deep models for nuanced decisions—but pairing them with explanation wrappers

- Running shadow models during audits to validate decisions

Flexibility is key: the model stack must evolve with fraud patterns and with regulatory pressure.
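
The hybrid pattern can be sketched as a two-stage decision plus a shadow comparison. Stage one is an interpretable screen that records why a claim did or did not proceed; stage two calls a more complex model only for claims that pass the screen; the shadow check measures how often an alternative model agrees with production. All callables and the 0.7 threshold are hypothetical placeholders.

```python
def hybrid_decision(claim, screen, deep_model, threshold=0.7):
    """Stage 1: interpretable screen. Stage 2: complex model only for claims that pass.

    `screen(claim)` returns (needs_review, reason); `deep_model(claim)` returns a 0-1 score.
    """
    needs_review, reason = screen(claim)
    if not needs_review:
        # The screen's own reason is the explanation for not escalating.
        return {"flag": False, "stage": "screen", "reason": reason}
    score = deep_model(claim)
    return {"flag": score >= threshold, "stage": "deep_model", "score": score}


def shadow_agreement(claims, primary, shadow, threshold=0.7):
    """Fraction of claims where a shadow model's flag matches the production model's."""
    if not claims:
        return 1.0
    matches = sum((primary(c) >= threshold) == (shadow(c) >= threshold) for c in claims)
    return matches / len(claims)


# Hypothetical stand-ins for a rules screen, a production model, and a shadow model.
def amount_screen(c):
    return c["amount"] > 1000, f"amount {c['amount']} vs. screening limit 1000"


def primary_model(c):
    return 0.9 if c["provider"] == "P7" else 0.2


def shadow_model(c):
    return 0.8 if c["amount"] > 4000 else 0.1


print(hybrid_decision({"amount": 5000, "provider": "P7"}, amount_screen, primary_model))
```

Low shadow agreement on an audit sample does not say which model is right; it marks the claims that deserve a human look before the decisions are defended.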

What “Good” Looks Like: Enterprise AI with Governance Built In

The north star for fraud teams isn’t just accuracy—it’s governed adaptability:

- Can your models adapt to new patterns while staying explainable?

- Can your decisions stand up to audit three quarters from now?

- Can your system learn while still justifying every output?

When the answer is yes, fraud detection stops being reactive—and starts becoming a continuous intelligence loop with operational, financial, and compliance ROI.

That’s the future. And with the right infrastructure, it’s already happening.

Fraud Detection Systems Must Evolve or Fail

The landscape of fraud detection is rapidly evolving. As fraudulent schemes grow increasingly sophisticated, the tools and systems designed to counter them must keep pace—or risk becoming obsolete.

Organizations investing in Enterprise AI systems for fraud detection must recognize that innovation alone is not enough.

Success hinges on implementing technologies that are not only powerful but also explainable, auditable, and continuously adaptive.

Learn more at https://torsion.ai/contact-us/