Key Takeaways

- Cloud-first LLMs break under rural constraints

- Edge-optimized LLMs work without stable internet

- Legacy EHRs require semantic wrappers, not APIs

- Federated learning enables shared intelligence without centralizing data

- Rural-first deployment reduces disruption and drives fast ROI

A nurse is clicking through four different windows just to log a flu shot.

The Wi-Fi blips again. The EHR freezes. She sighs, reaches for the fax machine, and starts over.

Outside, there’s a line of patients. One doc. No scribe. Just another Tuesday at a rural clinic in Nebraska.

Now jump coasts.

A hospital in Boston is testing an LLM that generates denial letters, summarizes notes, and routes appeals, all in real time, wrapped in APIs, priced by the token. The contrast is frustrating, even absurd.

Same country. Same reimbursement systems. But the tools? Built for two different planets.

Rural clinics aren’t slow adopters. They’re trapped by infrastructure.

Old systems. Low budgets. Spotty bandwidth. The average EHR setup in these places predates the iPhone. And still, we act like “just plug in the Enterprise AI” is a plan.

The reality is that rural providers are the ones who need it most.

The burnout’s real. Documentation eats hours. There’s no backup when someone’s out sick.

Enterprise AI isn’t a luxury out here. It’s survival tech. But only if it’s designed for local hardware, offline fallback, and workflows that don’t assume six engineers on staff.

So we keep coming back to this: what if we built models that run where the bandwidth’s bad? What if we stopped building for benchmarks and started designing for need?

That’s what custom LLMs make possible. And it changes the game for rural care.

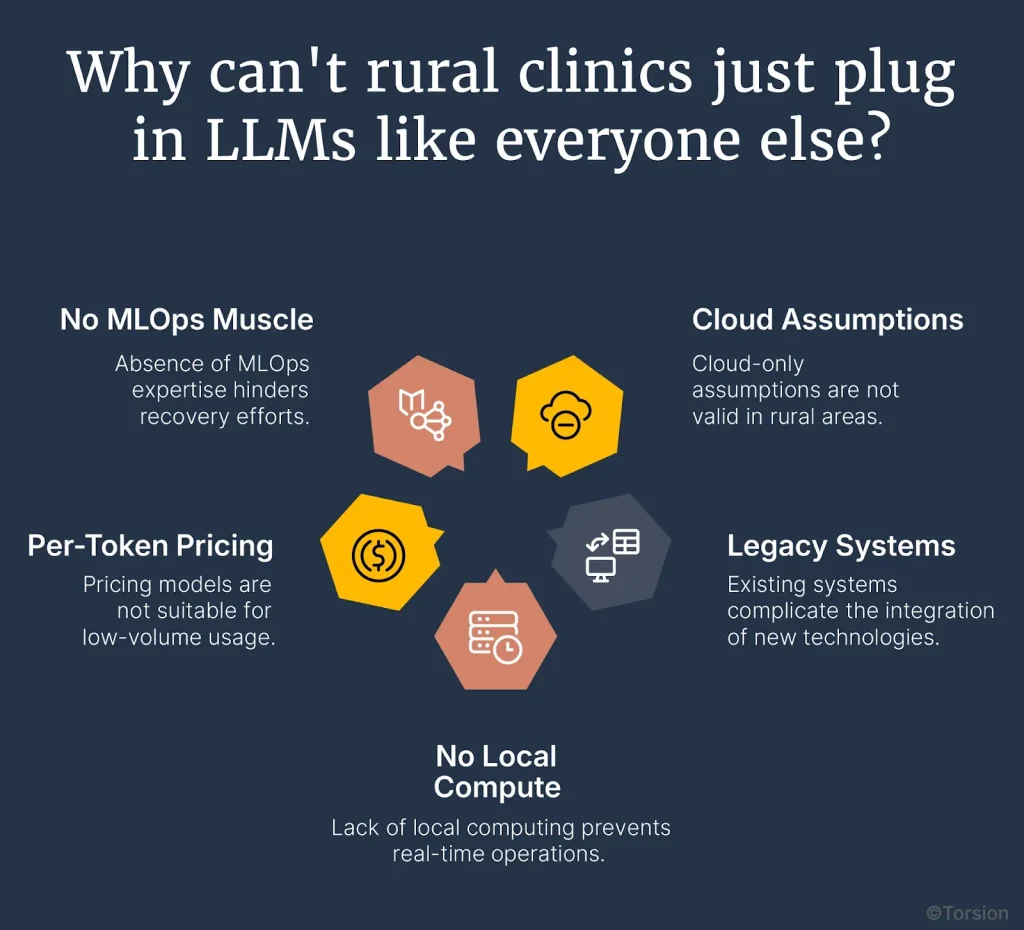

Why Can’t Rural Clinics Just Plug in LLMs Like Everyone Else?

You’re at a 40-bed hospital in rural Kansas. Connectivity drops during storms. The EHR was last updated God knows when, and the closest ML engineer is two counties away.

Someone hands you a Stanford-built LLM deployment guide. Step one: connect to a stable API.

That’s where it breaks. Not because the clinic’s unwilling. Because the entire LLM stack assumes an operating environment that doesn’t exist here.

Cloud-Only Assumptions Don’t Hold

Most LLMs today, whether served via API or deployed from open-weight checkpoints, require stable, low-latency internet. But rural connectivity isn’t just slow. It’s unreliable. Mid-session timeouts, complete drop-offs, and latency spikes make cloud inference a liability. These aren’t one-off issues. They’re embedded in the operating environment.

If you can’t guarantee that a model call completes within 300ms consistently, you can’t rely on that model to drive real-time documentation, triage, or referral logic. Period.
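One way to make that budget explicit is to wrap every cloud call in a hard deadline and answer from a local model when the deadline is missed. The sketch below is a minimal illustration, not a production client: `cloud_call` and `local_call` are hypothetical callables standing in for whatever remote API and on-device model a clinic actually uses, and the 300 ms figure is simply the budget named above.

```python
import concurrent.futures

LATENCY_BUDGET_S = 0.300  # the 300 ms budget discussed above


def call_with_fallback(cloud_call, local_call, prompt, budget_s=LATENCY_BUDGET_S):
    """Return (response, source), preferring the cloud model within the budget.

    cloud_call and local_call are hypothetical callables that take a prompt
    string and return a response string.
    """
    pool = concurrent.futures.ThreadPoolExecutor(max_workers=1)
    future = pool.submit(cloud_call, prompt)
    try:
        return future.result(timeout=budget_s), "cloud"
    except Exception:
        # Covers a missed deadline, a dropped connection, or any cloud-side error.
        return local_call(prompt), "local"
    finally:
        # Do not block the clinician on a hung request; the worker thread
        # is left to finish (or fail) in the background.
        pool.shutdown(wait=False)
```

The caller always gets an answer plus a label saying where it came from, so the interface can show when it is running on the local fallback.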

Legacy Systems Break Integration Logic

Enterprise AI systems depend on structured data access. But many rural clinics still rely on EHRs that predate FHIR and barely support HL7 v2 messaging. Without a stable interface to retrieve clinical notes, lab results, or patient history, you lose context injection. You lose prompt precision. You lose output reliability.

Even when data exists, it’s siloed. Files live on shared drives. Case notes are PDF scans. Structured data may as well be handwritten. That kills any hope of retrieval-augmented generation or session-state persistence—core capabilities for clinical-grade LLM deployments.

No Local Compute, No Real-Time Ops

Deploying a 13B or even 7B model locally? Not an option. Most rural sites don’t have the GPU capacity. Even CPU-bound inference gets dicey—underpowered servers throttle under moderate load. That means your choices are limited: offload to the cloud and risk instability, or deploy quantized, distilled models that actually fit the local hardware.

But here’s the catch: the minute you cut model size, you impact fluency, factual grounding, and reasoning depth. So now you’re not just tuning prompts—you’re redesigning the model-policy alignment layer to prevent hallucinations under constrained inference.

Per-Token Pricing Models Break at Low Volume

Most LLM APIs are priced per token, usually quoted per thousand or per million tokens. That works at scale. It doesn’t work in low-volume, high-context environments. A single multi-turn interaction, such as triaging a chronic condition or summarizing an appeal, can easily hit 6,000–10,000 tokens. Multiply that by 30–40 patients per day, and even a small clinic is pushing roughly 180,000–400,000 tokens daily, with costs ballooning into unsustainable territory.
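The arithmetic is easy to run for your own clinic. The sketch below assumes one multi-turn interaction per patient and uses the token and patient ranges from the paragraph above; the price and the number of working days are parameters you supply, since no specific vendor rate is assumed here.

```python
def daily_tokens(tokens_per_interaction, patients_per_day):
    """Tokens processed per day, assuming one interaction per patient."""
    return tokens_per_interaction * patients_per_day


def monthly_cost(tokens_per_day, price_per_1k_tokens, working_days=22):
    """Monthly spend for a given per-1,000-token price (hypothetical input)."""
    return tokens_per_day / 1_000 * price_per_1k_tokens * working_days


low = daily_tokens(6_000, 30)    # lower bound of the ranges in the text
high = daily_tokens(10_000, 40)  # upper bound of the ranges in the text
print(f"{low:,} to {high:,} tokens per day")
```

Feed `low` and `high` into `monthly_cost` with your vendor's actual rate to see the spread a per-token contract would produce.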

They don’t need batch-scale economics. They need workload-aware routing, semantic caching, and offline-first fallback. Today’s commercial pricing structures weren’t designed with rural healthcare realities in mind.
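Semantic caching is the easiest of those three to picture: if a new prompt is close enough to one already answered, reuse the stored answer instead of paying for another model call. The sketch below is a toy version under loud assumptions: it uses bag-of-words cosine similarity where a real system would use an embedding model, the 0.8 threshold is arbitrary, and `model_call` is a hypothetical callable. A cache like this only makes sense for prompts that carry no patient-specific content.

```python
import math
import re
from collections import Counter


def _vectorize(text):
    """Lowercased bag-of-words vector (a stand-in for a real embedding)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def _cosine(a, b):
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


class SemanticCache:
    def __init__(self, threshold=0.8):
        self.threshold = threshold  # arbitrary illustrative cutoff
        self._entries = []          # list of (vector, response)

    def get(self, prompt):
        """Return the best cached response above the threshold, else None."""
        query = _vectorize(prompt)
        best_score, best_response = 0.0, None
        for vector, response in self._entries:
            score = _cosine(query, vector)
            if score > best_score:
                best_score, best_response = score, response
        return best_response if best_score >= self.threshold else None

    def put(self, prompt, response):
        self._entries.append((_vectorize(prompt), response))


def answer(prompt, cache, model_call):
    """Return (response, was_cached); call the model only on a cache miss."""
    cached = cache.get(prompt)
    if cached is not None:
        return cached, True
    response = model_call(prompt)
    cache.put(prompt, response)
    return response, False
```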

No MLOps Muscle Means No Recovery Path

There’s no prompt engineer on staff. No retraining cycles. No A/B testing infrastructure. No human-in-the-loop pipeline to validate outputs before they hit the EHR.

So when something breaks—when a model starts generating off-policy language or loses grounding—there’s no escalation plan. No safety net. Just degraded trust and another feature that gets turned off.

Where does NLP actually move the needle in rural care?

So the commercial stack doesn’t fit. Fine. But throw it out entirely? Not a chance. Because buried under all that complexity is a huge opportunity if you scope it right.

Language models don’t need to do everything. They just need to solve the specific bottlenecks rural clinics hit every day: documentation overload, slow triage, inaccessible risk signals, and referral uncertainty. These aren’t theoretical pain points. They’re the backbone of care in low-resource settings. And they’re exactly where custom LLMs outperform generic ones.

Clinical documentation: The most expensive copy-paste job in medicine

In rural settings, documentation is a tax on already-thin capacity. Providers spend up to 4 hours a day manually charting, coding, and summarizing. That’s not just burnout—that’s delayed billing, inconsistent quality, and compliance exposure.

Fine-tuned language models can prefill notes, suggest CPT/ICD codes, and synthesize visit summaries from structured vitals, transcripts, and prior notes. The goal isn’t to eliminate documentation. It’s to automate the 80% that doesn’t require human nuance.

The lift? 30–40% less time per note, consistent coding accuracy, and fewer downstream denials. That’s not fluff. That’s cash flow and chart hygiene.

Telemedicine triage: When one nurse manages three counties

Triage is especially critical in rural care, where distance is measured in hours. Patients submit vague symptoms through portals or voicemail. Providers have to decide: is this urgent? Or can it wait?

An LLM tuned on clinical intake patterns can do the first pass: normalize symptoms, extract red flags, and recommend escalation paths. No hallucinations. No diagnosis. Just structured pre-triage, routed to the right human with context.
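To make "structured pre-triage" concrete, here is a rough sketch of what the output record and routing rule might look like. Everything in it is an assumption for illustration: the `PreTriage` fields, the queue names, and especially the keyword matcher, which merely stands in for the tuned model. The phrase list is a placeholder, not a clinical red-flag list.

```python
from dataclasses import dataclass, field

# Hypothetical phrases for illustration only; not a clinical list.
RED_FLAGS = ("chest pain", "shortness of breath", "slurred speech")


@dataclass
class PreTriage:
    message: str                       # original patient message, unchanged
    red_flags: list = field(default_factory=list)
    route: str = "routine_queue"       # hypothetical queue name


def pre_triage(message):
    """Normalize the message, collect red flags, and pick a human queue.

    The substring match below stands in for the tuned model's extraction
    step. The function never diagnoses; it only routes to a person.
    """
    text = " ".join(message.lower().split())  # collapse case and whitespace
    found = [flag for flag in RED_FLAGS if flag in text]
    route = "nurse_review_now" if found else "routine_queue"
    return PreTriage(message=message, red_flags=found, route=route)
```

The point of the shape is that a human always receives the original message alongside the extracted flags, so the model's first pass can be checked at a glance.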

In a low-staff, high-latency environment, that’s the difference between reactive care and proactive prioritization.

Unstructured risk: When your data is trapped in PDFs

Population health algorithms typically rely on structured claims or vitals data. But rural clinics are sitting on a different asset: decades of unstructured notes, scanned letters, and free-text assessments.

LLMs unlock that. They extract smoking history buried in a SOAP note. Flag a missed mammogram based on an intake form. Surface diabetes risk from diet logs tucked in the chart. It’s not about predictive modeling—it’s about visibility. NLP becomes a force multiplier for care coordination when structured data doesn’t exist.

Referral logic: When every specialist is two hours away

Specialist access is limited. But referrals aren’t binary. They’re judgment calls. A custom-tuned LLM can flag when guideline-based consults are likely necessary based on case similarity and protocol fit. Not decision-making. Decision support.

Over time, this improves referral quality, reduces unnecessary handoffs, and makes the most of limited downstream capacity.

What do custom LLMs look like when they’re built for rural reality?

We’ve already seen where NLP can deliver. The next question is: how do you actually build something that works in the field, not just the lab?

That’s where custom LLMs earn their place. Not because they’re flashy but because they’re functional, resilient, and scoped precisely to the environment they’re deployed in.

Can models run without reliable internet?

Yes, if you build them that way. Cloud-first design assumes uninterrupted, low-latency connectivity. But rural networks drop. That’s why edge deployment matters.

Custom LLMs can be distilled, quantized, and run entirely on local CPUs or modest GPUs. No streaming dependencies. No round-trips to the cloud just to autocomplete a SOAP note. Here, offline-first is table stakes.

Can underpowered clinics run real models?

They can if you stop pretending every clinic needs GPT-4. A well-optimized 2B–3B model, quantized, trained on rural documentation patterns, and scoped to narrow tasks, can fit in under 4GB of RAM. No exotic hardware. No scaling headaches.
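A quick back-of-the-envelope check shows where the 4GB figure comes from. The sketch below counts memory for the weights alone (parameters times bits per weight); it ignores the KV cache, activations, and runtime overhead, so treat the results as a floor rather than a sizing guide.

```python
def weight_memory_gb(params_billions, bits_per_weight):
    """Approximate weight storage in GB (10**9 bytes), weights only."""
    return params_billions * bits_per_weight / 8


for bits in (16, 8, 4):
    print(f"3B model at {bits}-bit: {weight_memory_gb(3, bits):.1f} GB")
```

At 16-bit precision a 3B model's weights alone exceed 4GB, which is why quantization to 8-bit or 4-bit is what makes this class of model practical on ordinary clinic hardware.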

Inference latency stays low. Response quality stays usable. And ops don’t grind to a halt because your server racks can’t handle 30 billion parameters.

Do these models understand rural documentation?

Only if you train them right. Rural provider notes are different. Fewer words. More shorthand. Looser structure. Generic LLMs struggle with that.

Custom models trained on local language patterns, including referrals, SOAP notes, and even voicemail transcripts, adapt faster. They autocomplete more naturally, hallucinate less, and require fewer post-run corrections. That’s real value for time-starved clinicians.

Can economics actually work at a small scale?

Token billing breaks here. A clinic seeing 40 patients a day with multi-turn workflows hits volume caps fast. That’s why edge-deployed, license-based models make sense.

Fixed pricing. No surprise bills. And if you federate across multiple sites? Even better. One shared model, trained across the network, improving over time without ever shipping data upstream.
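The aggregation step behind that idea can be sketched in a few lines. This is federated averaging in its simplest form, chosen here as an assumption since the article does not name a specific algorithm: each site trains locally and sends back only its model weights and an example count, and the coordinator combines them weighted by how much data each site saw. Weights are plain lists of floats to keep the sketch self-contained.

```python
def federated_average(site_updates):
    """Combine per-site weights into one shared model.

    site_updates: list of (weights, num_examples) pairs, where weights is a
    list of floats of equal length at every site. No patient records are
    passed in, only the locally trained parameters and a count.
    """
    total = sum(n for _, n in site_updates)
    if total == 0:
        raise ValueError("no training examples reported by any site")
    dim = len(site_updates[0][0])
    averaged = [0.0] * dim
    for weights, n in site_updates:
        if len(weights) != dim:
            raise ValueError("all sites must report weights of the same shape")
        for i, w in enumerate(weights):
            averaged[i] += w * n / total  # larger sites count for more
    return averaged


# Two hypothetical clinics: one with 100 local examples, one with 300.
shared = federated_average([([1.0, 2.0], 100), ([3.0, 4.0], 300)])
```

In practice the averaged weights are sent back to every site for the next round of local training, which is how the shared model improves without any chart leaving the building.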