Key Takeaways

- Nurses lose up to 35% of their shift to documentation.

- Documentation, staffing, and med workflows are major burnout drivers.

- Model-driven tools can help nurses save up to 2.5 hours per shift.

- Smart scheduling reduces overtime and improves fairness.

- Tools must be embedded, explainable, and designed with nurses.

- Workflow redesign, not wellness programming, reduces burnout at the root.

Nurses Aren’t Burned Out. They’re Burned Through.

You’ve heard it said: “Nurses are burning out.”

Here’s the truth: they’re not burning out. They’re being burned through by legacy systems, brittle workflows, and tech that treats them as an afterthought.

Let’s strip the fluff. Nurses don’t need coaching on how to handle exhaustion. What they need is relief from being overtasked, under-supported, and structurally ignored.

What’s actually driving the drain?

- Time Poverty: Nurses lose up to 35% of their shift to documentation, much of it charting, correcting, and re-entering the same information across siloed systems.

- Cognitive Load: Medication workflows are still full of blind spots, including missing vitals, clunky order checks, and alerts that fire too late or too often.

- Schedule Volatility: Most rosters are built on yesterday’s averages. They miss acuity, fatigue, and fairness, leading to forced overtime, floating without support, and a mounting sense of organizational indifference.

And it’s not just a morale problem. It’s a balance-sheet issue:

- $6.3 billion: Estimated annual cost of nurse turnover in the U.S. alone.

- 2+ hours per nurse, per shift: Time that could be reclaimed with model-supported workflows.

- 25%: Proportion of preventable safety events tied to communication or coordination breakdowns, not individual mistakes.

This is clinical infrastructure debt, not a people problem.

Every overtime hour, every retyped chart, every ignored fatigue score is a red flag. And every system that fails to act on them is compounding risk: financial, operational, and ethical.

If burnout is showing up on your nursing floors, it means your system design is broken. That’s the leadership signal.

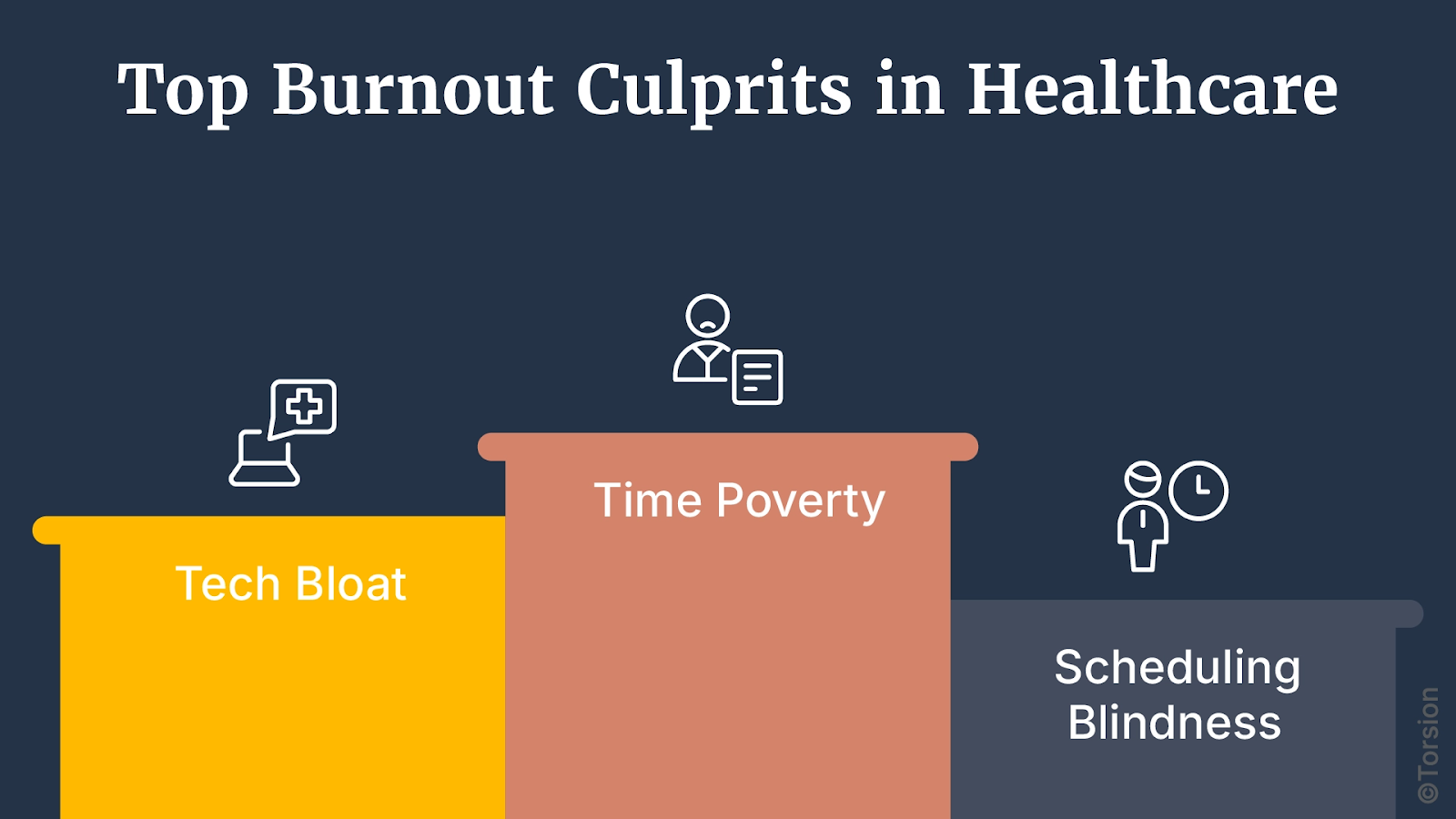

The Real Story of Burnout: Time Poverty, Tech Bloat, and Scheduling Blindness

Burnout isn’t random. It follows a pattern, an architecture of friction. In nursing, it shows up in three failure zones that consistently drag down performance, safety, and morale.

1. Time Poverty: The Documentation Black Hole

Most nurses spend 20% to 35% of their shift documenting. That’s a time sink and a clinical compromise.

Vitals, assessments, handoffs, and incident logs are often scattered across multiple systems. Fields repeat. Interfaces differ. Data disappears.

The result? Rework becomes the norm. Nurses stay late to close charts. Critical context gets buried. Patient-facing time shrinks.

And this is a systems design failure.

2. Tech Bloat: Medication Workflows Built to Fail

Medication workflows are high-risk zones and still absurdly manual. Nurses reconcile orders, cross-check interactions, and verify dosing across tools that don’t surface the right context at the right time.

Alerts either fire too often or not at all. Lab values are late. Order sets lack nuance. And when predictive tools do exist, they often don’t learn from local outcomes or fit inside the workflow.

When the system doesn’t surface insight, it offloads the risk onto nurses.

3. Scheduling Blindness: When Rosters Ignore Reality

Shift assignments still rely on backward-looking rules and gut feel. Most don’t account for:

- Real-time acuity or patient churn

- Fatigue from back-to-back shifts

- Equity in float assignments

- Nurses’ own preference profiles

That’s why burnout spikes in unpredictable units. Why last-minute changes happen weekly. And why fairness is one of the most cited factors in early exit interviews.

Smart staffing doesn’t mean filling slots. It means matching capacity to real demand before things break.
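To make “matching capacity to real demand” concrete, here is a minimal Python sketch that converts patient acuity into required nurse-shifts and flags short units before the shift starts. The acuity weights, the 12-hour shift length, and the names `Unit`, `required_nurses`, and `staffing_gaps` are hypothetical placeholders, not validated staffing ratios.

```python
from dataclasses import dataclass

# Hypothetical nursing hours needed per patient per 12-hour shift, keyed by acuity
# level (1 = lowest). Placeholder values for illustration, not validated ratios.
HOURS_PER_PATIENT = {1: 3.0, 2: 4.5, 3: 6.0, 4: 9.0, 5: 12.0}
SHIFT_HOURS = 12.0


@dataclass
class Unit:
    name: str
    patient_acuities: list[int]  # current census, one acuity level per patient
    rostered_nurses: int


def required_nurses(unit: Unit) -> float:
    """Convert acuity-weighted workload into full-shift nurse equivalents."""
    workload_hours = sum(HOURS_PER_PATIENT[a] for a in unit.patient_acuities)
    return workload_hours / SHIFT_HOURS


def staffing_gaps(units: list[Unit]) -> dict[str, float]:
    """Return each unit whose acuity-driven demand exceeds its roster, with the shortfall."""
    gaps = {}
    for unit in units:
        shortfall = required_nurses(unit) - unit.rostered_nurses
        if shortfall > 0:
            gaps[unit.name] = round(shortfall, 2)
    return gaps


units = [
    Unit("4 West", [2, 2, 3, 4, 5, 3], rostered_nurses=2),
    Unit("5 East", [1, 1, 2, 2], rostered_nurses=2),
]
print(staffing_gaps(units))  # {'4 West': 1.5}
```

Both units have the same headcount on the roster, but 4 West’s sicker census needs 3.5 nurse-shifts, so it surfaces with a 1.5-nurse gap while 5 East does not. A headcount-only roster would treat them as equal.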

The Hidden Pattern: These Aren’t Isolated Failures

Each of these frictions (documentation overload, medication risk, and staffing chaos) looks like a separate operational issue. But they’re all symptoms of the same root cause:

We’ve layered digital tools on top of legacy environments without redesigning the operating system underneath.

The result? A high-friction workplace where nurses aren’t supported by intelligence, but undermined by inefficiency.

This is why Enterprise AI has to be an embedded architectural shift, not a bolt-on tool. Until the system is built to adapt, nurses will keep burning through.

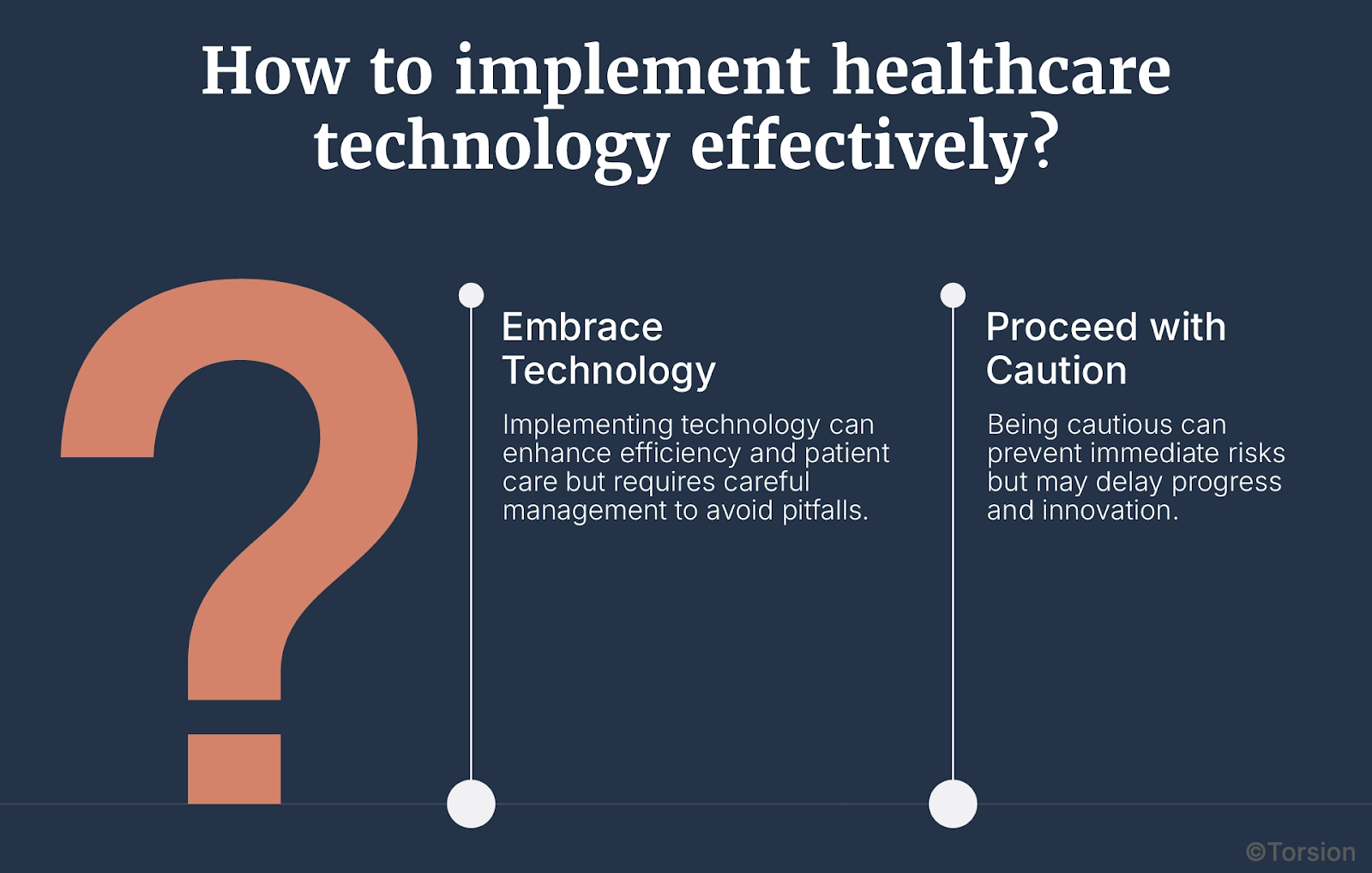

Promise vs. Pitfall: Where Enterprise AI Helps and Where It Fails

Enterprise AI is not a silver bullet. It can’t fix nursing burnout on its own. But when architected correctly, it can return hours, reduce risk, and restore clinical focus.

The Promise

In early deployments across documentation, scheduling, and med safety, we’re seeing real lift:

- Ambient documentation tools are reclaiming 2 to 2.5 hours per nurse per shift in some pilots.

- Predictive staffing models have reduced overtime hours by up to 15% while improving perceived fairness.

- Medication risk scoring systems are reducing adverse drug events by flagging at-risk patients before deterioration happens.

These are not software upgrades. They’re infrastructure corrections.

When tools are embedded directly in workflows, trained on local data, and designed for clinical trust, they create slack in the system: slack that translates to more time at the bedside, less mental overload, and lower attrition.

The Pitfalls

But for every successful implementation, many more stall. Why?

1. Pilot Purgatory

Too many systems launch point solutions that never scale beyond a single unit or workflow. They get trapped in endless evaluation cycles with no path to operational integration.

Why it happens:

- No cross-system orchestration

- No budget for MLOps or governance

- No feedback loop to guide iteration

2. Model Decay

Machine learning models don’t fail in the lab. They fail silently, in the field.

Acuity patterns change. Documentation habits evolve. Drug interactions vary by population. Without retraining, most models degrade within 6–12 months.

Without drift monitoring and retraining cycles, intelligence becomes noise.
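One common way to catch this kind of silent decay is the population stability index (PSI), which compares the distribution of a model input or score at training time with what the model sees in production. The sketch below is a plain-Python illustration, not any vendor’s monitoring API; the 10-bin layout and the 0.25 alert threshold are assumptions, the latter a widely used rule of thumb rather than a clinical standard.

```python
import math


def population_stability_index(expected: list[float], actual: list[float], bins: int = 10) -> float:
    """PSI between a baseline (training-time) sample and a recent production sample.

    Bin edges span the baseline's range; production values outside that range are
    clamped into the edge bins. A small epsilon avoids log(0) for empty bins.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def proportions(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        return [c / len(values) for c in counts]

    eps = 1e-6
    psi = 0.0
    for e, a in zip(proportions(expected), proportions(actual)):
        e, a = max(e, eps), max(a, eps)
        psi += (a - e) * math.log(a / e)  # PSI term: (actual - expected) * ln(actual / expected)
    return psi


# Hypothetical example: acuity scores at training time vs. a population that has shifted upward.
baseline = [float(v) for v in range(100)]
recent = [v + 50.0 for v in baseline]

psi = population_stability_index(baseline, recent)
if psi > 0.25:  # common rule of thumb: above 0.25 signals a significant shift
    print(f"PSI {psi:.2f}: drift detected, queue model for review and retraining")
```

The point is that the check runs on live inputs continuously, so decay shows up as a number on a dashboard months before it shows up as a missed event on the floor.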

3. Trust Debt

Here’s the quiet killer: tools that look smart but feel dumb.

When a nurse gets a pop-up they don’t understand, in a system they didn’t ask for, during a shift that’s already short-staffed, they skip it. And they should.

That skipped interaction is not resistance. It’s self-protection. The cognitive cost of using a tool they don’t trust is higher than doing the task manually.

Trust Debt is what accumulates when clinicians are asked to use tools that weren’t built with them, don’t explain themselves, and can’t be corrected in real time.

If it’s not explainable, not embedded, and not responsive, it’s not intelligent either. It’s just interface noise.

Leadership Blind Spot: Treating Enterprise AI as IT, Not Infrastructure

Let’s call out the strategic failure: most leadership teams still treat Enterprise AI like a software purchase. A tool to buy. A feature to pilot. A checkbox to tick.

That framing is why most Enterprise AI initiatives stall at the pilot stage or, worse, become shelfware.

Because Enterprise AI is a structural shift.

You don’t plug intelligence into broken workflows. You redesign the workflows to accommodate intelligence.

Where the Misalignment Starts

Most Enterprise AI implementations are scoped like IT projects:

- Limited to one department

- Driven by technical feasibility, not operational need

- Managed by procurement, not transformation leads

But once deployed, they start breaking things upstream:

- Staffing logic no longer matches patient acuity

- Data pipelines expose years of inconsistency and technical debt

- Predictive tools call into question who owns the decision, and who’s liable

These are governance, workflow, and accountability challenges. And unless leaders are ready to shift the operating model around AI, the tools won’t stick.

What Enterprise AI Actually Touches

A serious Enterprise AI deployment rewires the logic of clinical operations:

- Staffing: From “who’s available” to “who’s optimal for this patient mix, right now”

- Documentation: From rigid form-fill to flexible, adaptive context capture

- Governance: From IT-led procurement to multi-stakeholder design teams with nursing, ethics, and compliance at the table

- Safety culture: From individual vigilance to system-level pattern recognition and decision support

And critically: the moment Enterprise AI influences a clinical decision, it becomes a quality-of-care issue, not a tech initiative.

That’s the blind spot: Enterprise AI is not a product. It’s an operating system shift. If your org isn’t ready for that, no tool will deliver lasting value.

ROI doesn’t come from a model’s accuracy. It comes from leadership’s willingness to restructure how work gets done.

The Enterprise AI Maturity Model for Nursing

Enterprise AI doesn’t scale because of model performance. It scales when the system around it is built to learn, adapt, and relieve cognitive load in real time.

To move from pilot to permanence, healthcare leaders need a clear framework. A maturity model that maps not just features deployed, but friction removed.

Here’s that model, built specifically for nursing operations:

Level 1: Task Automation

Make it faster.

At this stage, health systems deploy point solutions to shave minutes off repetitive tasks. Think:

- Ambient documentation

- Basic rostering tools

- Inventory and supply tracking bots

The impact is visible but shallow. These tools reduce administrative time but rarely influence clinical decisions. Adoption is fragile. Drift is common.

Key Limitation: No feedback loop. These systems don’t learn; they execute.

Level 2: Embedded Decision Intelligence

Make it smarter.

This level integrates predictive logic into core workflows:

- Acuity-adjusted staffing recommendations

- Early warning systems for adverse events

- Risk-based medication protocols

- Dynamic task allocation based on real-time capacity

At Level 2, Enterprise AI starts to act as a clinical co-pilot, not just a clerical assistant.

Key Challenge: Trust and explainability. If the logic behind a recommendation isn’t clear, or if it creates rework, adoption stalls.
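Dynamic allocation based on real-time capacity can start far simpler than a learned model, and a transparent rule set is also easier to explain at the bedside. The sketch below picks who floats by filtering for the required skill and a fatigue cap, then preferring the nurse who has floated least and worked the fewest consecutive shifts. `Nurse`, `MAX_CONSECUTIVE`, and `pick_float_nurse` are hypothetical names, and the cap of three consecutive shifts is an assumed policy, not a standard.

```python
from dataclasses import dataclass
from typing import Optional

MAX_CONSECUTIVE = 3  # hypothetical policy: no floating after 3 back-to-back shifts


@dataclass
class Nurse:
    name: str
    skills: set[str]
    consecutive_shifts: int  # shifts already worked back-to-back
    floats_this_period: int  # times floated in the current scheduling period


def pick_float_nurse(pool: list[Nurse], required_skill: str) -> Optional[Nurse]:
    """Pick a float nurse: skill-eligible and under the fatigue cap, then fewest
    floats this period, then fewest consecutive shifts (name breaks ties)."""
    eligible = [
        n for n in pool
        if required_skill in n.skills and n.consecutive_shifts < MAX_CONSECUTIVE
    ]
    if not eligible:
        return None  # no safe match: escalate to the charge nurse instead of forcing one
    return min(eligible, key=lambda n: (n.floats_this_period, n.consecutive_shifts, n.name))


pool = [
    Nurse("Ana", {"tele", "med-surg"}, consecutive_shifts=1, floats_this_period=2),
    Nurse("Ben", {"tele"}, consecutive_shifts=3, floats_this_period=0),
    Nurse("Cho", {"tele"}, consecutive_shifts=2, floats_this_period=1),
]
choice = pick_float_nurse(pool, "tele")
print(choice.name if choice else "escalate")  # Cho: Ben is over the fatigue cap
```

Ben has floated least, but his third straight shift puts him over the cap, so the equity rule never overrides the fatigue rule. Returning `None` instead of a forced pick keeps the final call with a human.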

Level 3: Adaptive Systems with Loop Closure

Make it learn.

This is where real transformation happens.

- Predictive staffing tools adapt based on outcomes, not just forecasts

- Documentation tools tune their output based on nurse editing patterns

- Medication models refine recommendations based on patient recovery and local trends

- Drift monitoring triggers retraining without disrupting care

At Level 3, the system closes the loop between action, outcome, and system behavior.
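Closing the loop needs a measurable signal. For documentation, one candidate is how much of each AI-drafted note nurses end up rewriting. This sketch uses Python’s standard `difflib.SequenceMatcher` to score word-level edits; the 0.3 review threshold and the function names are assumptions, and the sketch only surfaces the signal; it does not retune anything by itself.

```python
import difflib
from statistics import mean


def edit_fraction(draft: str, final: str) -> float:
    """Share of an AI draft the nurse changed: 0.0 = accepted as-is, 1.0 = fully rewritten."""
    # ratio() is a word-level similarity in [0, 1]; invert it to get edit effort.
    return 1.0 - difflib.SequenceMatcher(None, draft.split(), final.split()).ratio()


def needs_tuning(note_pairs: list[tuple[str, str]], threshold: float = 0.3) -> bool:
    """Flag a note template for review when nurses rewrite too much of it on average."""
    return mean(edit_fraction(draft, final) for draft, final in note_pairs) > threshold


# Hypothetical (AI draft, nurse-signed final) pairs.
pairs = [
    ("Patient resting comfortably. No complaints.", "Patient resting comfortably. No complaints."),
    ("Pt ambulated in hall.", "Patient reports new chest pain at 0300; MD notified."),
]
print(round(edit_fraction(*pairs[0]), 2), needs_tuning(pairs))  # 0.0 True
```

Tracked per template and per unit over time, a falling edit fraction is one concrete way to see whether the tool is actually learning from the people using it.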

Most health systems stall at Level 1 or 2. Why? Because they keep measuring “features launched” instead of “cognitive load reduced.”

If your models aren’t improving over time, they’re decaying. If your teams aren’t trusting the system more month over month, you’re scaling friction.

At the end of the day, success isn’t more dashboards. It’s fewer decisions made under duress.

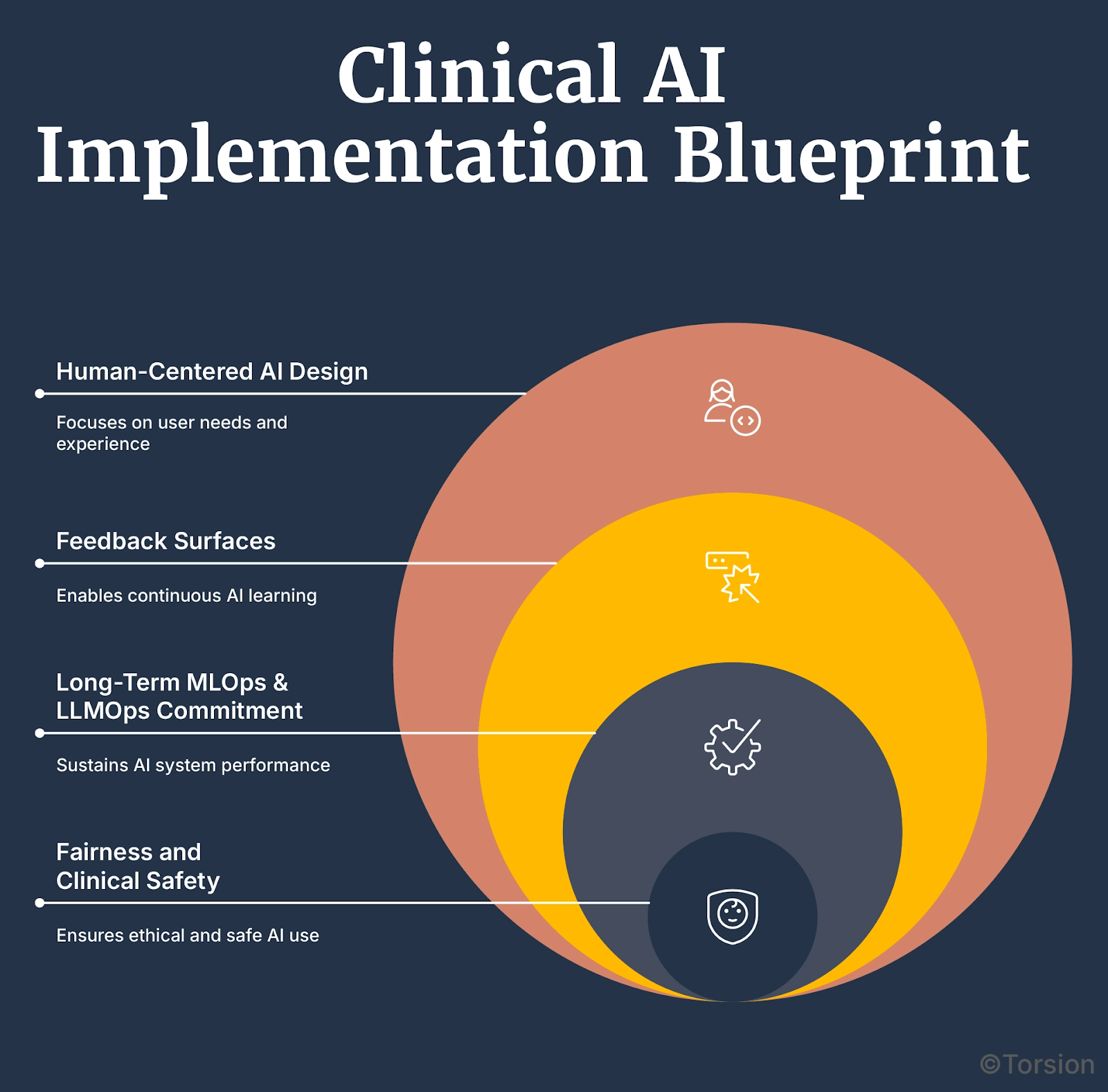

What Makes or Breaks Implementation: A Clinical Blueprint

If the previous sections explain why Enterprise AI matters in nursing, this one explains how to make it stick. Most deployments fail because of broken implementation, more than anything else.

Here’s the execution playbook that separates scalable systems from shelfware.

A. Human-Centered AI Design

Tools don’t work unless nurses trust them. And that trust isn’t earned through training videos; it’s earned through involvement.

- Co-design with nurses from Day 0, not just through post-launch feedback

- Build with workflow fidelity: the tool must fit the tempo of a real shift, not a sandbox

- Prioritize explainability: if the model’s output can’t be justified at the bedside, it won’t be used

Don’t design for nurses. Design with them.

B. Feedback Surfaces That Let Systems Learn

Most Enterprise AI tools in hospitals run in open loop. They act, but they don’t learn.

Closed-loop systems require:

- Instrumentation: Capture how nurses interact with the tool (accept, ignore, override)

- Outcome linking: Tie decisions to downstream patient results

- Loop logic: Trigger model retraining based on drift or friction, not just calendar cycles
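In miniature, instrumentation and loop logic might look like this: log each accept, ignore, or override, and trigger retraining when drift is detected or when friction stays high across enough interactions. `FeedbackLog`, `should_retrain`, and the 40% friction and 50-event thresholds are illustrative assumptions, not settings from any particular product.

```python
from collections import Counter
from dataclasses import dataclass, field

ACTIONS = {"accept", "ignore", "override"}


@dataclass
class FeedbackLog:
    """Hypothetical in-memory record of how nurses respond to one model's recommendations."""
    counts: Counter = field(default_factory=Counter)

    def record(self, action: str) -> None:
        if action not in ACTIONS:
            raise ValueError(f"unknown action: {action!r}")
        self.counts[action] += 1

    @property
    def total(self) -> int:
        return sum(self.counts.values())

    def friction_rate(self) -> float:
        """Share of recommendations nurses ignored or overrode."""
        if self.total == 0:
            return 0.0
        return (self.counts["ignore"] + self.counts["override"]) / self.total


def should_retrain(log: FeedbackLog, drift_detected: bool,
                   max_friction: float = 0.4, min_events: int = 50) -> bool:
    """Retrain on detected drift, or on sustained friction once there is enough data."""
    sustained_friction = log.total >= min_events and log.friction_rate() > max_friction
    return drift_detected or sustained_friction


log = FeedbackLog()
for action in ["accept"] * 30 + ["override"] * 20 + ["ignore"] * 5:
    log.record(action)
print(f"friction {log.friction_rate():.0%}, retrain: {should_retrain(log, drift_detected=False)}")
```

The `min_events` floor keeps one bad night from triggering a retrain, while still letting sustained overrides count as evidence, not as noncompliance.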

A “learning system” isn’t one that gets smarter in the lab. It’s one that improves in the wild.

C. Long-Term MLOps & LLMOps Commitment

No model stays accurate forever. This is especially true in nursing, where variables shift fast: protocols change, populations shift, workflows adapt.

What’s required:

- Real-time model monitoring

- Scheduled and conditional retraining pipelines

- Versioning and rollback safeguards

- Human-in-the-loop checkpoints for clinical-safety-sensitive actions

Intelligence without MLOps is like a plane without maintenance logs: it might fly, but not for long.
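Versioning, rollback, and a human-in-the-loop checkpoint can be sketched as a tiny registry: no version deploys without clinical sign-off, and a monitored live metric falling below an agreed floor restores the previous version. `ModelRegistry`, `guard`, the version names, and the metric values are hypothetical; a real deployment would typically use a dedicated model registry, not an in-memory list.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ModelRegistry:
    """Hypothetical in-memory registry; history holds deployed versions, oldest first."""
    history: list[str] = field(default_factory=list)

    @property
    def active(self) -> Optional[str]:
        return self.history[-1] if self.history else None

    def deploy(self, version: str, clinical_signoff: bool) -> None:
        # Human-in-the-loop checkpoint: nothing reaches the floor without clinical sign-off.
        if not clinical_signoff:
            raise PermissionError(f"{version} needs clinical safety sign-off")
        self.history.append(version)

    def rollback(self) -> str:
        """Retire the active version and reactivate the one before it."""
        if len(self.history) < 2:
            raise RuntimeError("no earlier version to roll back to")
        self.history.pop()
        return self.history[-1]


def guard(registry: ModelRegistry, live_metric: float, floor: float) -> Optional[str]:
    """Roll back automatically when a monitored live metric falls below the agreed floor."""
    if live_metric < floor and len(registry.history) >= 2:
        return registry.rollback()
    return registry.active


registry = ModelRegistry()
registry.deploy("med-risk-v1", clinical_signoff=True)
registry.deploy("med-risk-v2", clinical_signoff=True)
print(guard(registry, live_metric=0.62, floor=0.70))  # med-risk-v1
```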

D. Fairness and Clinical Safety

Bias is a patient safety risk. Period.

Examples:

- Biased staffing models that over-float junior nurses

- Documentation score models that underrepresent certain language patterns

- Med alerts that misfire due to race-agnostic data design

Governance needs to:

- Audit performance by race, gender, language, and shift type

- Include nurse leaders, DEI officers, and patient safety reps in model review

- Flag drift in fairness metrics, not just accuracy

A system that scales inequity is just automated harm.
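A subgroup audit can start as simply as computing the same performance metric per group and flagging any group that trails the best one. The sketch uses accuracy by shift type for brevity; the 5-point gap threshold and the function names are assumptions, and for safety alerts a metric such as the missed-event rate per group may matter more than raw accuracy. Re-running it on each audit window is one way to watch for drift in the gaps themselves.

```python
from collections import defaultdict


def subgroup_accuracy(records: list[tuple[str, int, int]]) -> dict[str, float]:
    """records: (group, predicted_label, actual_label) per case; returns accuracy per group."""
    hits: dict[str, int] = defaultdict(int)
    totals: dict[str, int] = defaultdict(int)
    for group, predicted, actual in records:
        totals[group] += 1
        hits[group] += int(predicted == actual)
    return {g: hits[g] / totals[g] for g in totals}


def fairness_flags(records: list[tuple[str, int, int]], max_gap: float = 0.05) -> list[str]:
    """Groups whose accuracy trails the best-performing group by more than max_gap."""
    acc = subgroup_accuracy(records)
    best = max(acc.values())
    return sorted(g for g, a in acc.items() if best - a > max_gap)


# Hypothetical audit window for a staffing-risk model, grouped by shift type.
window = [
    ("day", 1, 1), ("day", 0, 0), ("day", 1, 1), ("day", 0, 0),
    ("night", 1, 1), ("night", 0, 1), ("night", 0, 0), ("night", 1, 1),
]
print(subgroup_accuracy(window), fairness_flags(window))
```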

Operational ROI vs. Organizational Inertia

Let’s get honest: the biggest barrier to scaling Enterprise AI in nursing is institutional inertia.

Most systems already have the tools or access to them. What they don’t have is alignment.

- Between IT and nursing ops

- Between model governance and clinical leadership

- Between intent and execution

That gap costs real money, every day.

The Price of Delay

Let’s do the math:

- Each nurse lost to burnout costs an estimated $60K–$90K in turnover and retraining alone.

- Nurses lose 2+ hours per shift to inefficiencies that current technology can already fix.

- Multiply that by 1.7M U.S. hospital-based RNs, and you’re looking at hundreds of millions of lost nursing hours every year.
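The scale of that last figure is easy to check. Only the 1.7M nurse count and the 2 hours per shift come from the text; the 150 shifts per year (roughly three 12-hour shifts a week for 50 weeks) is an assumption, and applying the full 2 hours to every nurse on every shift makes this a rough estimate, not a measurement.

```python
# From the text: nurse count and hours lost per shift.
HOSPITAL_RNS = 1_700_000
HOURS_LOST_PER_SHIFT = 2

# Assumption, not from the text: about three 12-hour shifts a week for 50 weeks.
SHIFTS_PER_YEAR = 3 * 50

hours_per_round_of_shifts = HOSPITAL_RNS * HOURS_LOST_PER_SHIFT  # 3,400,000 hours
annual_hours_lost = hours_per_round_of_shifts * SHIFTS_PER_YEAR   # 510,000,000 hours
print(f"{annual_hours_lost:,} nursing hours per year")            # 510,000,000 nursing hours per year
```

Even at half that shift count, the total stays in the hundreds of millions of hours.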

Meanwhile:

- Safety incidents tied to fatigue and coordination breakdowns are rising

- Litigation risk follows poor documentation and staffing gaps

- Quality scores are flatlining in under-resourced units

Enterprise AI is an opportunity cost recovery engine. Every quarter spent in pilot mode is a quarter you’re leaking talent, trust, and throughput.

Why It’s Not Scaling

Because leadership keeps trying to delegate a re-architecture to a procurement cycle.

You can’t patch a broken operating model with better UI. You can’t “vendor” your way out of systemic drag.

Real ROI comes from redesign:

- Interoperability that eliminates data double-entry

- Scheduling systems that reflect clinical reality, not HR convenience

- Embedded documentation tools that write notes the way nurses think

- Governance models that learn from friction, not just report it

The Decision Point

Leadership has two choices:

- Treat Enterprise AI like an IT feature and watch adoption stall

- Treat Enterprise AI as a clinical infrastructure mandate and rebuild accordingly

The difference? One drains the budget. The other restores capacity.

If you’re seeing burnout, turnover, or stagnation on the floor, it’s a systems problem. One that you now have the tools to solve.