Key Takeaways

- The failure point in Enterprise AI isn’t model design—it’s data infrastructure fragility.

- Enterprise AI systems can’t scale on pipelines that lack lineage, schema stability, and observability.

- Model-ready means semantically normalized, metadata-rich, and audit-traceable by default.

- Governance alone doesn’t cut it—engineering discipline must own pipeline reliability.

- CMS 2026 mandates aren’t a policy risk—they’re a systems design problem.

- Better models won’t fix bad joins, stale feeds, or unindexed PHI.

The biggest failure in healthcare isn’t model accuracy. It’s infrastructure fragility.

Payers are spending billions implementing Enterprise AI systems, from predictive fraud detection to GenAI-powered prior authorization. But the real bottleneck isn’t algorithmic performance. It’s data architecture.

Each year, the industry wastes over $30 billion not because models are underpowered, but because they’re deployed on top of brittle, fragmented, and opaque data systems. The pipelines feeding these systems are error-prone, poorly versioned, and nearly impossible to scale—let alone audit.

This isn’t just a data problem. It’s a design failure at the system level.

Why It’s a 2024 Crisis, Not a 2030 One

The timing couldn’t be worse. With CMS mandates pushing for real-time interoperability and increased scrutiny on explainability, payers are under pressure to modernize.

But most organizations are still managing EHR and claims data through legacy pipelines that can’t support the responsiveness, traceability, or longitudinal consistency that LLMs and advanced decision systems require.

That gap between regulatory intent and operational capability is only growing wider.

You can’t deploy GenAI in a world where your data lineage dies in a flat file. And you can’t deliver real-time adjudication or fraud orchestration without a unified, semantically normalized data fabric.

Enterprise AI systems can’t thrive on duct-taped infrastructure.

The Model Isn’t the Problem

Tuning model weights won’t fix brittle schemas. Upgrading your LLM won’t explain away a missing timestamp. And no predictive pipeline can overcome dirty joins or phantom IDs.

The solution isn’t smarter models. It’s model-ready data systems—architected from the ground up to support real-time interoperability, semantic alignment, and explainability. That requires rethinking how data is collected, structured, governed, and surfaced—not just for a single use case, but across the full operational spectrum of Medicare Advantage, Medicaid, and Commercial lines.

Technical Architecture: How to Build Scalable Data Pipelines for Enterprise AI

Predictive modelling in healthcare is only as good as its data scaffolding. For healthcare payers working with Enterprise AI, the architecture that underpins data flow—how it’s collected, transformed, and structured—is the difference between scalable impact and system-wide fragility.

This section breaks down the core technical architecture principles needed to deliver model-ready data: data that is reliable, interoperable, explainable, and regulation-aligned from ingestion to inference.

A. Semantic Normalization & Schema Design

Inconsistent coding, redundant values, and ambiguous identifiers are the Achilles heel of healthcare analytics. To move from reactive data cleansing to proactive data readiness, semantic normalization must be embedded into architectural design.

- Standardized Vocabularies: Adopt LOINC, SNOMED CT, and RxNorm to harmonize lab results, diagnoses, and medication data. This ensures interpretability across different systems and facilitates downstream model integration.

- NLP-Assisted Codification: Use Natural Language Processing (NLP) to extract structured representations from unstructured clinical text—progress notes, discharge summaries, or faxes—turning noisy input into structured features.

- Schema Strategy: Design schemas that are purpose-built for use cases like risk adjustment, fraud detection, or prior authorization—with native support for crosswalking between payer, provider, and plan-level datasets.

The goal: reduce preprocessing and increase model reliability through consistent, queryable semantics.

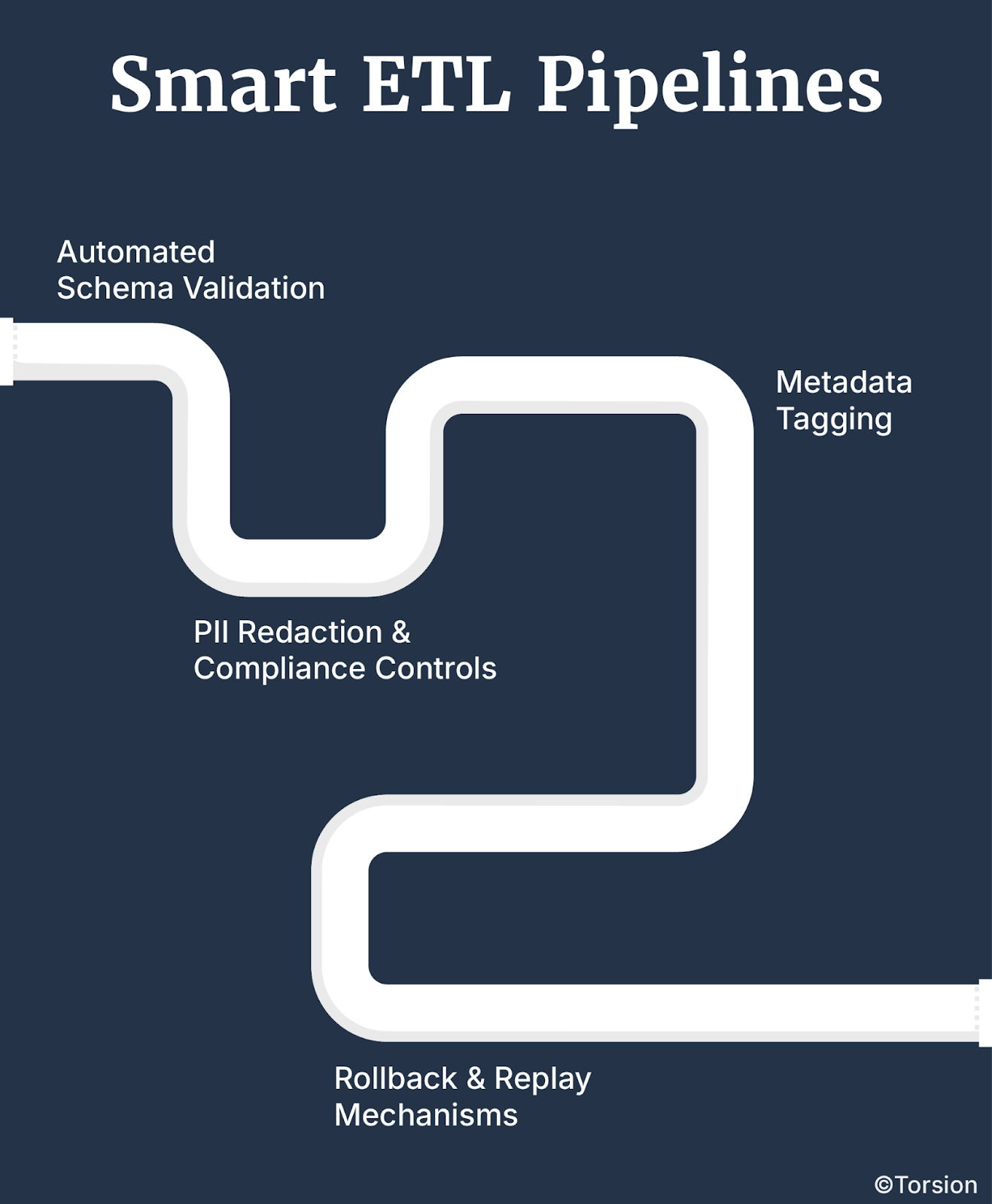

B. Smart ETL Pipelines

Forget brittle pipelines that break silently. Healthcare data pipelines need to be resilient, traceable, and smart enough to self-correct.

- Automated Schema Validation: Build ingestion workflows that check for schema compliance, version mismatches, and field-level anomalies before data hits downstream systems.

- PII Redaction & Compliance Controls: Integrate PHI and PII detection modules that automatically flag or redact sensitive information, ensuring HIPAA and CMS compliance at the point of entry.

- Metadata Tagging: Apply tags to every data object to track lineage, ingestion source, and transformation logic—enabling transparency for regulators and model auditing.

- Rollback & Replay Mechanisms: When pipelines fail, rollback must be instant, and replay must be traceable. Resilience is table stakes in production-grade systems.

The result: lower time-to-insight, reduced manual intervention, and pipelines that are model-aware by design.

C. Metadata-Driven Architecture

Metadata isn’t an accessory—it’s the operating system for scalable data infrastructure.

- Traceability from Ingestion to Inference: Every element in the pipeline—structured, unstructured, transformed, or synthesized—must carry context: who touched it, when, how, and why.

- Self-Scoring Datasets: Embed scoring logic into datasets to flag schema drift, freshness issues, and completeness thresholds. Data that can assess its own reliability reduces noise before it hits a model.

- Interoperability Enforcement: Use metadata policies to enforce standardization across claims, clinical, pharmacy, and SDoH domains—especially for multi-payer or multi-state datasets.

Good metadata systems don’t just describe your data—they govern how your system thinks about your data.

D. Unified Member Indexing & Longitudinal Data Views

Healthcare is multi-episodic. A single patient ID doesn’t cut it. Without member resolution, you’re modeling blind.

- Cross-Payer Identity Resolution: Build entity resolution systems that consolidate member identities across Medicaid, Medicare Advantage, and Commercial plans—eliminating duplication and fragmentation.

- Longitudinal Patient Views: Architect datasets that stitch together multi-touchpoint interactions over time—covering medical history, utilization, pre-auth activity, and social context.

- Model-Oriented Views: Don’t just create member-level data. Design longitudinal views that are structured around model consumption—supporting both real-time scoring and historical pattern analysis.

The payoff? Cohesive member intelligence that powers explainable, high-accuracy predictive modeling systems without sacrificing traceability.

Key Insight: Architecture Enables Intelligence

Building GenAI or predictive systems without a unified, metadata-rich, semantically aligned architecture is like constructing a skyscraper without a foundation.

Enterprise AI systems don’t fail because of bad math. They fail because of fragile data engineering.

To future-proof your infrastructure, build pipelines and schemas that are not just technically sound—but explainable, compliant, and natively aligned with how models think.

Data as a Product: Moving from Governance to Data Engineering

Most payers treat governance as a compliance function. But in an era where Enterprise AI is embedded into core workflows—claims adjudication, prior auth automation, fraud detection—that approach is no longer sufficient.

Governance alone won’t fix broken pipelines or explain why your models are underperforming.

To build data systems that scale, payers need to adopt a product mindset: treating data not as a static asset, but as an engineered product with reliability guarantees, defined ownership, and lifecycle management.

From Governance to Engineering Discipline

Compliance frameworks (CMS, HIPAA, OCR) ask the right question: “Can you explain what fed the model?” But traditional data governance functions often can’t answer that at scale.

Why? Because they’re reactive—focused on policy enforcement rather than proactive engineering design.

Enter Data Product Thinking.

This shift reframes governance not as oversight, but as technical accountability. It demands a system in which data has:

- Owners

- Performance metrics

- Lifecycle observability

- SLAs linked directly to downstream impact

In short, data becomes infrastructure, and infrastructure requires engineering.

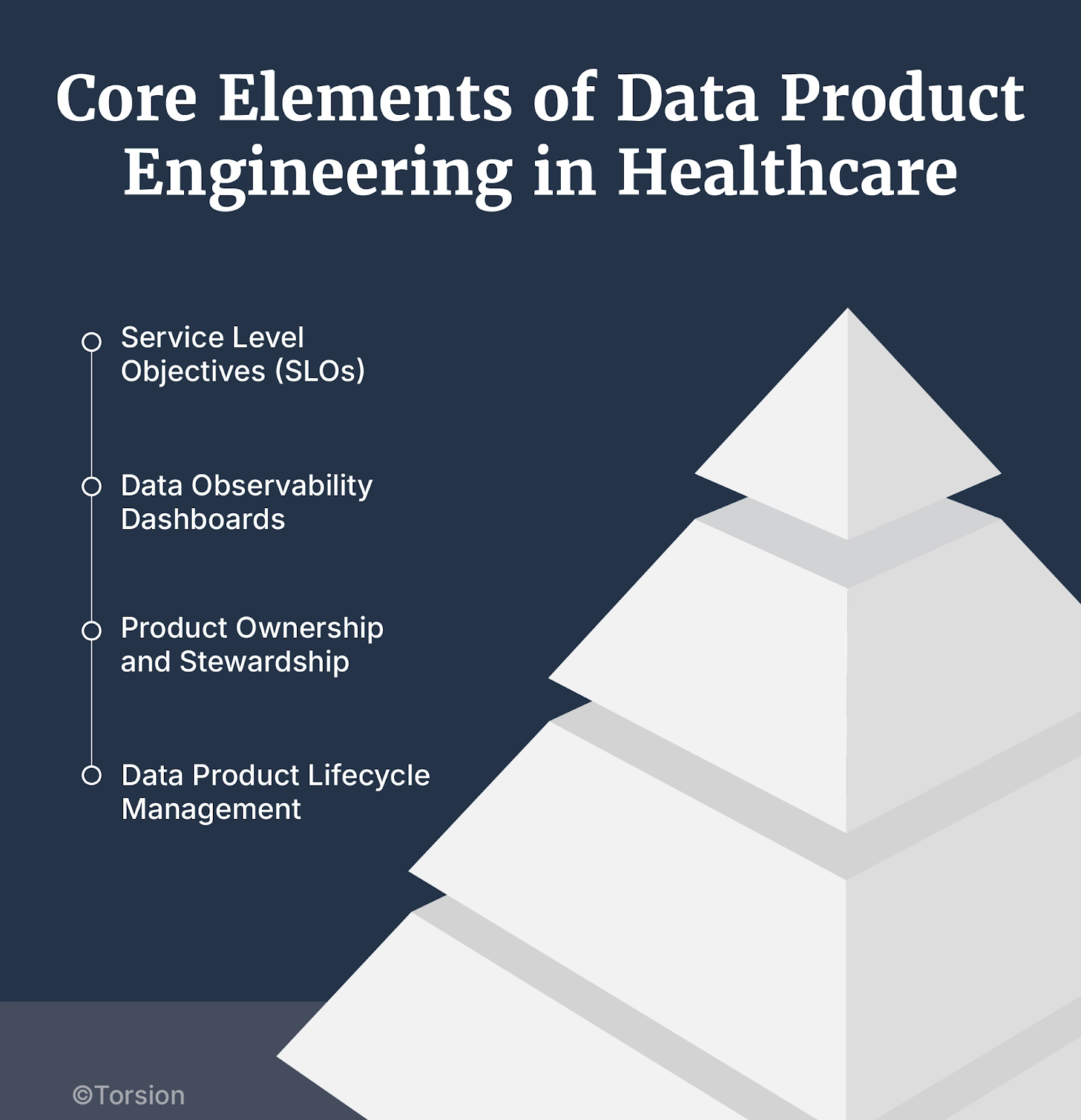

Core Elements of Data Product Engineering in Healthcare

Service Level Objectives (SLOs)

- Define SLOs not for uptime, but for model readiness—latency thresholds, freshness intervals, schema stability, and data completeness.

- Tie these SLOs to model performance KPIs: fraud recall, authorization turnaround time, cost recovery rate.

- If data isn’t reliable enough to support a production system, it’s not “product-grade” data.

Data Observability Dashboards

- Build systems that visualize data health in real time—including ingestion errors, drift detection, lineage gaps, and PII leakage.

- Use anomaly detection and trend analytics to preempt issues before they cascade into model failure.

- Observability isn’t just about dashboards—it’s about creating shared visibility between data engineers, compliance teams, and model owners.

Product Ownership and Stewardship

- Assign data product owners—engineers accountable not just for pipelines, but for the quality and traceability of their data products.

- Establish feedback loops with downstream users—fraud investigators, clinicians, ops analysts—to validate data usability.

- Create versioning protocols and release notes for datasets, just like code artifacts.

Data Product Lifecycle Management

- Treat data systems as evolving products with iterative improvements, depreciation plans, and migration roadmaps.

- Bake regulatory requirements into the lifecycle (e.g., CMS 2026 transparency mandates, OCR audit readiness).

- Engineer backwards compatibility to prevent pipeline disruptions as schemas evolve.

Key Insight: If You Can’t Engineer It, You Can’t Scale It

Most model failures don’t originate at the model. They originate in unversioned joins, undocumented transformations, stale data feeds, and governance teams that can’t see into the pipeline.

Enterprise AI succeeds when data engineering takes ownership, not just responsibility. That means building data systems with the same discipline, accountability, and rigor that’s expected of any other production system.

This is what separates innovation that scales from proof-of-concept purgatory.

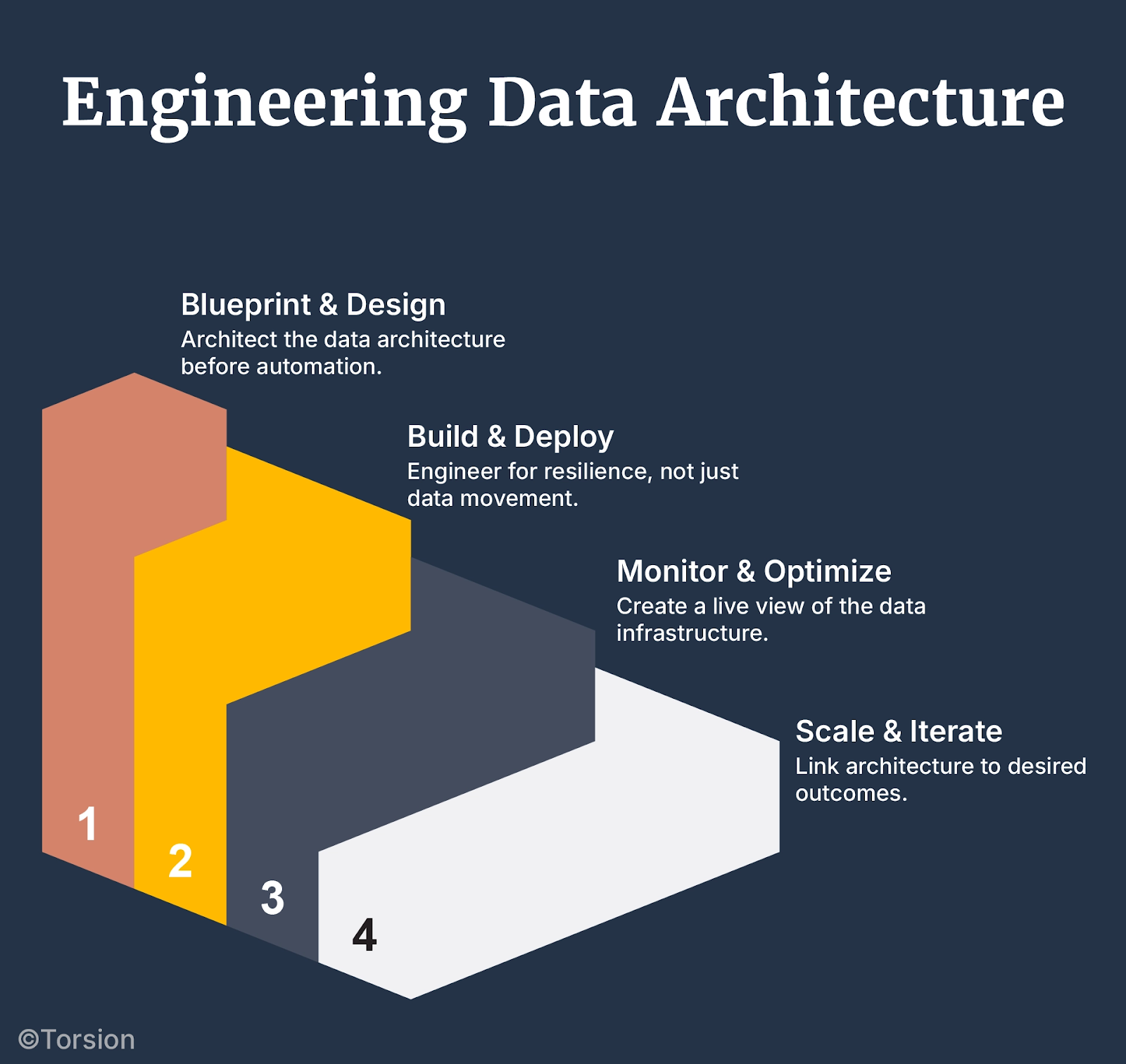

Implementation Roadmap: Engineering Your Data Architecture

Building reliable, interoperable, and explainable data systems doesn’t happen through sporadic tooling or incremental fixes. It requires a deliberate, systems-level architecture built with engineering discipline, regulatory foresight, and scalability in mind.

Here’s a step-by-step roadmap for healthcare payers ready to shift from fragmented pipelines to production-grade, model-ready infrastructure.

Step 1: Blueprint & Design — Architect Before You Automate

Start with architectural clarity. Retrofits don’t scale.

- Align with FHIR, USCDI, and HL7 Standards

Map your architecture against these standards—not just for regulatory alignment, but to enable seamless data exchange across provider networks, clearinghouses, and internal systems. - Establish Schema Definitions

Create canonical schemas for claims, EHRs, SDoH, pharmacy, and pre-auth data. Version every schema. Document every field. - Set Metadata Requirements Upfront

Require every data object to carry lineage, timestamps, ingestion source, and data classification tags (e.g. PHI, structured, synthetic). This is what will make your systems explainable and audit-ready.

Step 2: Build & Deploy — Engineer for Resilience, Not Just Movement

Build pipelines that are intelligent, self-healing, and modular.

- Smart ETL with Validation Checkpoints

Ingest with validation baked in. Auto-detect schema violations. Flag missing keys. Enable rollback. Pipelines should fail loudly, not silently. - Anomaly Detection as Default

Use statistical models to detect drift, outliers, and data quality degradation in-flight—not after it hits a dashboard. - Deploy Unified Member Indexing

Use ML-based entity resolution to consolidate patient and provider identities across Medicare Advantage, Medicaid, and Commercial. This step is non-negotiable for longitudinal modeling and cross-plan analytics. - Redaction & Privacy Guards at Ingestion

Apply PII/PHI detection before data hits storage. Tag it. Encrypt it. Audit it. CMS and OCR aren’t asking for “best effort.” They want defensibility.

Step 3: Monitor & Optimize — Create a Live View of Your Data Infrastructure

Operationalize observability. Treat your pipelines like production systems because they are.

- Implement Drift Monitoring Dashboards

Monitor schema stability, nulls, and missingness across datasets over time. Show your compliance team what your systems are doing—not just what they were supposed to do. - Track Model-Data Dependencies

Know which pipelines feed which models, and what happens when upstream schemas change. This is the glue that prevents silent model failure. - Log Transformations and Access

Every touchpoint—by people, processes, or models—should be logged and queryable. If you can’t trace a prediction back to its data source, your system isn’t explainable.

Step 4: Scale & Iterate — Link Architecture to Outcomes

Too many architecture projects stall at the MVP stage. To scale, you need feedback loops between data reliability and model performance.

- Align Data Improvements with Model KPIs

Instrument datasets so that improvements in quality (freshness, completeness, consistency) can be tied to improvements in fraud recall, prior auth turnaround time, or member engagement rates. - Version for Governance, Not Just Git

Schema versioning, metadata snapshots, and audit-ready change logs are your insurance policy when CMS, OCR, or internal auditors come knocking. - Continuously Monitor Compliance Readiness

Stay ahead of 2026–2027 CMS mandates. Run simulations. Audit yourself before they do. Build with defensibility, not just interoperability.

Best Practice: Don’t Just Integrate—Engineer

This roadmap isn’t about patching broken flows. It’s about engineering healthcare data systems like production software, with the same SLAs, quality gates, and traceability that govern your core claims stack.

Because when your data platform is built to be explainable, interoperable, and resilient by design, every downstream system—GenAI or otherwise—gets exponentially more reliable.

Why Payers Need Infrastructure-First Thinking

If you’re serious about operationalizing Enterprise AI, whether it’s fraud detection, prior auth automation, or GenAI-powered case summarization, you need to treat data systems as infrastructure, not as an afterthought.

This means:

- Architecting for lineage and traceability, not just ingestion.

- Designing for interoperability and compliance, not just movement.

- Building model-ready pipelines that are observable, explainable, and defensible—by default.

Stop trying to fix broken outcomes with better models. Start fixing the system that feeds them.

Invest in your schemas. Engineer your pipelines. Standardize your metadata. Because models don’t make systems scalable, architecture does.

The future of healthcare intelligence will be won by the organizations that treat their data infrastructure with the same rigor they treat their claims systems.

The next leap in payer transformation isn’t algorithmic, it’s architectural.